Ethan Perez

@EthanJPerez

Large language model safety

ID:908728623988953089

https://scholar.google.com/citations?user=za0-taQAAAAJ

15-09-2017 16:26:02

985 Tweets

6.4K Followers

464 Following

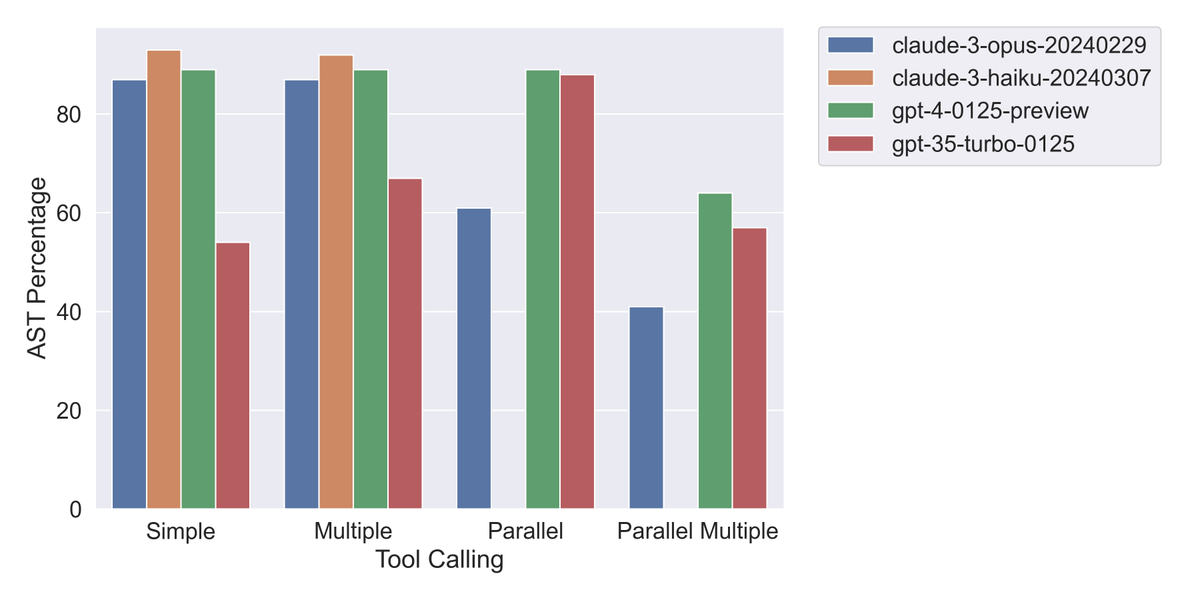

I benchmarked Anthropic's new tool use beta API on the Berkeley function calling benchmark. Haiku beats GPT-4 Turbo in half of the scenarios. Results in 🧵

A huge thanks to Shishir Patil, Fanjia Yan, Tianjun Zhang, Joey Gonzalez & rest for providing this benchmark publicly.

Eliezer Yudkowsky ⏹️ while computers may excel at soft skills like creativity and emotional understanding, they will never match human ability at dispassionate, mechanical reasoning

This seems like a good time to mention that I've taken a part-time role at Google DeepMind working on AI Safety and Alignment!

![lmsys.org (@lmsysorg) on Twitter photo 2024-03-26 22:57:35 [Arena Update] 70K+ new Arena votes🗳️ are in! Claude-3 Haiku has impressed all, even reaching GPT-4 level by our user preference! Its speed, capabilities & context length are unmatched now in the market🔥 Congrats @AnthropicAI on the incredible Claude-3 launch! More exciting](https://pbs.twimg.com/media/GJoXWeSaUAA9Waz.jpg)