Jeremy Howard

@jeremyphoward

🇦🇺 Co-founder: @AnswerDotAI & @FastDotAI ;

Hon Professor: @UQSchoolITEE ;

Digital Fellow: @Stanford

ID:175282603

http://answer.ai 06-08-2010 04:58:18

54.8K Tweets

220.6K Followers

4.9K Following

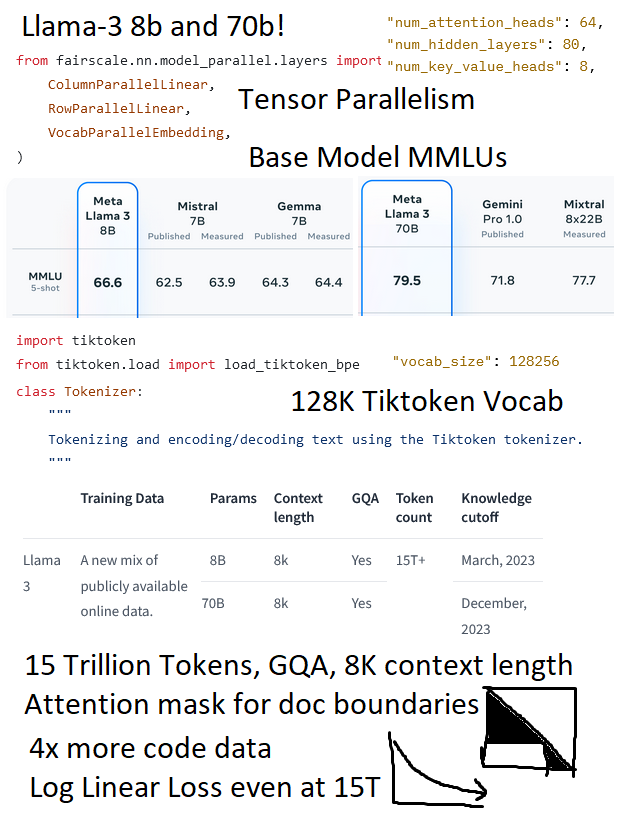

We've just uploaded a GGUF of the 8b llama-3 instruct model on Nous Research's huggingface org:

huggingface.co/NousResearch/M…

Mikel Artetxe: I'd just like to interject for a moment. What you're referring to as <model>, is in fact, Llama 3/<model>, or as I've recently taken to calling it, Llama 3 plus <model>.