Johannes Oswald

@oswaldjoh

Research Scientist, Google Research & ETH Zurich alumnus

ID:867349295464316928

24-05-2017 11:59:21


🦎 Can we teach Transformers to perform in-context Evolutionary Optimization? Surely! We propose Evolutionary Algorithm Distillation for pre-training Transformers to mimic evolutionary-algorithm teachers 🧑🏫

🎉 Work done at Google DeepMind 🗼 with Yingtao Tian & Yujin Tang 🤗

📜: arxiv.org/abs/2403.02985

arXiv drop tonight

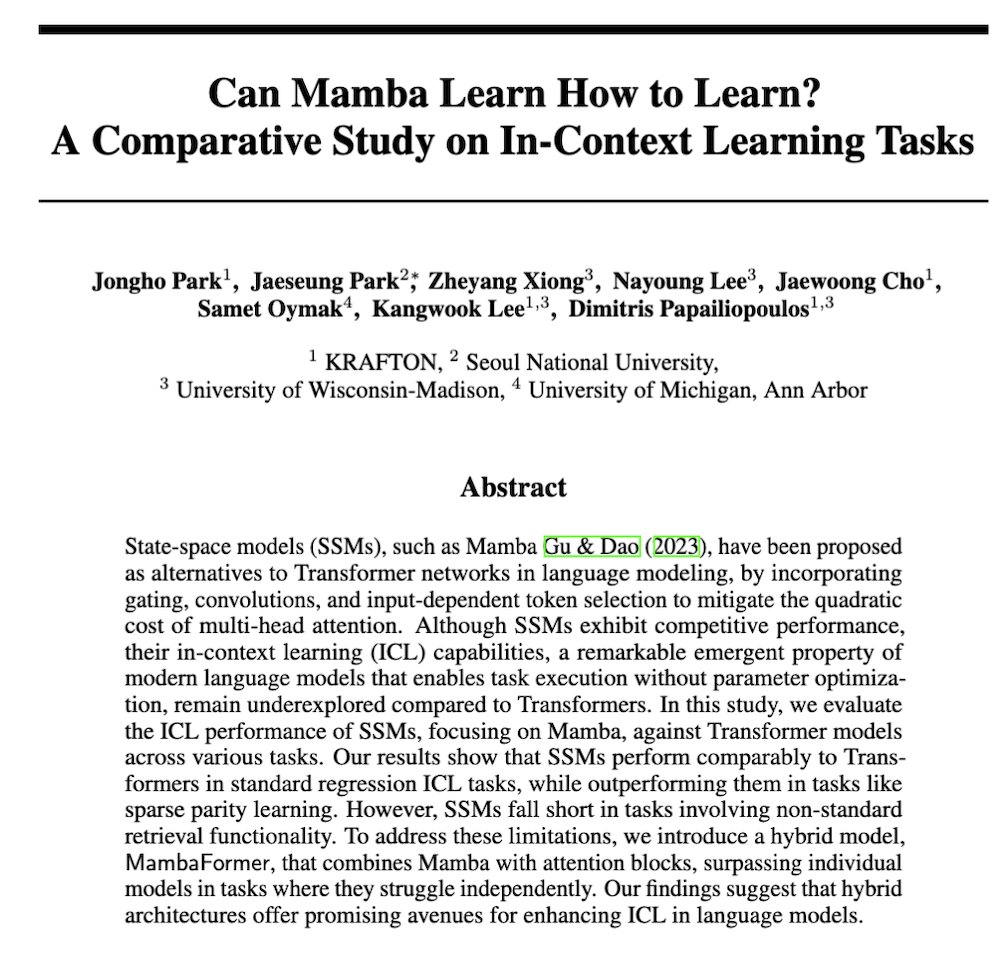

'Can Mamba Learn How to Learn?: A Comparative Study on In-Context Learning Tasks'

with an all-star set of collaborators from Krafton Inc., Seoul National University, the University of Michigan, and UW–Madison

1/ Our paper is out!

Teaching Arithmetic to Small Transformers

We investigate several factors that control the emergence of basic arithmetic in small transformers (e.g., nanoGPT).

paper: arxiv.org/abs/2307.03381

Work led by: Nayoung Lee & Kartik Sreenivasan

Thread below.

Sasha Rush: Actually, linear RNNs with GLUs can behave like linear attention 😉 arxiv.org/abs/2309.01775
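A minimal sketch of the identity behind that claim, assuming unnormalized causal linear attention (no softmax, no feature map): the attention output y_t = Σ_{s≤t} (q_t·k_s) v_s can equally be computed by a linear recurrence whose state accumulates outer products v_t k_t^T and is read out by multiplying with q_t. Those two multiplicative terms are the kind of interaction a gated (GLU-style) layer can supply. This illustrates the equivalence only; it is not the construction from the linked paper, and the function names are hypothetical.

```python
# Sketch: unnormalized causal linear attention computed two ways.
# Assumption: no softmax and no feature map; Q, K, V are lists of vectors.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_parallel(Q, K, V):
    """Direct form: y_t = sum over s <= t of (q_t . k_s) * v_s."""
    out = []
    for t, q in enumerate(Q):
        y = [0.0] * len(V[0])
        for s in range(t + 1):
            w = dot(q, K[s])
            y = [yi + w * vi for yi, vi in zip(y, V[s])]
        out.append(y)
    return out

def attention_recurrent(Q, K, V):
    """Linear RNN form: S_t = S_{t-1} + v_t k_t^T, readout y_t = S_t q_t."""
    d_v, d_k = len(V[0]), len(K[0])
    S = [[0.0] * d_k for _ in range(d_v)]  # state matrix of shape d_v x d_k
    out = []
    for q, k, v in zip(Q, K, V):
        # Identity state transition plus an outer-product input (a multiplicative term).
        S = [[S[i][j] + v[i] * k[j] for j in range(d_k)] for i in range(d_v)]
        # Multiplicative readout of the state by the current query.
        out.append([dot(row, q) for row in S])
    return out

Q = [[1.0, 0.0], [0.5, 2.0], [-1.0, 1.0]]
K = [[0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
V = [[1.0, 2.0, 3.0], [0.0, -1.0, 1.0], [4.0, 0.0, 2.0]]
parallel = attention_parallel(Q, K, V)
recurrent = attention_recurrent(Q, K, V)
```

Both functions return the same outputs; the recurrent form needs only a fixed-size state per step, which is why linear attention can be run as an RNN.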

Artificial General Intelligence Is Already Here, by Peter Norvig and me in Noema Magazine: 'Today’s most advanced AI models have many flaws, but decades from now they will be recognized as the first true examples of artificial general intelligence.' noemamag.com/artificial-gen…

Blog post!!

Rumors of the death of RNNs have been largely exaggerated...

In this post I summarize why and how RNNs are making a comeback in ML, and what this means for theorists of neural computation.

Many thanks to Nicolas Zucchet for help and corrections!

adrian-valente.github.io/2023/10/03/lin…

Very happy to share that this work got accepted to #NeurIPS2023 as a spotlight 🥳

It's my first-ever acceptance at NeurIPS, and I got an additional poster as the cherry on top!