Sam Bowman

@sleepinyourhat

AI alignment + LLMs at NYU & Anthropic. Views not employers'. No relation to @s8mb. I think you should join @givingwhatwecan.

ID:338526004

https://cims.nyu.edu/~sbowman/

19-07-2011 18:19:52

2.2K Tweets

34.6K Followers

3.1K Following

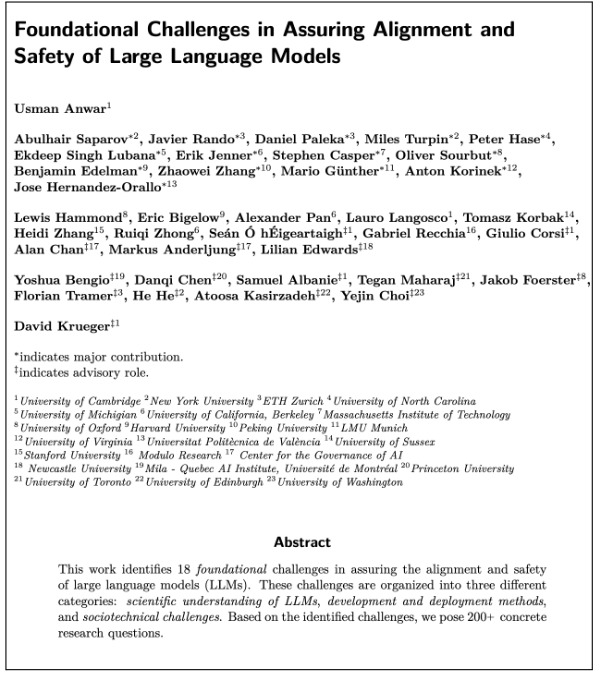

I’m super excited to release our 100+ page collaborative agenda - led by Usman Anwar - on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from NLP, ML, and AI Safety communities!

Some highlights below...

I'm incredibly excited to have Craig joining us on the Anthropic Interpretability team!

I've been a huge fan of Colaboratory for nearly a decade (I used it internally at Google!) and have really admired Craig's work on it.

🚨📄 Following up on 'LMs Don't Always Say What They Think', Miles Turpin et al. now have an intervention that dramatically reduces the problem! 📄🚨

It's not a perfect solution, but it's a simple method with few assumptions and it generalizes *much* better than I'd expected.

Want to work at the frontier of AI policy with the most technical policy team in the business? You do? Excellent. Please consider applying:

- Special Projects Lead jobs.lever.co/Anthropic/5752…

- Policy Analyst, Product jobs.lever.co/Anthropic/6ecd…

- Outreach Lead jobs.lever.co/Anthropic/df58…