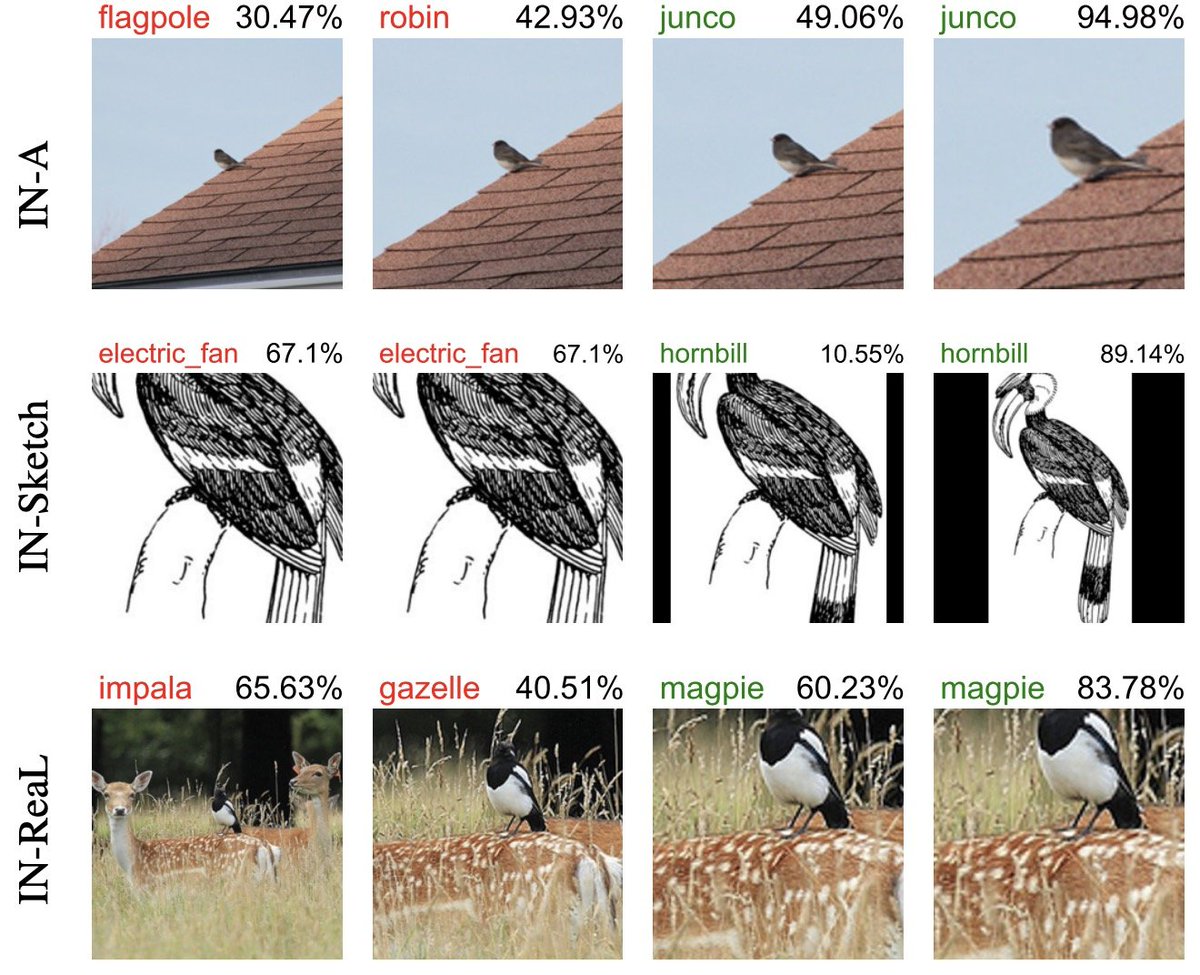

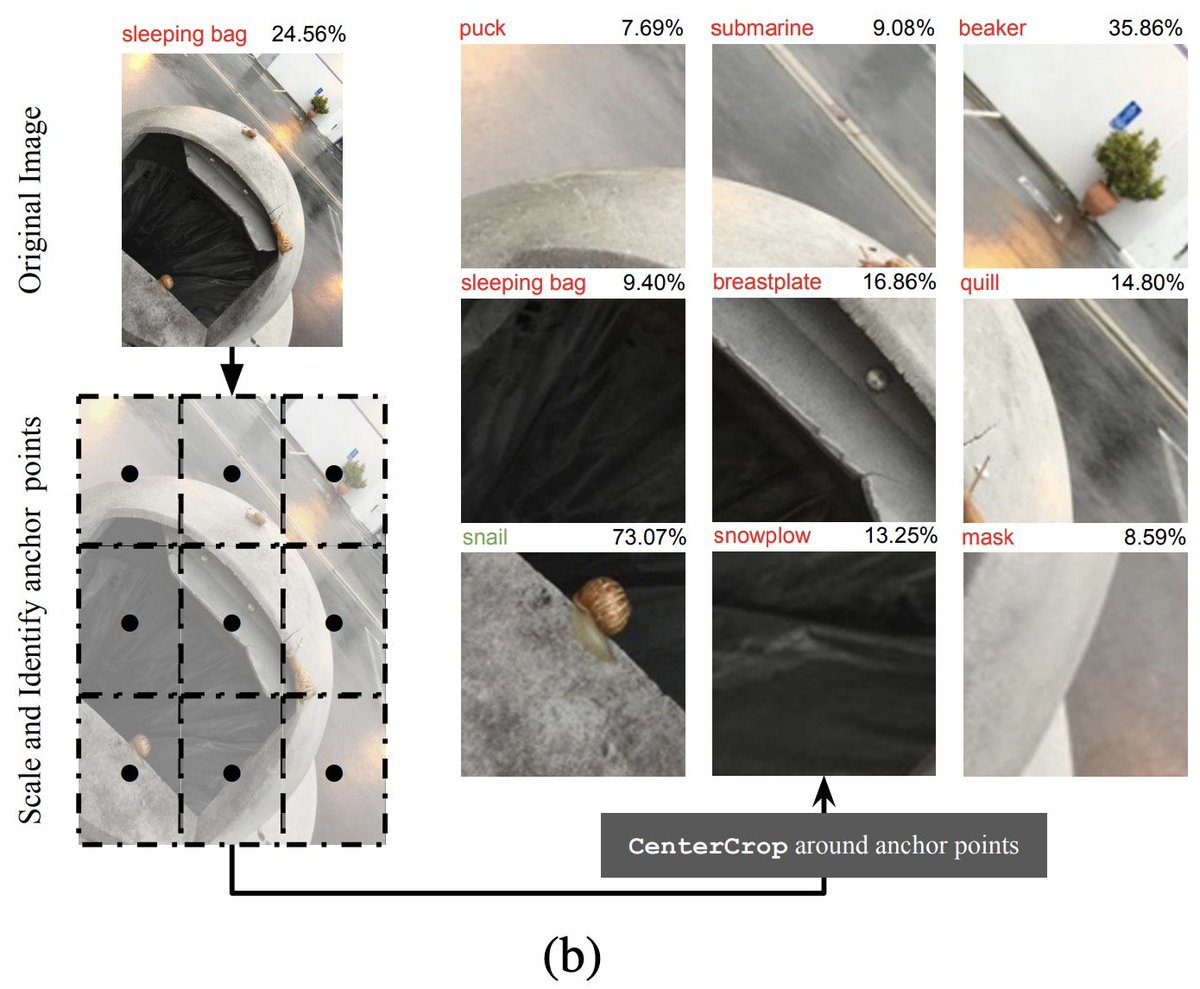

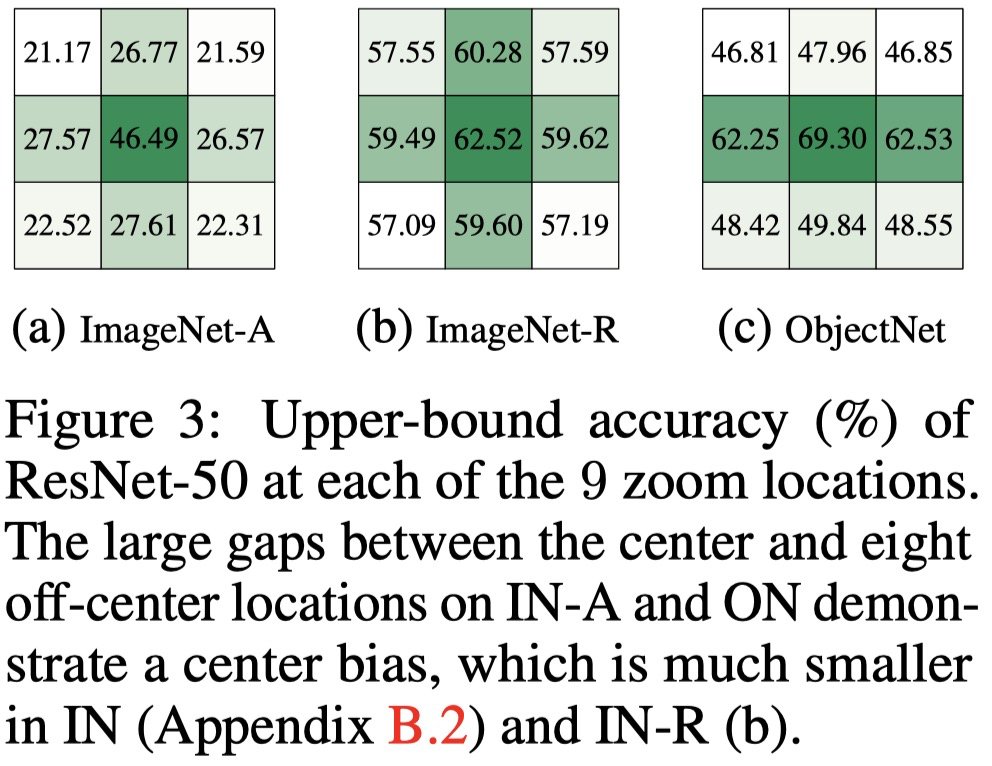

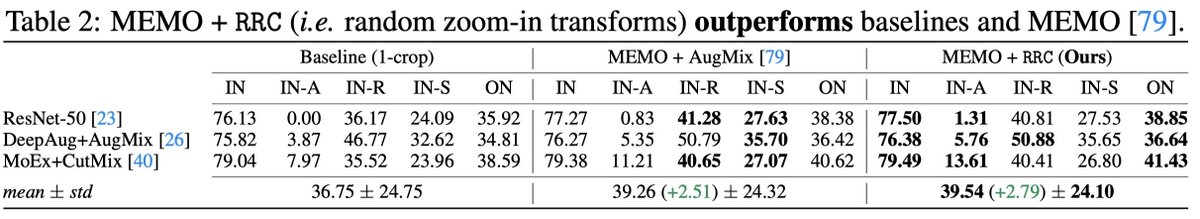

Integrating a zoom-in process into MEMO (Zhang, Chelsea Finn & Sergey Levine, 2022), a state-of-the-art test-time augmentation method, can increase prediction accuracy even further.
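For readers unfamiliar with MEMO: it adapts a model to a single test image by minimizing the entropy of the model's marginal prediction, meaning the class distribution averaged over several augmented copies of that image. Below is a minimal sketch of that objective, assuming center zoom-in crops serve as the augmentations. This is not the authors' code. The helpers `zoom_crops`, `marginal`, `entropy`, `memo_objective`, the `scales` values, and the toy model are hypothetical. The sketch only computes the objective and omits the gradient step MEMO applies to the model weights.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: a grayscale image as a list of pixel rows.
Image = List[List[float]]


def zoom_crops(img: Image, scales: Sequence[float]) -> List[Image]:
    """Return one roughly centered crop per scale (0.5 keeps half of each side)."""
    h, w = len(img), len(img[0])
    crops = []
    for s in scales:
        ch, cw = max(1, round(h * s)), max(1, round(w * s))
        top, left = (h - ch) // 2, (w - cw) // 2
        crops.append([row[left:left + cw] for row in img[top:top + ch]])
    return crops


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def marginal(probs: List[List[float]]) -> List[float]:
    """Average the per-view class distributions into one marginal distribution."""
    n, k = len(probs), len(probs[0])
    return [sum(p[c] for p in probs) / n for c in range(k)]


def entropy(dist: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(q * math.log(q) for q in dist if q > 0)


def memo_objective(
    model: Callable[[Image], List[float]], img: Image, scales: Sequence[float]
) -> Tuple[List[float], float]:
    """Score zoom-in views of one image with a MEMO-style marginal entropy.

    Returns the marginal distribution and its entropy. MEMO would backpropagate
    this entropy into the model weights before predicting; that step is omitted.
    """
    probs = [softmax(model(crop)) for crop in zoom_crops(img, scales)]
    m = marginal(probs)
    return m, entropy(m)


if __name__ == "__main__":
    # Toy two-class "model" (hypothetical): brighter crops favor class 0.
    def toy_model(crop: Image) -> List[float]:
        pixels = [v for row in crop for v in row]
        mean = sum(pixels) / len(pixels)
        return [mean, 1.0 - mean]

    image = [[(r * 4 + c) / 15 for c in range(4)] for r in range(4)]
    dist, score = memo_objective(toy_model, image, scales=(1.0, 0.75, 0.5))
    print("marginal:", dist, "entropy:", score)
```

Lower entropy means the zoomed views agree more confidently on one class. In an actual MEMO setup, that value would be the loss for a gradient update on a real network. The final prediction would then come from the adapted model.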

6/n

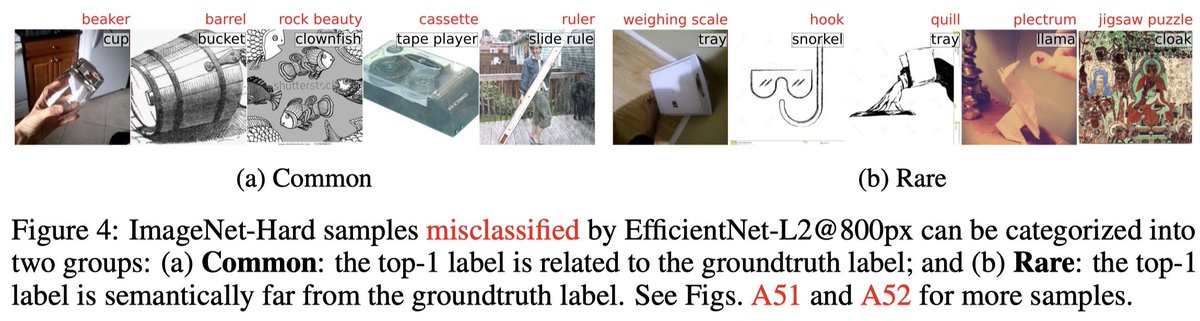

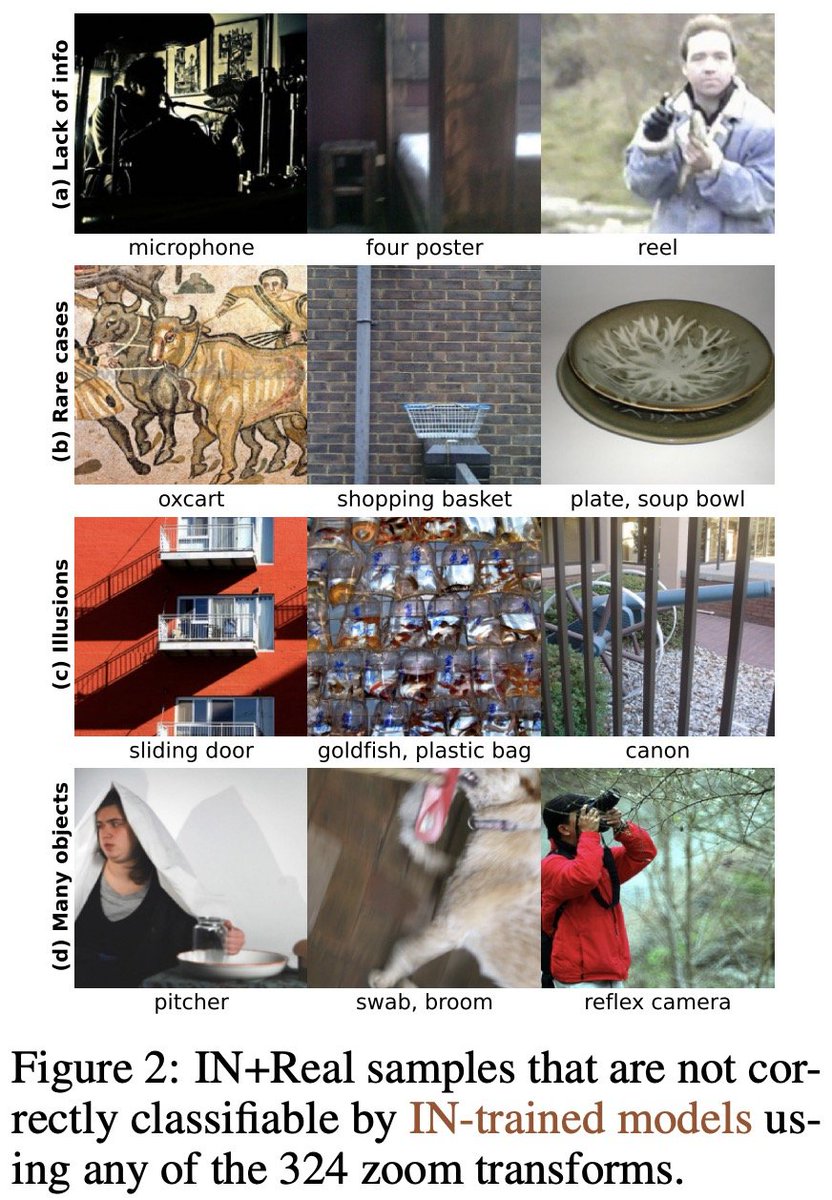

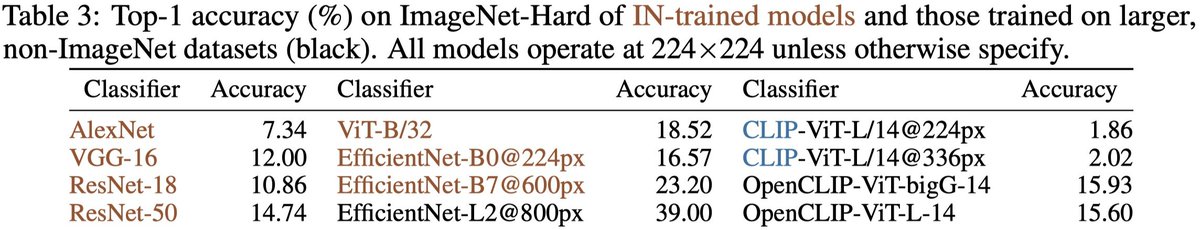

ImageNet-Hard is a new benchmark that challenges state-of-the-art vision-language models and ImageNet-trained classifiers.

Work led by the amazingly talented taesiri!!! With Giang Nguyen, Sarra Habchi, and Cor-Paul Bezemer.

Code, paper & dataset: taesiri.github.io/ZoomIsAllYouNe…

n/n