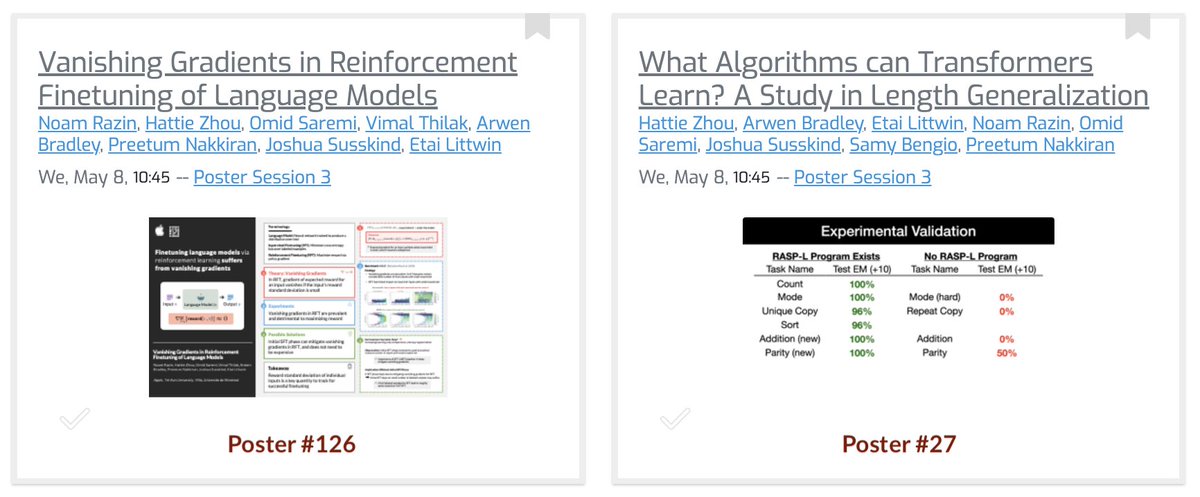

In Vienna for two 🔦 spotlights at #ICLR2024

🕵MuSR by Zayne Sprague et al., Tues 10:45am

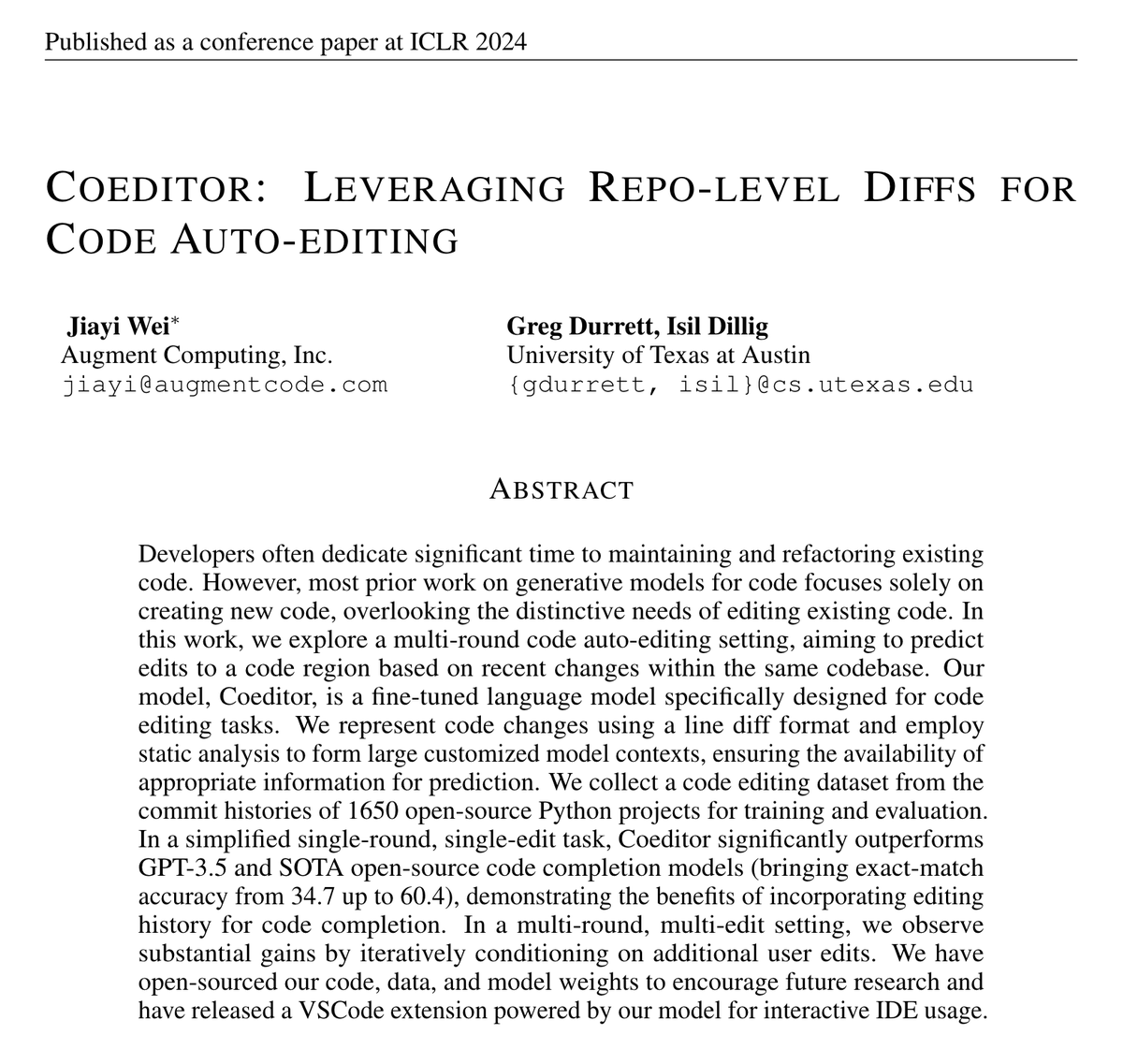

🧑💻✍️ Coeditor by Jiayi Wei with Isil Dillig, Thurs 10:45am (presented by me)

DM me if you're interested in chatting about these, reasoning + factuality in LLMs, RLHF, or other topics!

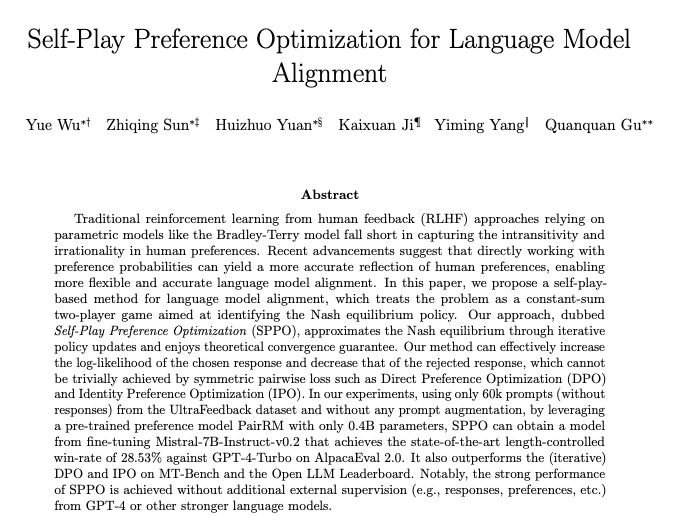

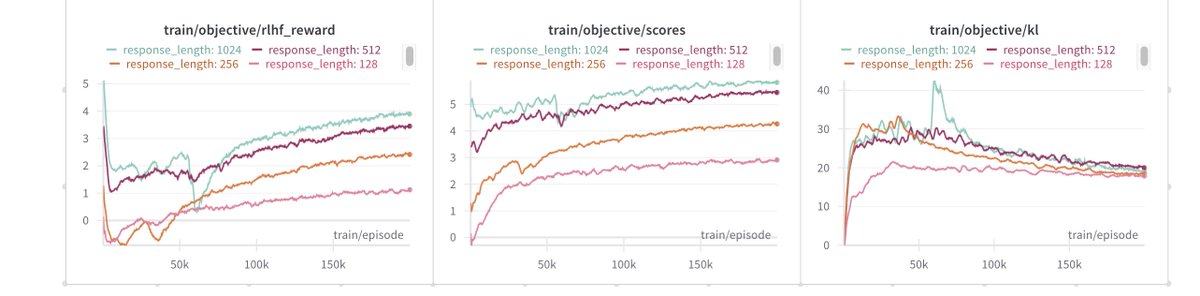

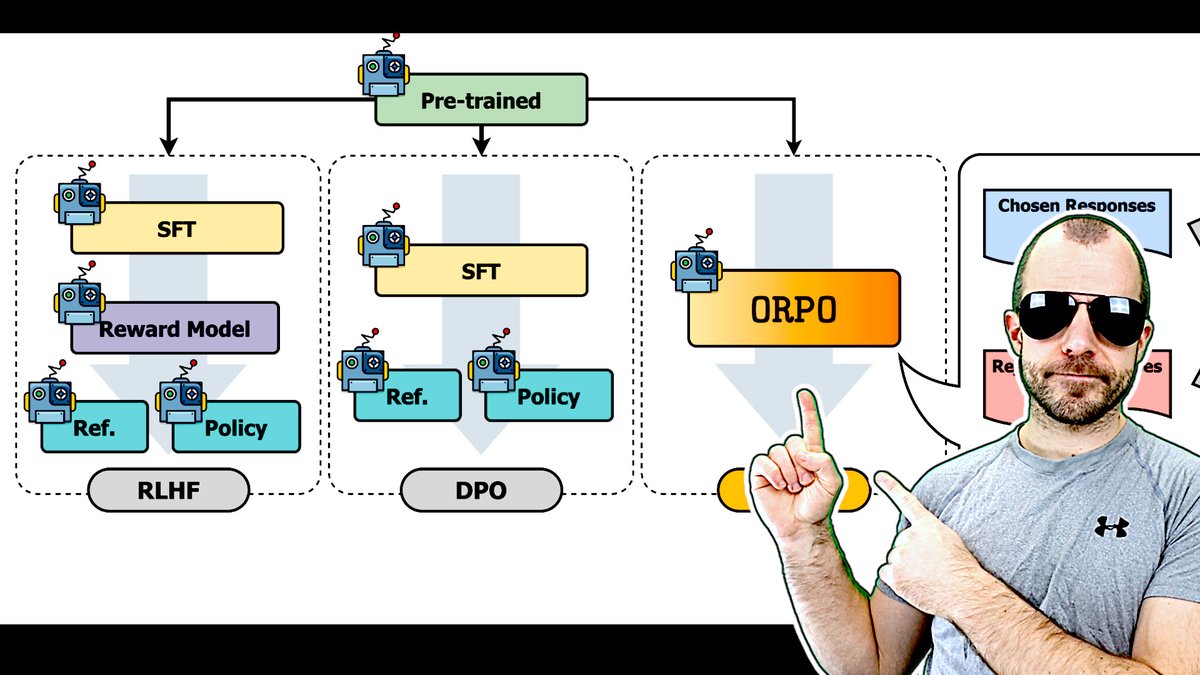

![fly51fly (@fly51fly) on Twitter, photo 2024-04-30 21:50:58](https://pbs.twimg.com/media/GMccCBsakAAlV3z.jpg)

[LG] DPO Meets PPO: Reinforced Token Optimization for RLHF

arxiv.org/abs/2404.18922

- This paper models RLHF as an MDP, offering a token-wise characterization of LLM's generation process. It theoretically demonstrates advantages of token-wise MDP over sentence-wise bandit…