Introducing a new blog post on interpretable data fusion for distributed learning via gradient matching. Unlike traditional methods, our approach offers human interpretability and maintains privacy. Check it out at: bit.ly/3UQeCok #machinelearning #datascience

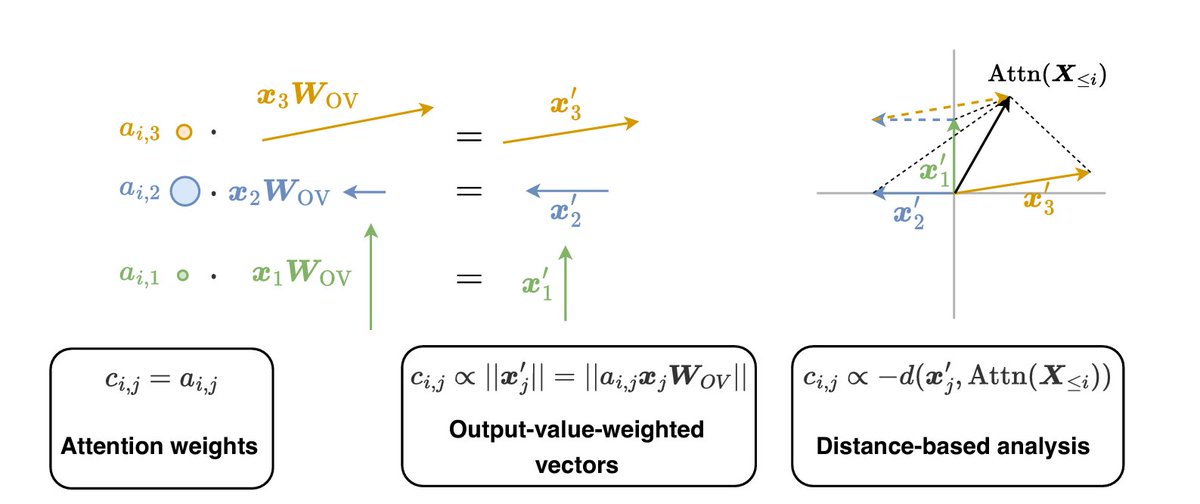

*A Primer on the Inner Workings of Transformer LMs*

by Javier Ferrando, Gabriele Sarti, Arianna Bisazza, Marta R. Costa-jussà

I was waiting for this! Cool comprehensive survey on interpretability methods for LLMs, with a focus on recent techniques (e.g., logit lens).

arxiv.org/abs/2405.00208

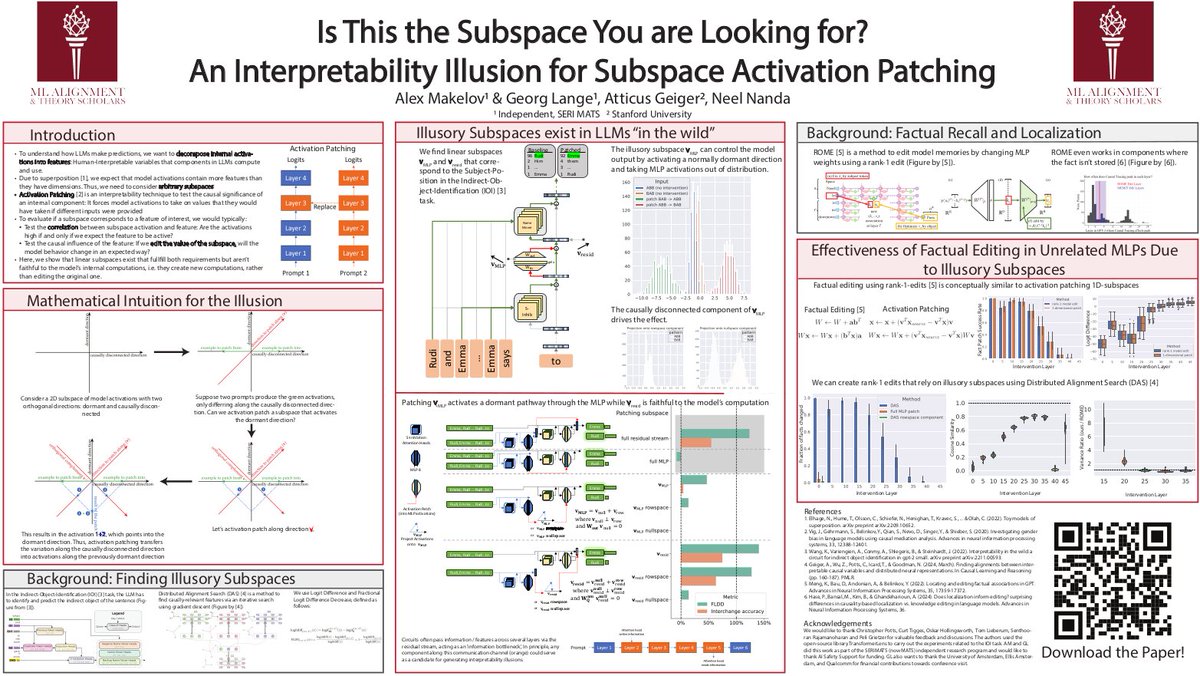

If you're at #ICLR2024, drop by poster #263 to hear about pitfalls of using subspace activation patching for interpretability! Joint work with Georg Lange, Atticus Geiger, Neel Nanda

Focus on interpretability to build trust in AI systems. Making your models transparent and understandable helps stakeholders see how decisions are made, boosting confidence in AI solutions. Clear, explainable AI is essential for widespread adoption. #TrustInAI #ExplainableAI

![fly51fly (@fly51fly) on Twitter photo 2024-05-02 22:14:58 [CL] A Primer on the Inner Workings of Transformer-based Language Models. arxiv.org/abs/2405.00208. The paper provides a concise technical introduction to interpretability techniques used to analyze Transformer-based language models, focusing on the generative decoder-only…](https://pbs.twimg.com/media/GMm0tXbbwAAGj3f.jpg)

![Javier Ferrando (@javifer_96) on Twitter photo 2024-05-03 08:49:36 [1/4] Introducing “A Primer on the Inner Workings of Transformer-based Language Models”, a comprehensive survey on interpretability methods and the findings into the functioning of language models they have led to. ArXiv: arxiv.org/pdf/2405.00208](https://pbs.twimg.com/media/GMpDM85WcAELzoF.jpg)