UW NLP

@uwnlp

The NLP group at the University of Washington.

ID:3716745856

20-09-2015 10:26:25

1.1K Tweets

11.1K Followers

160 Following

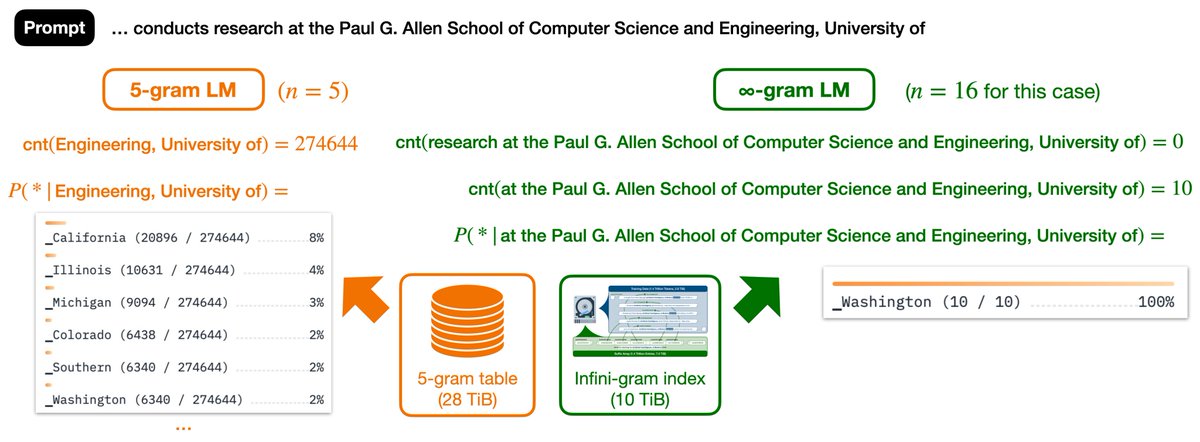

The infini-gram paper is updated with the incredible feedback from the online community 🧡 We added references to papers by Jeff Dean (@🏡), Yee Whye Teh, Ehsan Shareghi, Edward Raff, et al.

arxiv.org/abs/2401.17377

Also happy to share that the infini-gram API has served 30 million queries!

When augmented with retrieval, LMs sometimes overlook retrieved docs and hallucinate 🤖💭

To make LMs trust evidence more and hallucinate less, we introduce Context-Aware Decoding: a decoding algorithm improving LM's focus on input contexts

📖 arxiv.org/pdf/2305.14739…

#NAACL2024
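A minimal sketch of the idea behind Context-Aware Decoding, assuming the contrastive form described in the linked paper: the next-token logits computed with the retrieved context are amplified, and the logits computed without it are subtracted, weighted by a factor `alpha`. The function names, the toy logits, and the default `alpha` are illustrative assumptions, not the authors' code.

```python
import math


def softmax(logits):
    """Convert a list of logits into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def context_aware_distribution(logits_with_context, logits_without_context, alpha=0.5):
    """Contrast context-conditioned logits against context-free logits.

    adjusted = (1 + alpha) * logits_with_context - alpha * logits_without_context
    With alpha = 0 this reduces to ordinary decoding with the context.
    """
    if len(logits_with_context) != len(logits_without_context):
        raise ValueError("both logit vectors must cover the same vocabulary")
    adjusted = [
        (1 + alpha) * with_ctx - alpha * without_ctx
        for with_ctx, without_ctx in zip(logits_with_context, logits_without_context)
    ]
    return softmax(adjusted)


# Toy 3-token vocabulary: the context favors token 0, the model's prior favors token 2.
with_context = [2.0, 1.0, 0.0]
without_context = [0.0, 1.0, 2.0]

plain = softmax(with_context)
contrastive = context_aware_distribution(with_context, without_context, alpha=1.0)
```

In this toy case the token supported by the context ends up with more probability mass under the contrastive distribution than under plain decoding, which is the intended effect.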

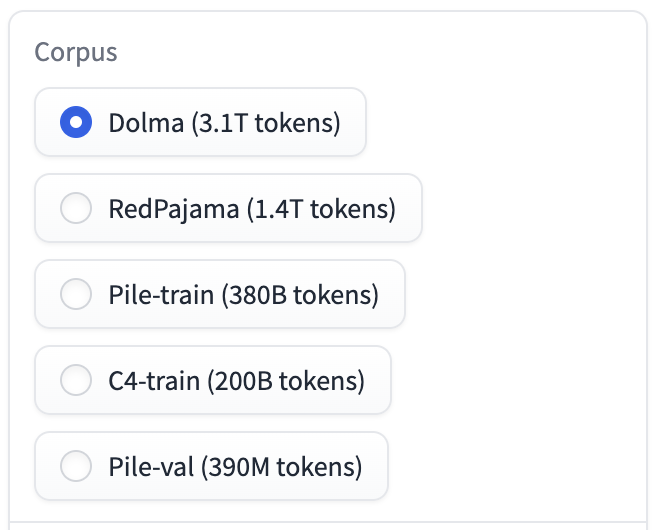

Announcing the infini-gram API 🚀🚀

API Endpoint: api.infini-gram.io

API Documentation: infini-gram.io/api_doc

No API key needed! Simply issue POST requests to the endpoint and receive the results in a fraction of a second.

As we’re in the early stage of rollout, please pic.twitter.com/ckwsxiJPJF
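A hedged sketch of what such a POST request could look like from Python's standard library. The `https://` scheme, the payload field names (`index`, `query_type`, `query`), and the index name are assumptions for illustration; check them against the API documentation linked above before relying on them.

```python
import json
import urllib.request

# Assumed full URL; the announcement only gives the host name.
API_URL = "https://api.infini-gram.io/"


def build_request(payload, url=API_URL):
    """Build (but do not send) a JSON POST request for the endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Illustrative payload; field names and index name are assumptions.
example_payload = {
    "index": "v4_rpj_llama_s4",
    "query_type": "count",
    "query": "natural language processing",
}
example_request = build_request(example_payload)
# result = send(example_request)  # uncomment to issue the query
```

No API key header is set, matching the announcement. Building the request separately from sending it keeps the payload easy to inspect before any traffic goes out.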

![Jiacheng Liu (Gary) (@liujc1998) on Twitter photo 2024-02-29 18:40:55 [Fun w/ infini-gram 📖 #6] Have you ever taken a close look at Llama-2’s vocabulary? 🧐 I used infini-gram to plot the empirical frequency of all tokens in the Llama-2 vocabulary. Here’s what I learned (and more Qs raised): 1. While Llama-2 uses a BPE tokenizer, the tokens are](https://pbs.twimg.com/media/GHhnHOMacAIczas.jpg)

![Jiacheng Liu (Gary) (@liujc1998) on Twitter photo 2024-02-23 18:49:18 [Fun w/ infini-gram 📖 #5] What does RedPajama say about Letter Frequency? Image shows the letter distribution. Seems that there’s a lot less letter “h” in RedPajama than expected (using Wikipedia page as gold reference: en.wikipedia.org/wiki/Letter_fr…). Thoughts? 🤔 (I issued a single](https://pbs.twimg.com/media/GHCvISNasAAHoX0.png)

![Jiacheng Liu (Gary) (@liujc1998) on Twitter photo 2024-02-13 17:05:24 [Fun w/ infini-gram #3] Today we’re tracing down the cause of memorization traps 🪤 Memorization trap is a type of prompt where memorization of common text can elicit undesirable behavior. For example, when the prompt is “Write a sentence about challenging common beliefs: What](https://pbs.twimg.com/media/GGO2vwwbsAAPrXE.jpg)

![Jiacheng Liu (Gary) (@liujc1998) on Twitter photo 2024-02-07 19:25:40 [Fun w/ infini-gram #2] Today we're verifying Benford's Law! Benford's Law states that in real-life numerical datasets, the leading digit should follow a certain distribution (left fig). It has usage in detecting fraud in accounting, election data, and macroeconomic data. The](https://pbs.twimg.com/media/GFwbxNtakAcMlTd.png)
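For reference, the distribution Benford's Law predicts for the leading digit d is log10(1 + 1/d). The sketch below computes that expected distribution and compares it with leading-digit frequencies from a list of positive integers. The helper names and the sample numbers are illustrative assumptions and are not taken from the thread, which derives its counts from infini-gram queries.

```python
import math
from collections import Counter


def benford_expected(digit):
    """Expected probability of a leading digit (1-9) under Benford's Law."""
    if not 1 <= digit <= 9:
        raise ValueError("leading digit must be between 1 and 9")
    return math.log10(1 + 1 / digit)


def leading_digit_frequencies(numbers):
    """Observed leading-digit frequencies for the positive integers in `numbers`."""
    digits = [int(str(n)[0]) for n in numbers if n > 0]
    if not digits:
        raise ValueError("need at least one positive integer")
    counts = Counter(digits)
    return {d: counts[d] / len(digits) for d in range(1, 10)}


expected = {d: benford_expected(d) for d in range(1, 10)}

# Made-up sample, only to show the call shape.
sample = [1, 10, 100, 2, 3]
observed = leading_digit_frequencies(sample)
```

Comparing `observed` against `expected` digit by digit gives a quick check of how closely a set of counts follows the law.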