Yann LeCun

@ylecun

Professor at NYU. Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

ID:48008938

http://yann.lecun.com 17-06-2009 16:05:51

18.9K Tweets

707.9K Followers

716 Following

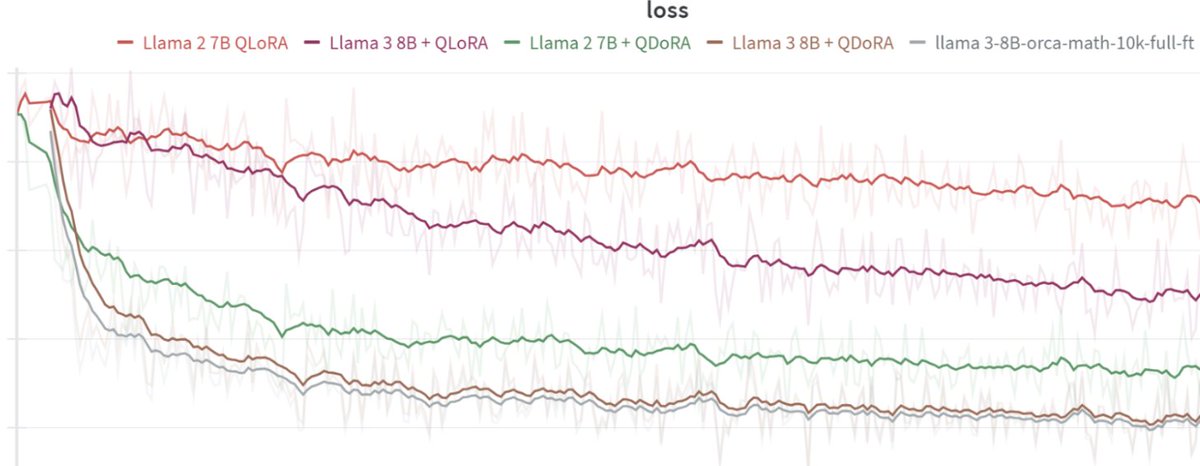

Easily fine-tune AI at Meta's Llama 3 70B! 🦙 I am excited to share a new guide on how to fine-tune Llama 3 70B with PyTorch FSDP, Q-LoRA, and Flash Attention 2 (SDPA) using Hugging Face, built for consumer-size GPUs (4x 24GB). 🚀

Blog: philschmid.de/fsdp-qlora-lla…

The blog covers:

👨💻

Delighted to share our new preprint with Alec Marantz, @DavidPoeppel, and Jean-Rémi King:

biorxiv.org/content/10.110…

'Hierarchical dynamic coding coordinates speech comprehension in the brain'

Summary below 👇

1/8

Is it possible to produce an AI system that is not biased?

As we saw with Google Gemini, it's clear that bias in our AI models will always be a huge problem. So what's the solution?

Here Yann LeCun nails it. It's the same solution we found with the press: freedom and diversity.

Yann LeCun delivered a lecture on Objective-Driven AI.

He began with a reality check: 'Machine Learning falls short compared to humans and animals!'

Here's his insight on constructing AI systems that learn, reason, plan, and prioritize safety:

1/5