Ehsan Shareghi

@EhsanShareghi

Teaching/working on #NLProc. Currently an assistant professor at Monash University and previously a postdoc in University of Cambridge. Opinions are my own.

ID:1365097706704703488

https://eehsan.github.io

26-02-2021 00:33:57

70 Tweets

186 Followers

161 Following

It is a waste of reviewers' efforts if an AC/SAC chooses to write a meta-review/decision that is inconsistent with the reviews/scores. It also nullifies the rebuttals that authors provide. An AC/SAC could allocate better reviewers at the start, or engage in the discussion. Not healthy! #emnlp2023

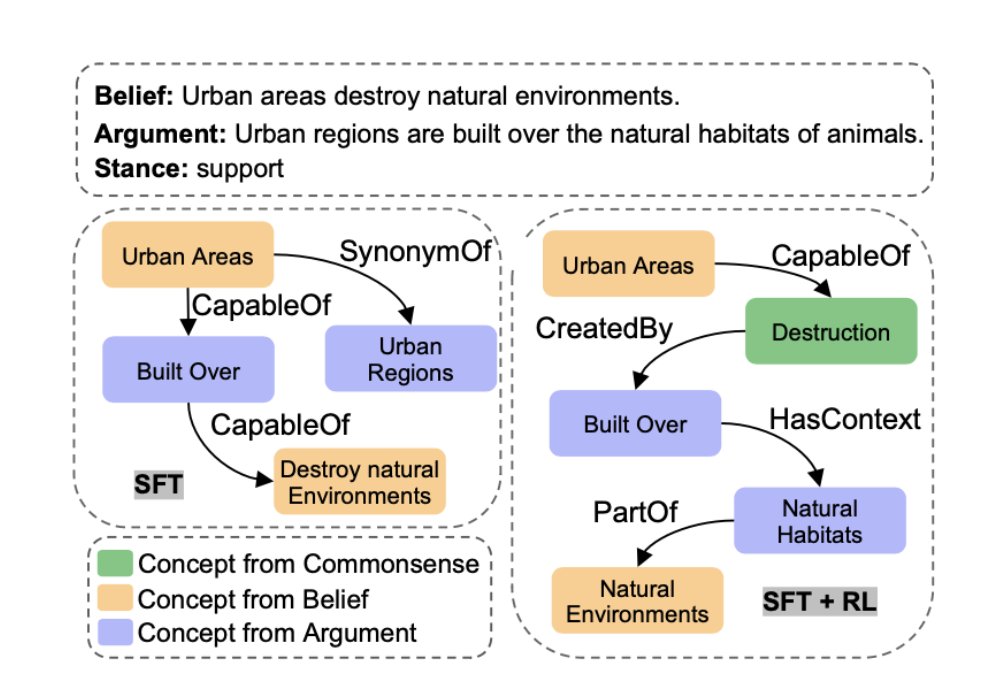

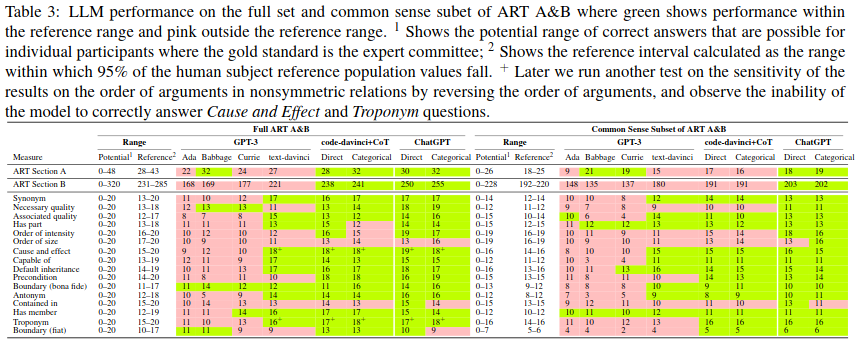

We (with Fangyu Liu and Nigel Collier) reported related observations earlier at CogSci23 (arxiv.org/pdf/2208.11981…): the LLMs we tested do not have a reliable sense of directionality. This is quite problematic for asymmetric relations. Good to see other works in this space.

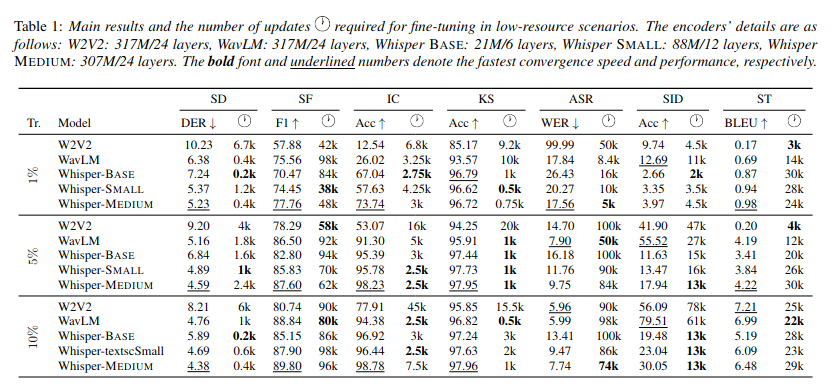

Speech encoders are foundation models too! We investigate (1) their robustness in low-resource conditions, (2) where they potentially capture content and prosodic information, and (3) their representational properties. #INTERSPEECH2023

Paper: arxiv.org/pdf/2305.17733…

This may not age well... I have been thinking about this for a while now... I would like to learn from your thoughts/views on this. #generativeAI

Delighted to announce our paper 'On Reality and the Limits of Language Data' in collaboration with Ehsan Shareghi and Fangyu Liu. We've spent the last 9 months reading and thinking about the limitations of pre-trained language m… lnkd.in/d6RSeVXN lnkd.in/dT-t3n22