Back in the Continual Learning game with Alessio Devoto and Simone Scardapane!

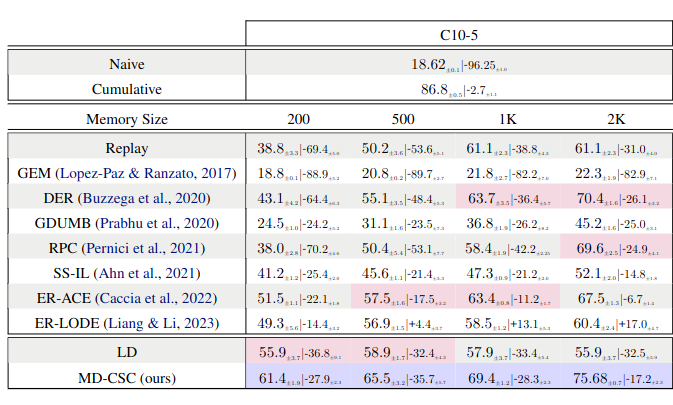

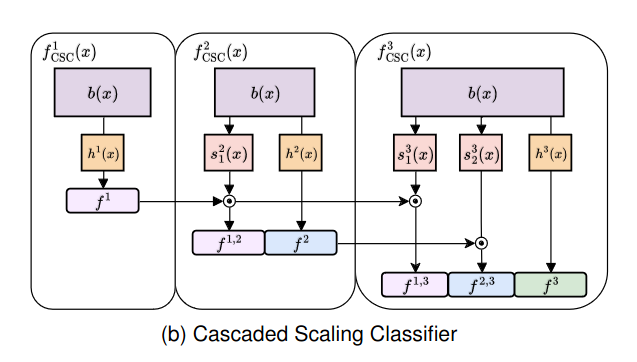

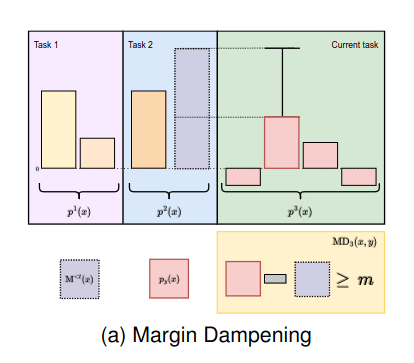

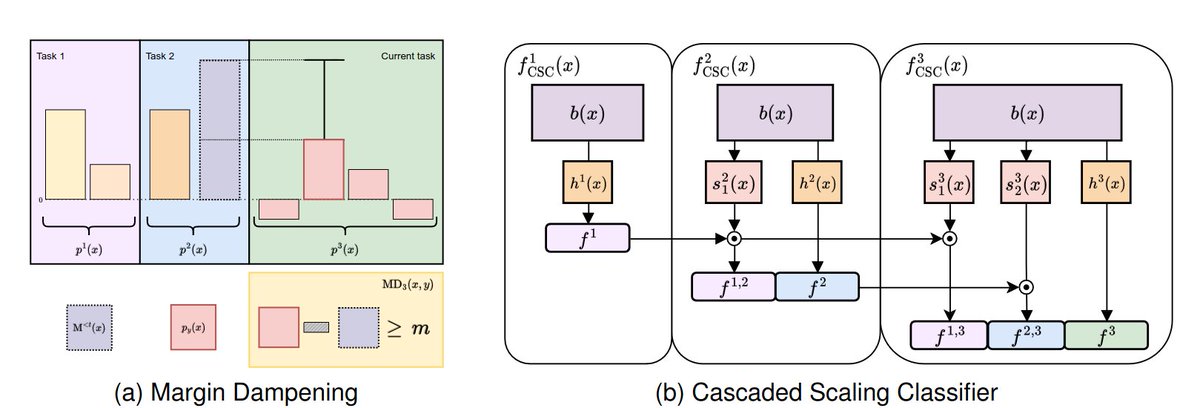

In this paper, we propose a regularization scheme and a novel classifier head. Combining the two will improve the plasticity of your model while actively fighting catastrophic forgetting.

*Conditional computation in NNs: principles and research trends*

by Alessio Devoto, Valerio Marsocci, Jary Pomponi, and Pasquale Minervini

Our latest tutorial on increasing modularity in NNs with conditional computation, covering MoEs, token selection, & early exits.

arxiv.org/abs/2403.07965

My first paper of 2024 is out!

With Matteo Gambella, Manuel Roveri, and Simone Scardapane, we developed NACHOS, the first Neural Architecture Search framework for automatically designing optimal Early Exit NNs.

You can find the paper here: arxiv.org/abs/2401.13330

Ferenc Huszár, ICML Conference: I created a repo containing only the modified paper and then anonymized it.

I will share the latter link, and I hope it will work or the reviewer...

Martin Mundt, Kristian Kersting, Wolfgang Stammer: Very interesting, thanks for posting it. I will try to use it in my next Continual Learning research!

I hope to get interesting results.