Sourabh Medapati

@activelifetribe

Research Engineer @ Google DeepMind

ID:1425636229698134019

12-08-2021 01:52:31

23 Tweets

51 Followers

733 Following

Most exciting paper *ever* from our Google AI lab at Princeton University: Naman Agarwal, danielsuo, Xinyi Chen

arxiv.org/abs/2312.06837

*** Convolutional filters predetermined by the theory, no learning needed! ***

For anyone looking to hire strong engineers!! Bilal did great work with us at Google DeepMind last summer 👌

#AI173 with 216 passengers stuck in Magadan, Russia, since 2 pm IST Tue 6/6 after technical issues. Passengers are staying at makeshift accommodation in a nearby school. Conditions have become miserable: no updates from Air India, bad food. Crew not helping. PMO India Dr. S. Jaishankar (Modi Ka Parivar)

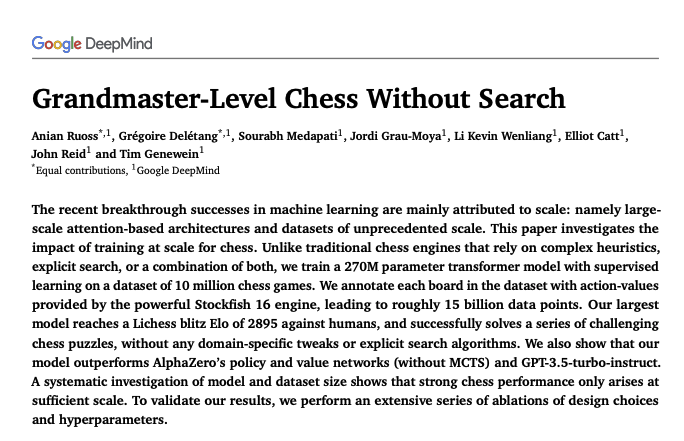

![fly51fly (@fly51fly) on Twitter photo 2024-02-08 03:46:42 [LG] Grandmaster-Level Chess Without Search A Ruoss, G Delétang, S Medapati, J Grau-Moya, L K Wenliang, E Catt, J Reid, T Genewein [Google DeepMind] (2024) arxiv.org/abs/2402.04494 - The authors train transformer models of different sizes (9M, 136M, 270M parameters) on a dataset…](https://pbs.twimg.com/media/GFyQCYlaEAAJS4b.jpg)