Davis Blalock

@davisblalock

Research scientist + first hire @MosaicML. @MIT PhD. I write + retweet technical machine learning content. If you write a thread about your paper, tag me for RT

ID:805547773944889344

http://bit.ly/3OXJbDs 04-12-2016 23:02:10

1.2K Tweets

12.2K Followers

165 Following

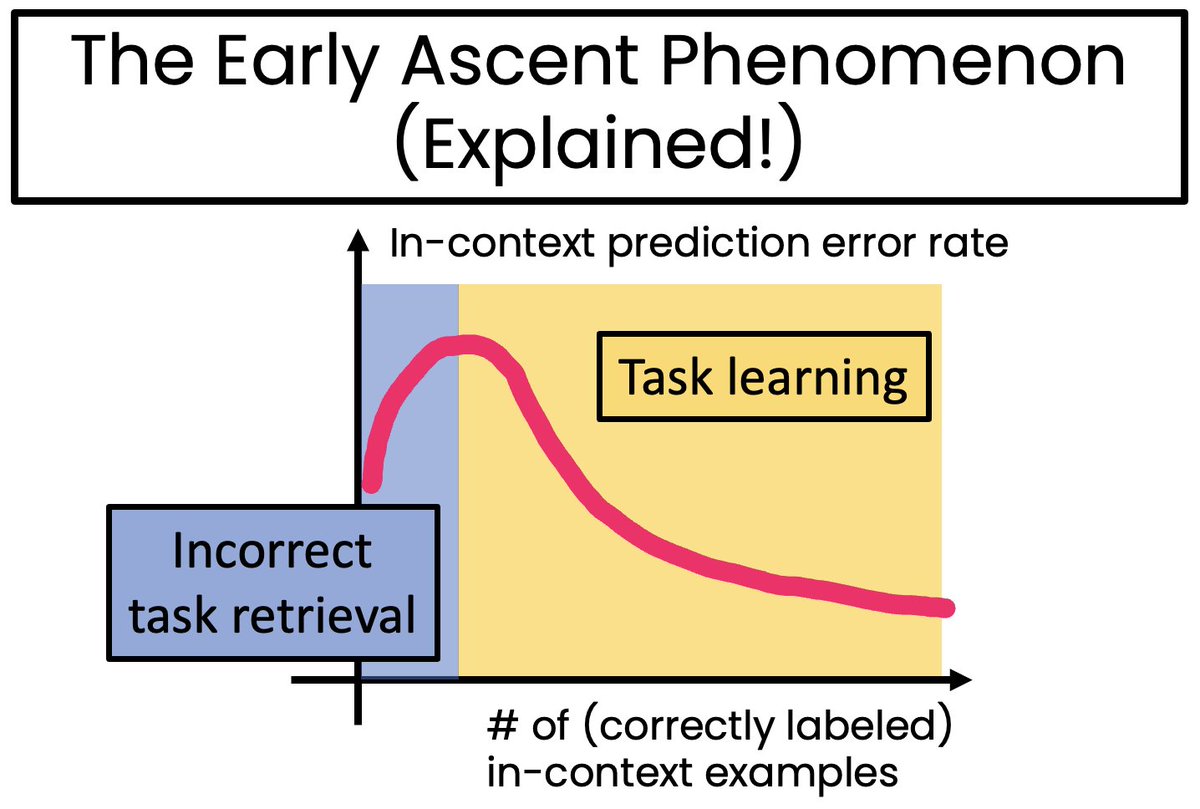

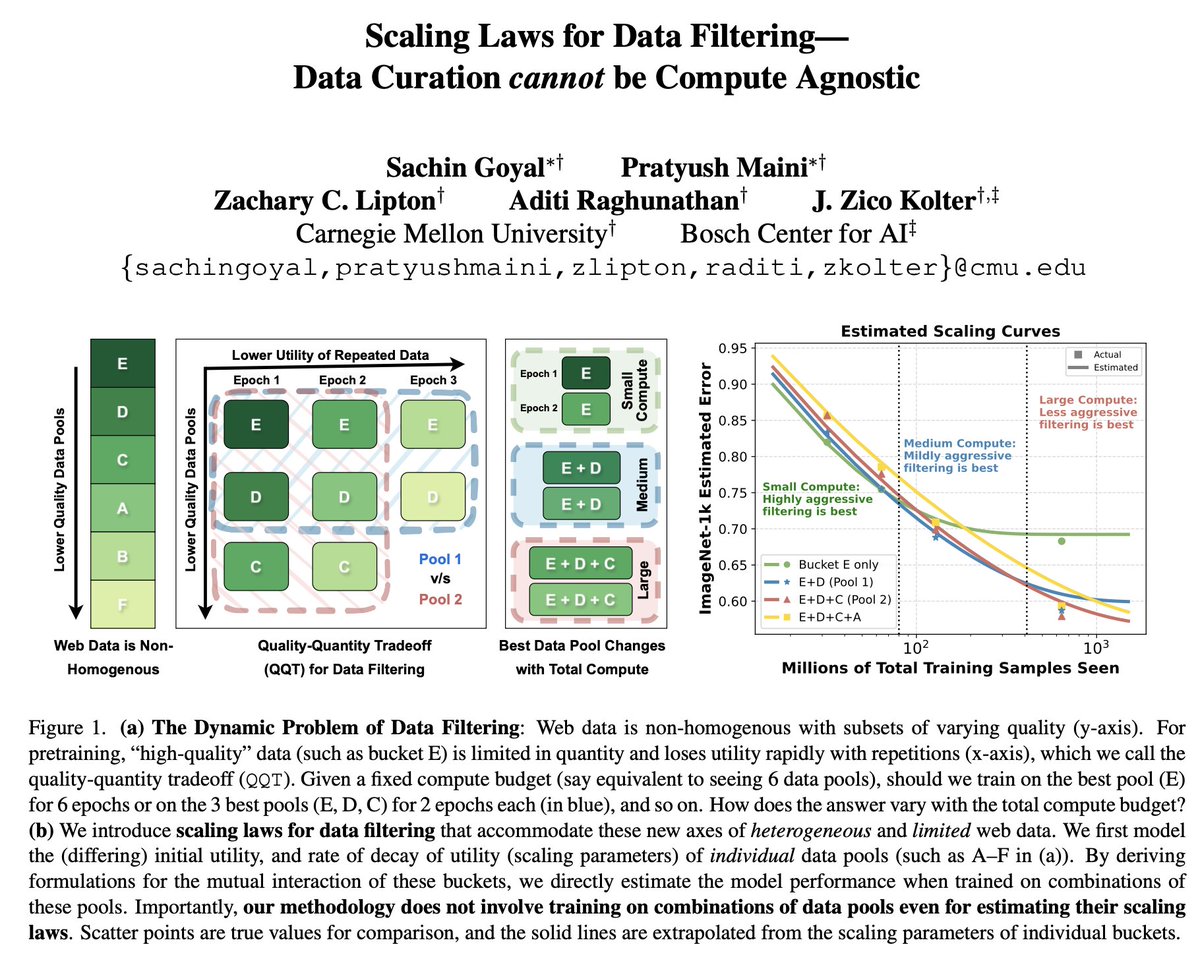

1/ 🥁Scaling Laws for Data Filtering 🥁

TLDR: Data curation *cannot* be compute-agnostic!

In our #CVPR2024 paper, we develop the first scaling laws for heterogeneous & limited web data.

w/ Sachin Goyal, Zachary Lipton, Aditi Raghunathan, Zico Kolter

📝:arxiv.org/abs/2404.07177

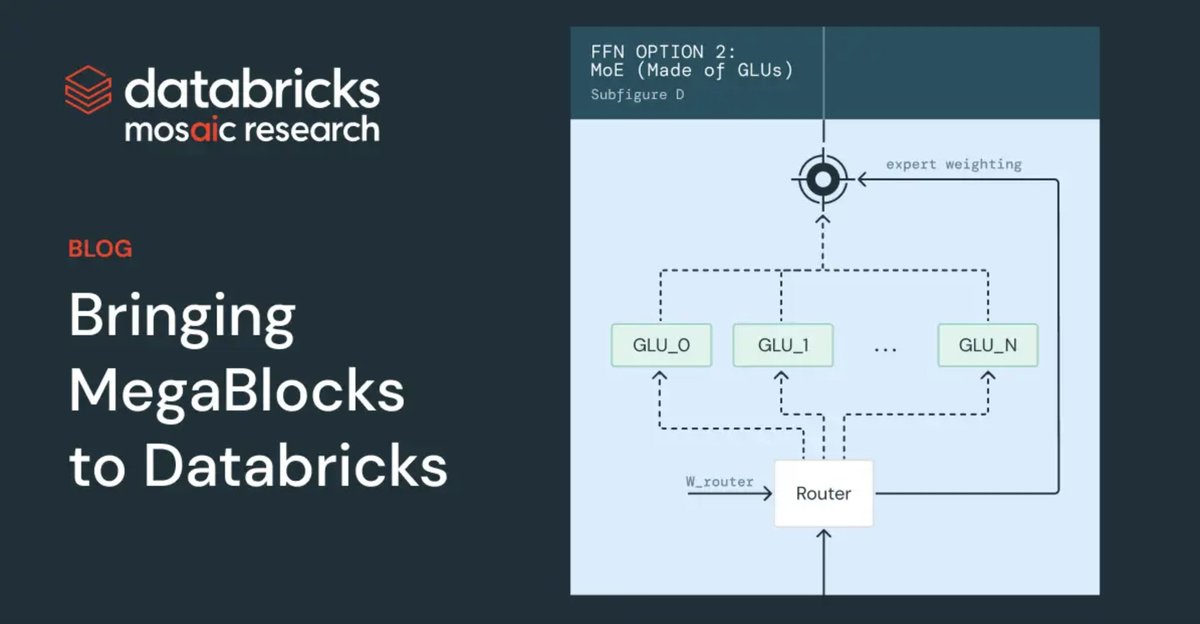

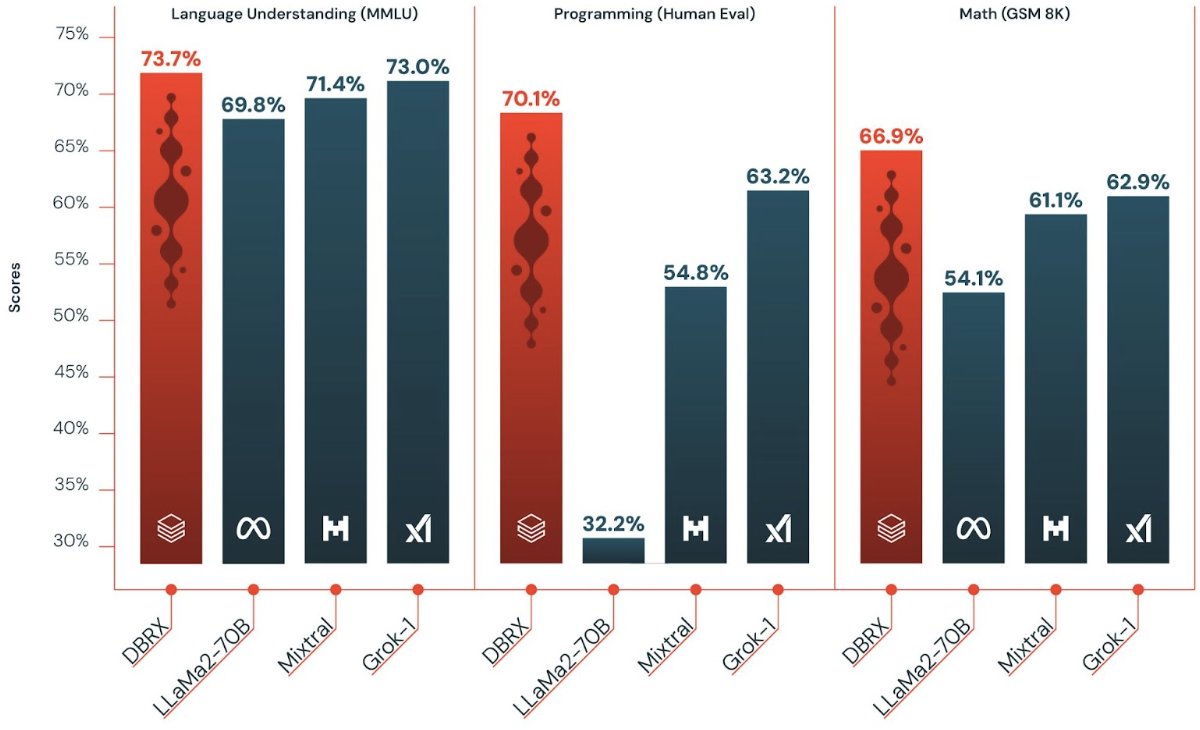

In this MLOps Community episode, MosaicML's Davis Blalock and bandish share war stories and lessons learned from pushing the limits of #LLM training and helping dozens of customers get LLMs into production. 🤝

👀 Watch the full episode: home.mlops.community/public/videos/…

#mlops #llms