Goran Glavaš

@gg42554

Professor for #NLProc @Uni_WUE.

ID:382302464

https://sites.google.com/view/goranglavas

29-09-2011 20:48:47

381 Tweets

994 Followers

261 Following

Great work by Andreea Iana to provide the first truly multilingual news recommendation dataset to date, enabling research on multilingual and cross-lingual news recommendation!

Happy for the opportunity to talk about xMIND, our new #multilingual #news #dataset, joint work w/ Goran Glavaš & Heiko Paulheim, at the Academic Speed Dating event organized by the Mannheim Center for Data Science!

Check out xMIND at: github.com/andreeaiana/xM…

With LLMs, it's easier than ever to do solid NLP for standard languages, BUT can your LLM *reason* in micro- and nano-dialects? Check our DIALECT-COPA shared task for more details. w. Nikola Ljubešić and Ivan Vulić

For research work in #multilingual news #recommendersystems, we are looking for people with skills in the following languages: Guarani, Haitian Creole, Quechua, Somali, Thai, Indonesian, Swahili, Tamil, Georgian, Vietnamese, Japanese. Get in touch with Andreea Iana. Please RT!

Great effort by Andreea Iana! An amazing resource & codebase for anyone working on news recommendation. Easy to train SotA models and to comparatively evaluate and ablate them (true apples-to-apples comparisons)!

We (WüNLP Universität Würzburg #UniWürzburg ) are hiring! Looking for a postdoc to work on LLM alignment.

More info at tinyurl.com/5n7xy9c9 and tinyurl.com/2murbute

Come join our young and energetic team in Würzburg, one of the prettiest and most livable cities in Germany!

Great work by Gregor Geigle! SotA massively multilingual Vision-and-Language models obtained with a few days of training on consumer-grade GPUs.

Thanks to Gregor Geigle, you can now evaluate exactly how multilingual your favorite 'multilingual' V&L model truly is.

Happy to share that I presented our work on Demographic Adaptation at the Insights Workshop at EACL 2023 today.

🙌Thanks to my co-authors:

Anne Lauscher (she/her) Dirk Hovy Goran Glavaš @spponzius

📖Check here: aclanthology.org/2023.findings-…

(1/2)

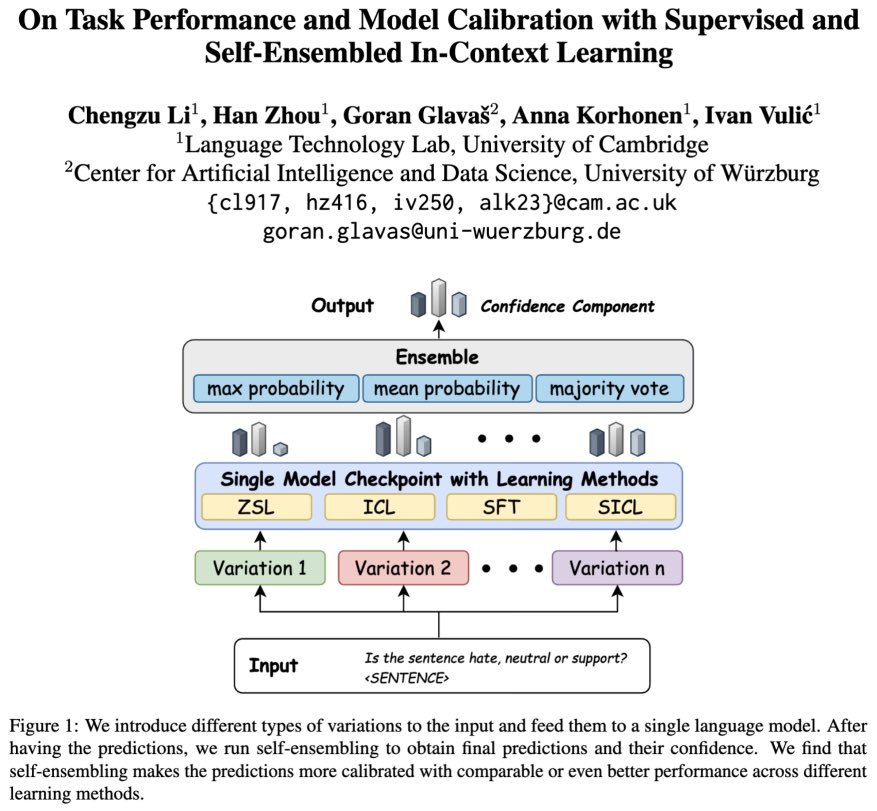

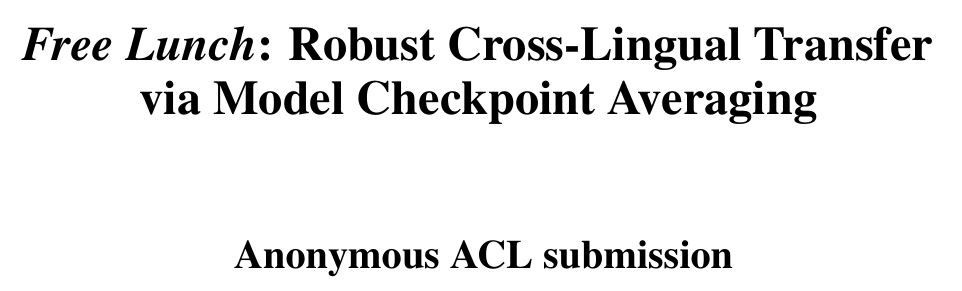

Gladly sharing that our work with Goran Glavaš and Ivan Vulić has been accepted to #acl2023. Takeaways: (1) averaging training checkpoints outperforms conventional model selection for cross-lingual transfer; (2) averaging checkpoints from multiple runs brings further gains if heads are aligned.

Still training #news #recommendersystems with explicit user models? Check our SIGIR 2024 paper 'Simplifying Content-Based Neural News Recommendation: On User Modeling and Training Objectives' to learn about alternatives! arxiv.org/abs/2304.03112 Andreea Iana Goran Glavaš Data and Web Science Group