Hugging Face

@huggingface

The AI community building the future. https://t.co/VkRPD0VKaZ

#BlackLivesMatter #stopasianhate

ID:778764142412984320

https://huggingface.co 22-09-2016 01:13:35

8.8K Tweets

341.4K Followers

188 Following

Earlier today, AI at Meta released Llama 3! 🦙 It marks the next step in open AI development! 🚀 Llama 3 comes with a ~10% improvement compared to Llama 2 and in 2️⃣ sizes, 8B and 70B. 🤯

👉 philschmid.de/sagemaker-llam…

Learn how to run Llama 3 70B at 9ms latency with > 2000…

Llama 3 released! 🚨🔔 AI at Meta just released their best open LLM! 👑🚀 Llama 3 is the next iteration of Llama, with a ~10% relative improvement over its predecessor! 🤯 Llama 3 comes in 2 different sizes, 8B and 70B, with a new extended tokenizer and a commercially permissive license!…

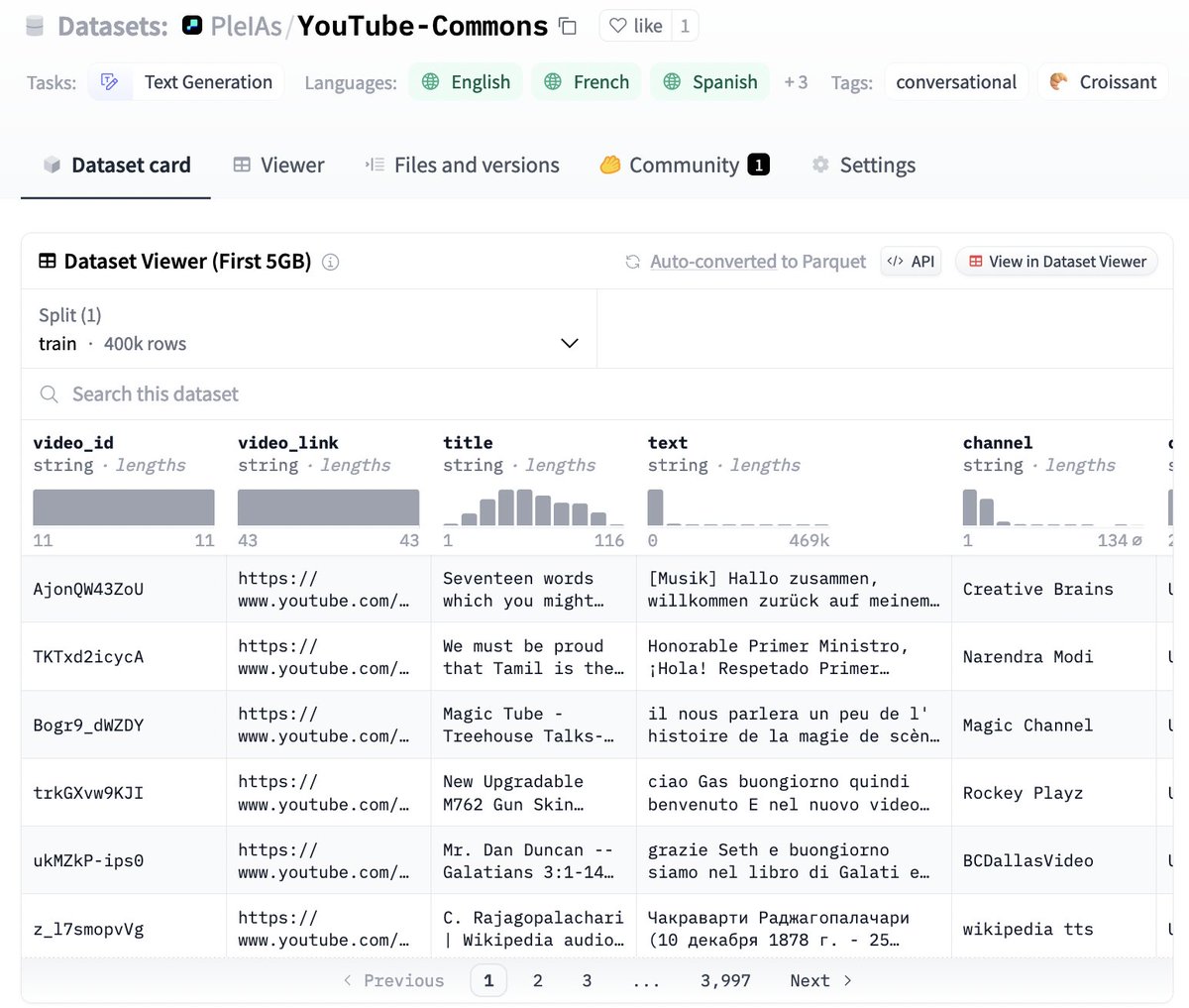

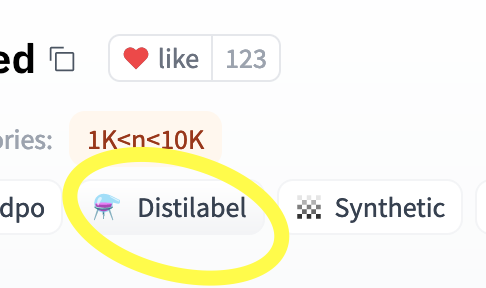

Did you know that Argilla and distilabel datasets have over 6 million downloads on the Hub? 🤯

Now, distilabel datasets will be even easier to identify thanks to the new icon added to the Hugging Face Hub—a nice addition to yesterday's release!

github.com/argilla-io/dis…