John Nay

@johnjnay

CEO @NormativeAI //

Chairman @BklnInvest //

Fellow @CodeXStanford //

More at https://t.co/IpzZqFi04M

ID:4654501943

https://law.stanford.edu/directory/john-nay/ 30-12-2015 14:10:39

1.7K Tweets

14.3K Followers

110 Following

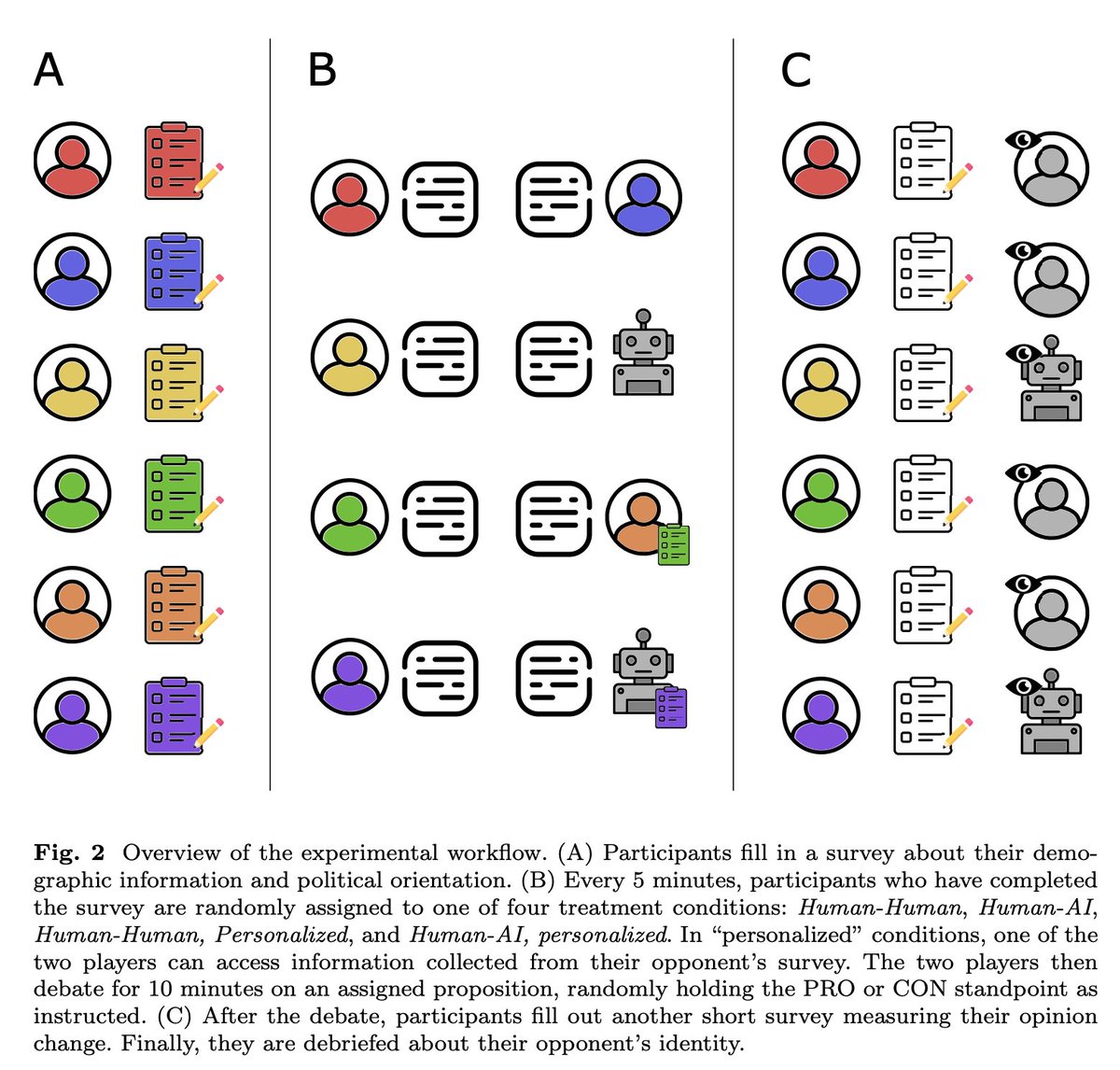

Now releasing content from our NYSE 🏛 AI agents event

Here, Attorney General Matt Platkin discusses the large gap he sees in AI expertise between gov & private sector

He also underlines need for society to come together to decide where human responsibility should apply along complicated Gen AI stack

Glad to have made (small) contributions to the LangChain community/codebase back in the day (years ago, feels like decades in LLM time)

Harrison Chase is building an important community and company

Attorney General Matt Platkin's perspective on AI Agents & Law was insightful.

Thank you for being part of this event!