Pedro Cuenca

@pcuenq

ML Engineer at 🤗 Hugging Face | Co-founder at LateNiteSoft (Camera+). I love AI and photography.

ID:1132965807896563714

27-05-2019 11:04:32

1,6K Tweets

4,8K Followers

769 Following


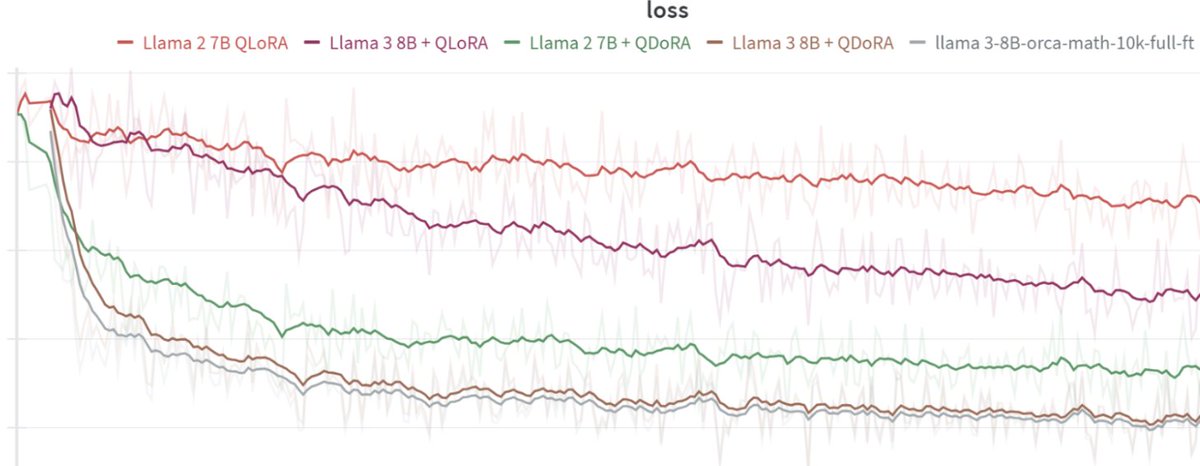

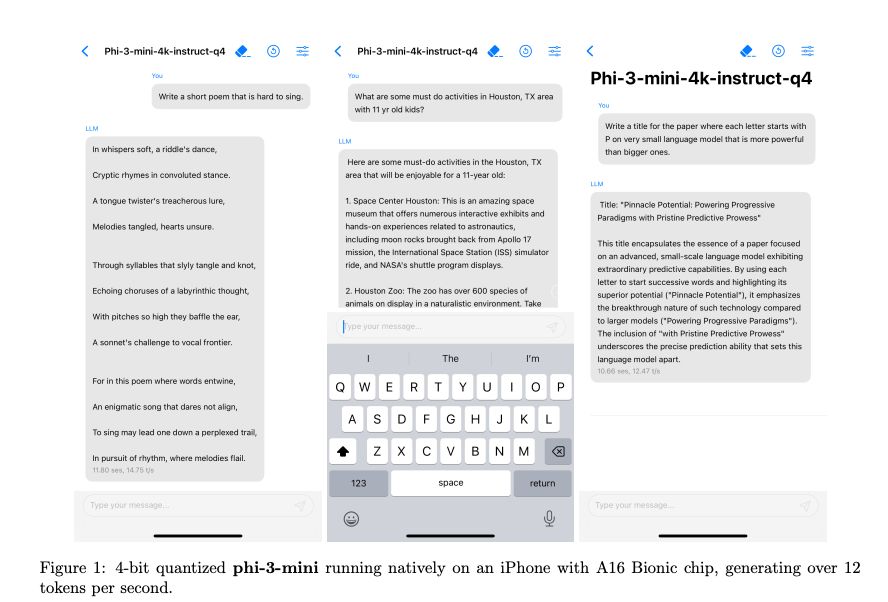

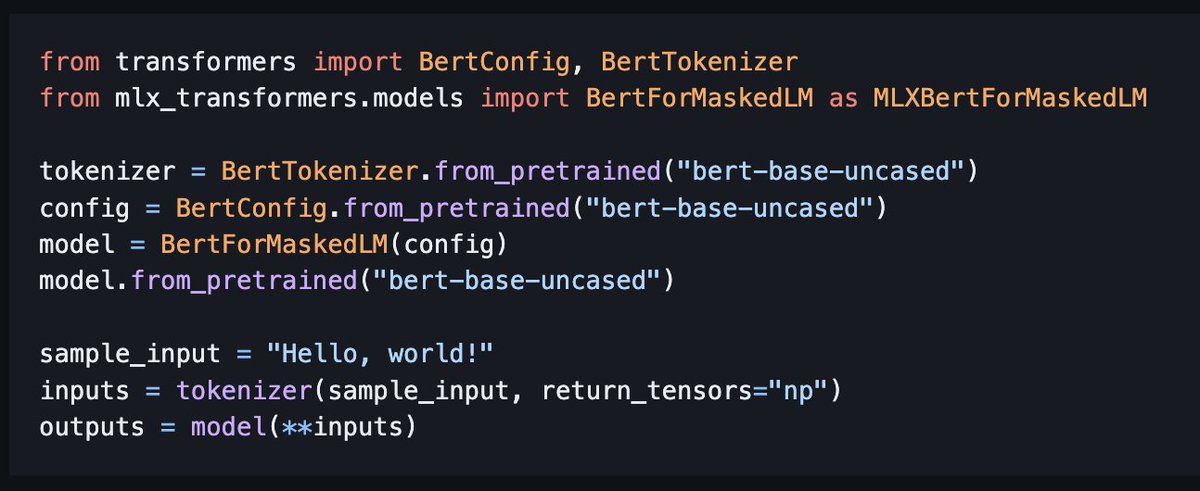

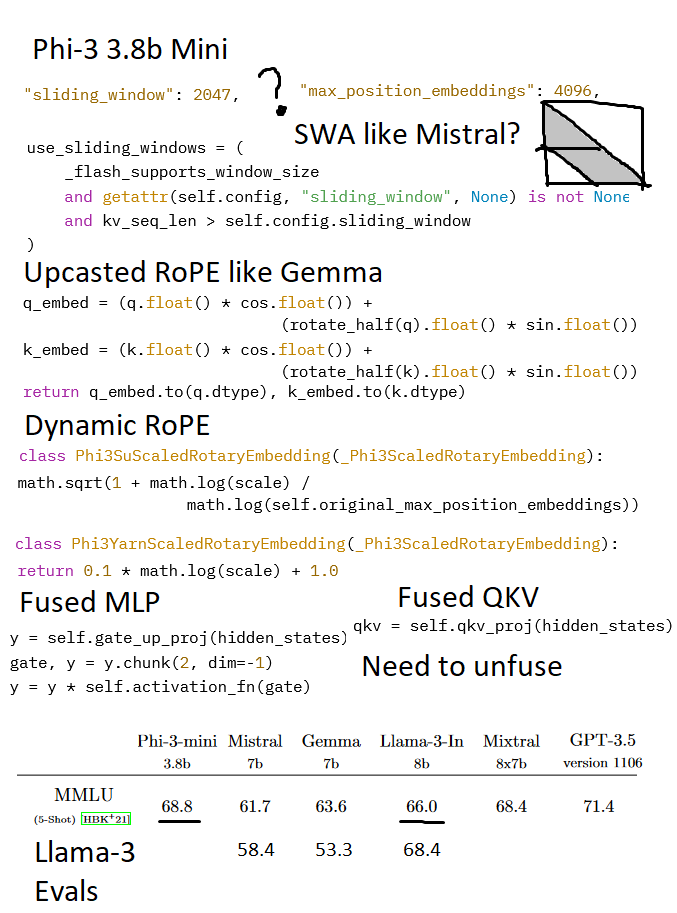

Phi 3 (3.8B) got released! The paper said it was just a Llama arch, but I found some quirks while adding it to Unsloth AI:

1. Sliding window of 2047? Mistral v1 uses 4096. So does Phi mini have SWA? (And why an odd number?) Is the max RoPE position 4096?

2. Upcasted RoPE? Like Gemma?

3. Dynamic
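The sliding-window question is easier to picture as an attention mask. Below is a minimal sketch (not Phi 3's, Mistral's, or Unsloth's actual code) of a causal sliding-window mask. The function name `sliding_window_causal_mask` is hypothetical, and the convention that a window of `w` lets a token see itself plus the `w - 1` previous tokens is an assumption; implementations may count the window differently, which is exactly where an odd value like 2047 becomes worth a second look.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[q][k] == True when query position q may attend to key position k.

    Assumed convention (hypothetical, implementations may differ): a query
    sees itself plus the previous `window - 1` positions, i.e. at most
    `window` keys in total, and never any future position.
    """
    mask = []
    for q in range(seq_len):
        # Causal (k <= q) AND within the window (distance q - k < window).
        row = [(k <= q) and (q - k < window) for k in range(seq_len)]
        mask.append(row)
    return mask


if __name__ == "__main__":
    # Small example: 6 tokens, window of 3. "x" = allowed, "." = masked.
    m = sliding_window_causal_mask(seq_len=6, window=3)
    for row in m:
        print("".join("x" if allowed else "." for allowed in row))
```

With `seq_len=6` and `window=3`, the last three rows each allow exactly three keys, so under this convention a window of 2047 would let each token see 2047 positions (itself included), one fewer than 2048.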

huggingface.co/bartowski/Meta…

I just remade and uploaded my GGUF quants of AI at Meta's Llama 3 8B Instruct to Hugging Face using the latest llama.cpp release, which has official support, so no hacking is needed to make the end token work. Generation is perfect with llama.cpp ./main

Will have

Datasets might be more impactful than models at this point, and this may be the GPT-4 of datasets.

Courtesy of the amazing Guilherme, who trained Falcon, and the Hugging Face team!