Shreya Shankar

@sh_reya

I study ML & AI engineers and try to make their lives a little better. PhD-ing in databases & HCI @Berkeley_EECS @UCBEPIC and MLOps-ing around town. She/they.

ID:2286218053

http://www.sh-reya.com

11-01-2014 06:46:16

4.1K Tweets

39.4K Followers

593 Following


👩‍🏫 Who validates the validators?

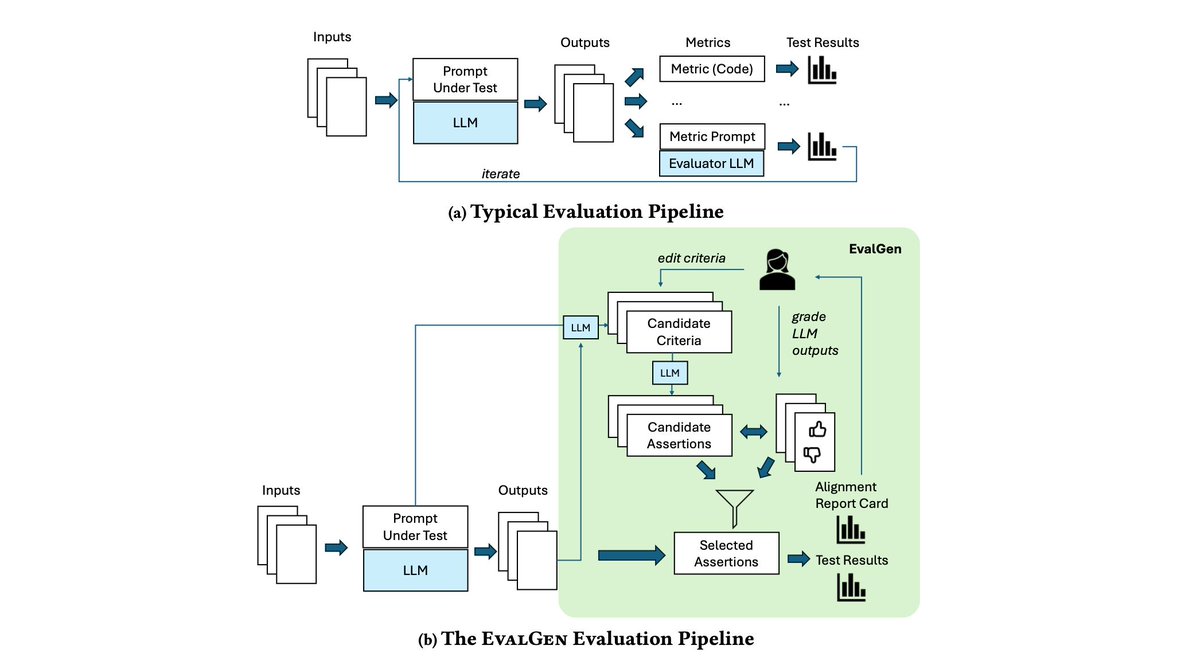

Shreya Shankar et al. introduced EvalGen, a mixed-initiative approach that aligns LLM-generated evaluations of LLM outputs with human preferences, addressing inherent biases and improving reliability.

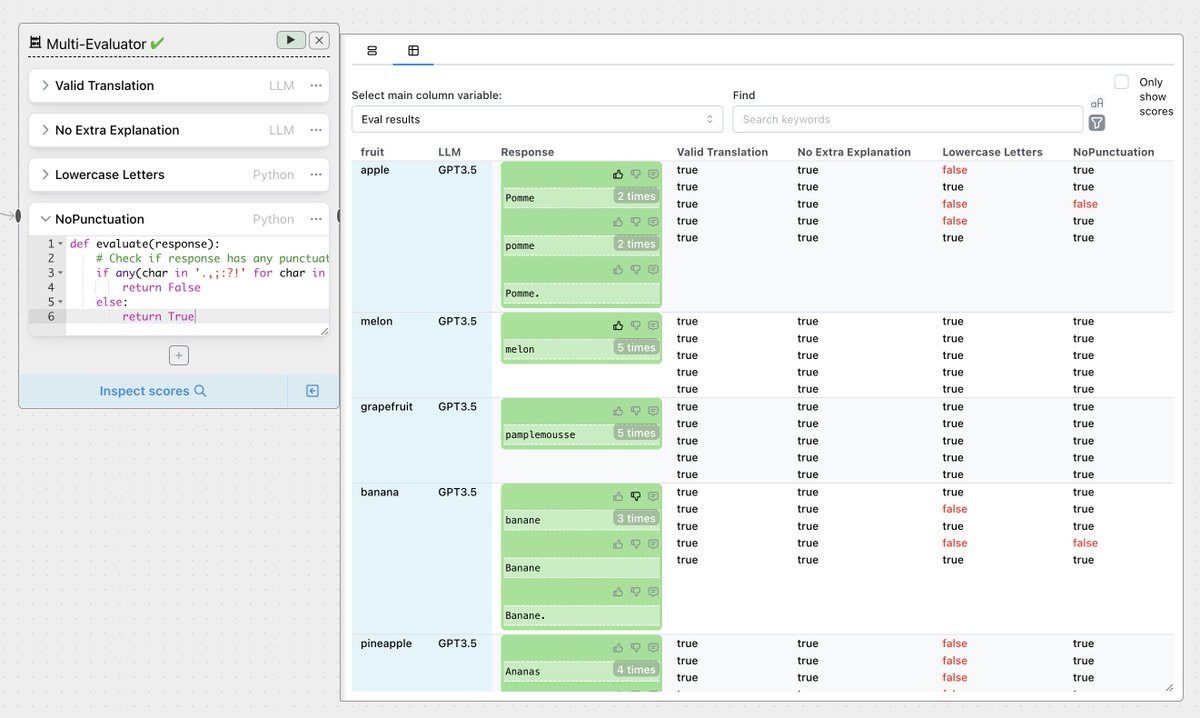

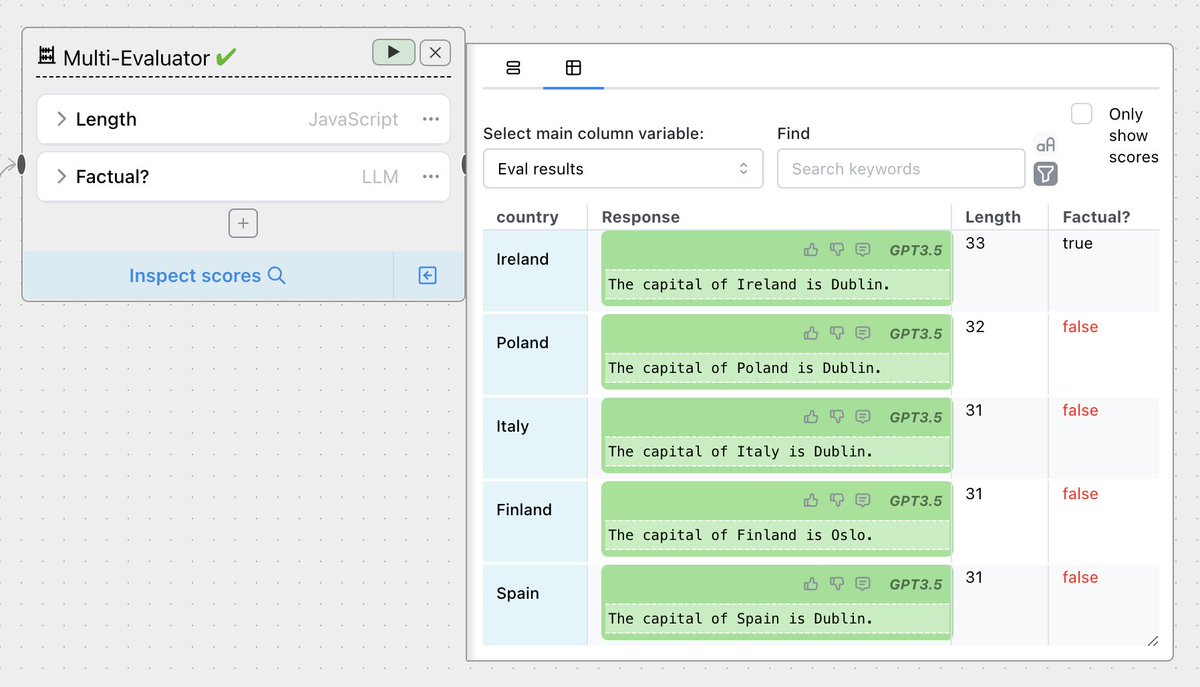

Implementation details:

arxiv.org/abs/2404.12272

Shreya Shankar This is a super interesting workflow, and something we currently do very manually: build evals > test on a sample with human ground-truth (GT) labels > refine the evals. Then, in dev, run the evals routinely and look for increased scores, but still review outputs to apply qualitative adjustments.
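The "test on a sample with human GT labels" step in the reply above can be sketched roughly as follows. This is a minimal illustration, not the method from the paper: the function `alignment_report`, the `AlignmentReport` fields, the toy `non_empty` evaluator, and the sample data are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class AlignmentReport:
    agreement: float           # fraction of outputs where evaluator and human agree
    coverage: float            # fraction of human-rejected outputs the evaluator also rejects
    false_failure_rate: float  # fraction of human-accepted outputs the evaluator rejects


def alignment_report(
    outputs: Sequence[str],
    human_ok: Sequence[bool],
    evaluator: Callable[[str], bool],
) -> AlignmentReport:
    """Compare an automated pass/fail evaluator against human ground-truth labels."""
    if len(outputs) != len(human_ok):
        raise ValueError("outputs and human_ok must have the same length")
    if not outputs:
        raise ValueError("need at least one labeled output")

    predicted_ok = [evaluator(o) for o in outputs]
    pairs = list(zip(predicted_ok, human_ok))

    agree = sum(p == h for p, h in pairs)
    on_bad = [p for p, h in pairs if not h]   # evaluator verdicts on human-rejected outputs
    on_good = [p for p, h in pairs if h]      # evaluator verdicts on human-accepted outputs

    # Guard against empty groups so a one-sided sample does not divide by zero.
    coverage = sum(not p for p in on_bad) / len(on_bad) if on_bad else 0.0
    ffr = sum(not p for p in on_good) / len(on_good) if on_good else 0.0
    return AlignmentReport(agree / len(outputs), coverage, ffr)


# Hypothetical evaluator: passes any non-blank output.
def non_empty(output: str) -> bool:
    return bool(output.strip())


# Hypothetical labeled sample: True means a human judged the output acceptable.
sample_outputs = ["short", "a much longer answer", "", "confidently wrong answer"]
sample_labels = [True, True, False, False]

report = alignment_report(sample_outputs, sample_labels, non_empty)
print(report)
```

A low coverage or a high false failure rate on the labeled sample is the signal to go back and refine the eval before running it routinely in dev.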

since I'm deeply immersed in evals right now (and the process of building them) I got a kick out of this paper from Shreya Shankar, J.D. Zamfirescu, Bjoern Hartmann, Aditya Parameswaran, and Ian Arawjo

it addresses the challenge of time-efficiently coming up with evals that are aligned with practitioners

some…