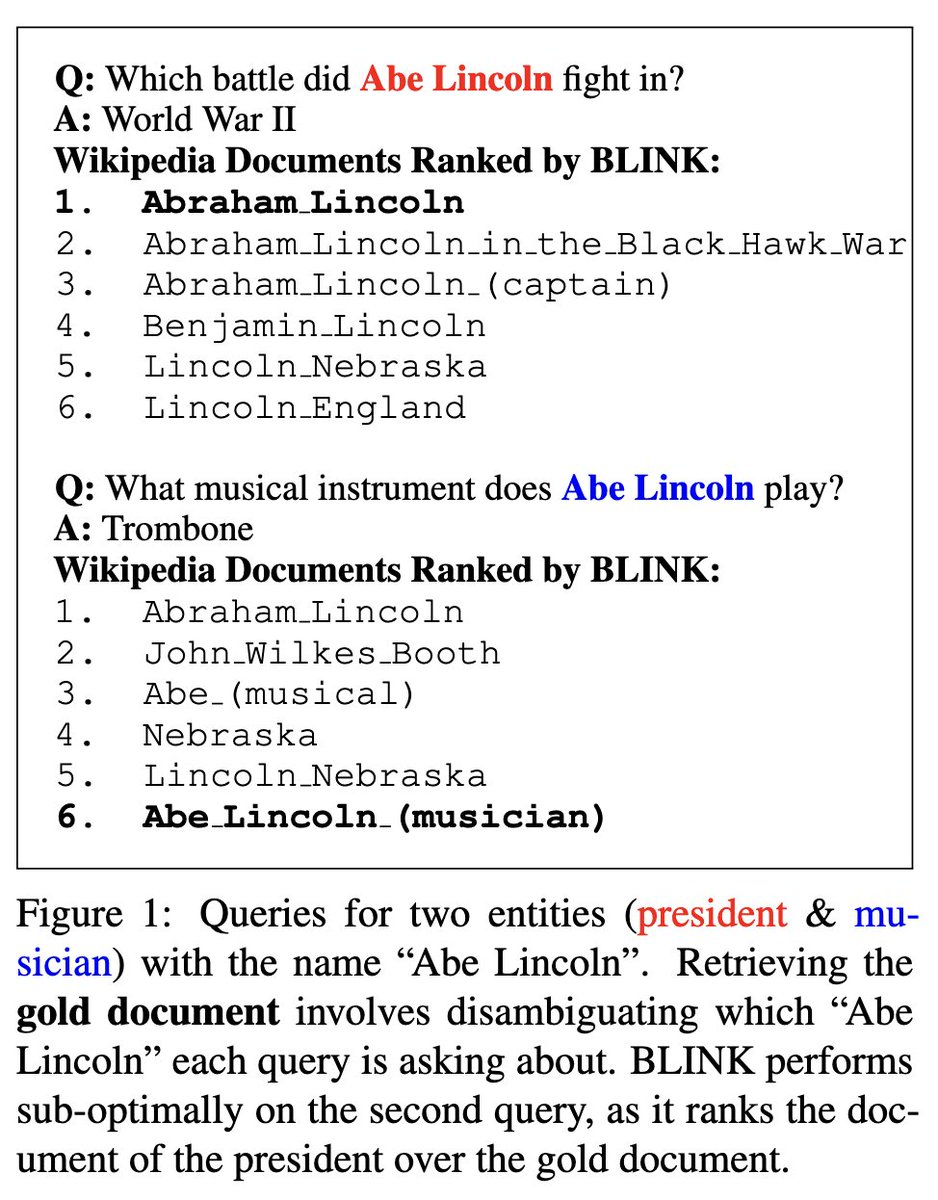

Do you know there's more than one Michael Jordan?🤨 Of course you do. Do retrievers?🤔 Our #ACL2021NLP paper evaluates the entity disambiguation capabilities of retrievers & how popularity bias affects retrieval

Idea: Construct sets of queries for all entities which share a name
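A minimal sketch of that idea, assuming a toy list of (entity id, shared name, query) triples. The ids, queries, and the `build_ambiguous_sets` helper are hypothetical and only illustrate the grouping step, not the paper's actual data pipeline:

```python
from collections import defaultdict

# Hypothetical toy data: (entity_id, surface_name, query). Ids are made up.
ROWS = [
    ("E1", "Michael Jordan", "Which team did Michael Jordan play for?"),
    ("E2", "Michael Jordan", "Which university does Michael Jordan teach at?"),
    ("E3", "Splinter", "What task is Splinter pretrained for?"),
]

def build_ambiguous_sets(rows):
    """Group queries by name, keeping only names shared by 2+ entities."""
    by_name = defaultdict(lambda: defaultdict(list))
    for entity_id, name, query in rows:
        by_name[name][entity_id].append(query)
    # A name is ambiguous when more than one entity carries it.
    return {
        name: dict(entities)
        for name, entities in by_name.items()
        if len(entities) > 1
    }

ambiguous_sets = build_ambiguous_sets(ROWS)
for name, entities in ambiguous_sets.items():
    print(name, "->", sorted(entities))
```

A retriever can then be scored per set: for each query, check whether it returns the document of the intended entity rather than a more popular namesake.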

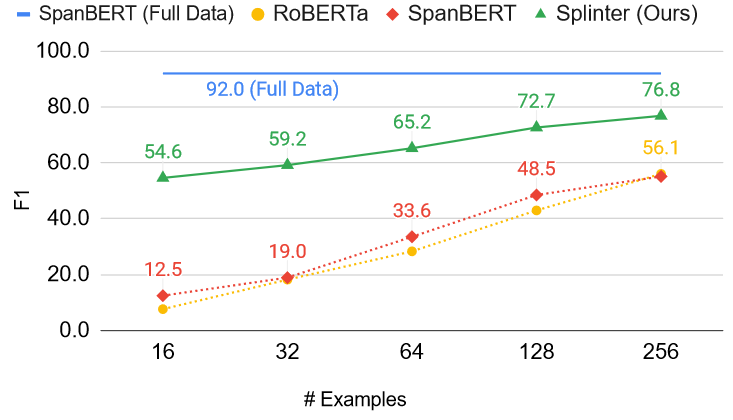

Ever wanted to train a QA model with only 100 examples?

Check out Splinter, our new extractive QA model, trained with a novel self-supervised pretraining task, which excels at the few-shot setting 🔥

at #ACL2021NLP

Paper: arxiv.org/abs/2101.00438

1/N

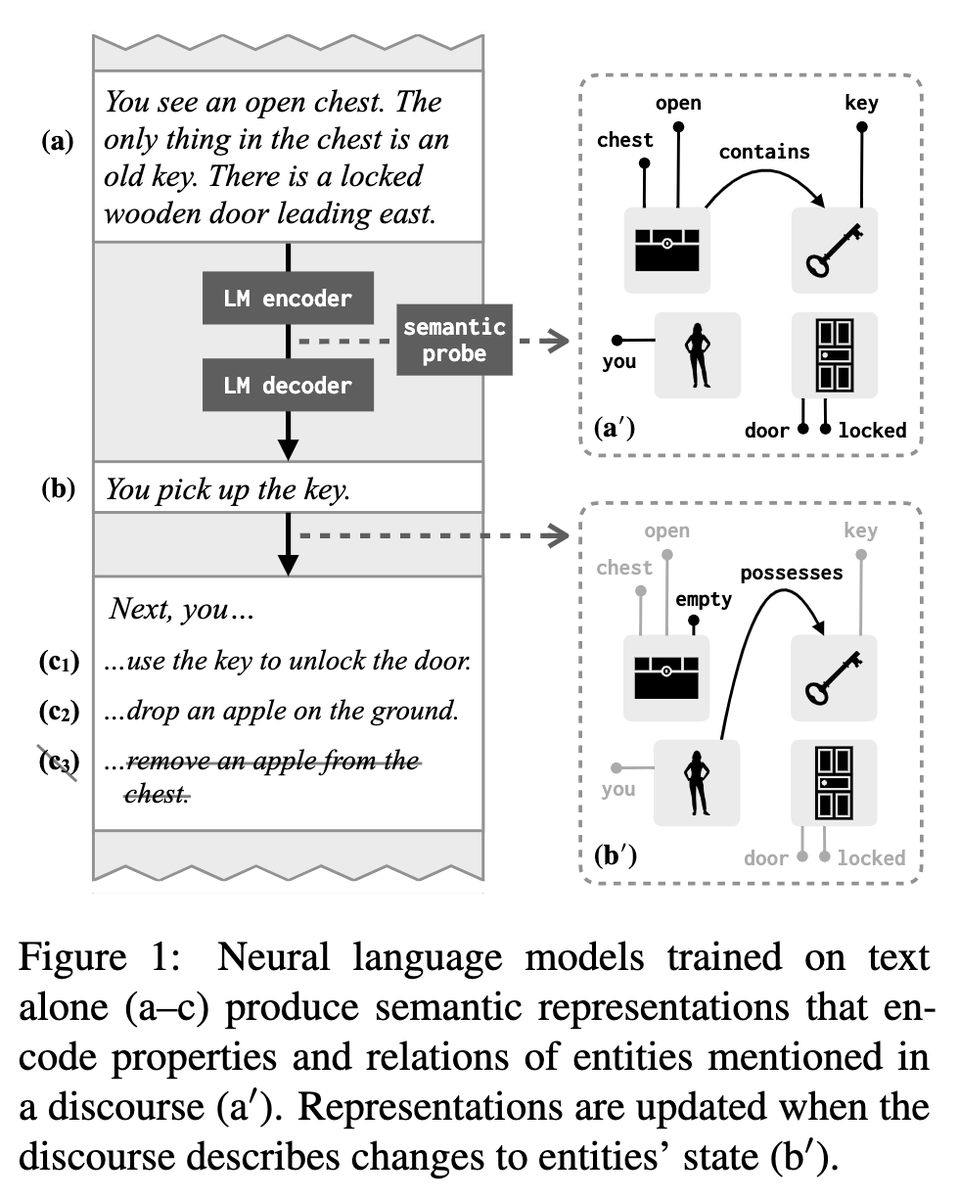

Do neural language models (trained on text alone!) construct representations of meaning? In a new #ACL2021NLP paper, we find that LM representations implicitly model *entities and situations* as they evolve through a discourse. 1/

arxiv.org/abs/2106.00737

Excited to introduce our paper “Learning Contextualized Knowledge Structures for Commonsense Reasoning”, which will appear in Findings of #ACL2021NLP!

Paper: arxiv.org/abs/2010.12873

Code: github.com/INK-USC/HGN

🧵[1/n]

![Jun Yan (@jun_yannn) on Twitter, 2021-05-24](https://pbs.twimg.com/media/E2LMBABVcAAPZle.png)

We’ll be presenting “Learning to Generate Task-Specific Adapters from Task Description” at Poster 2G (5-7pm PT). Please come and chat with us! 😊 #acl2021nlp

TL;DR: We train a hypernetwork that takes in a task description and generates task-specific adapter parameters.
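A rough sketch of what such a hypernetwork's forward pass could look like, assuming a single linear hypernetwork and a bottleneck adapter with a residual connection. All names, sizes, and the random initialisation are assumptions for illustration; training is omitted, and the paper's actual architecture may differ:

```python
import random

def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def make_hypernetwork(task_dim, hidden, bottleneck, seed=0):
    """Random linear map from a task embedding to flat adapter weights."""
    rng = random.Random(seed)
    n_params = 2 * hidden * bottleneck  # down- and up-projection
    return [[rng.gauss(0.0, 0.02) for _ in range(task_dim)]
            for _ in range(n_params)]

def generate_adapter(hyper, task_embedding, hidden, bottleneck):
    """Generate adapter matrices from a task-description embedding."""
    flat = matvec(hyper, task_embedding)
    split = hidden * bottleneck
    # down: bottleneck x hidden, up: hidden x bottleneck
    down = [flat[i * hidden:(i + 1) * hidden] for i in range(bottleneck)]
    up_flat = flat[split:]
    up = [up_flat[i * bottleneck:(i + 1) * bottleneck] for i in range(hidden)]
    return down, up

def apply_adapter(h, down, up):
    """Residual bottleneck adapter: h + up(relu(down(h)))."""
    z = [max(0.0, x) for x in matvec(down, h)]
    return [hi + di for hi, di in zip(h, matvec(up, z))]

HIDDEN, BOTTLENECK, TASK_DIM = 6, 2, 4
hyper = make_hypernetwork(TASK_DIM, HIDDEN, BOTTLENECK)
# Stand-ins for encoded task descriptions (hypothetical embeddings).
task_a = [1.0, 0.0, 0.0, 0.0]
task_b = [0.0, 1.0, 0.0, 0.0]
down_a, up_a = generate_adapter(hyper, task_a, HIDDEN, BOTTLENECK)
down_b, up_b = generate_adapter(hyper, task_b, HIDDEN, BOTTLENECK)
out_a = apply_adapter([0.5] * HIDDEN, down_a, up_a)
print(len(out_a), down_a != down_b)
```

The point of the design is that only the hypernetwork holds trainable parameters; each new task description yields its own adapter without task-specific fine-tuning.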

Happy to introduce my internship work at Amazon Science: “AdaTag: Multi-Attribute Value Extraction from Product Profiles with Adaptive Decoding”, which got accepted to #ACL2021NLP!

Paper: arxiv.org/abs/2106.02318

🧵[1/n]

![Jun Yan (@jun_yannn) on Twitter, 2021-06-15](https://pbs.twimg.com/media/E38IDB5VcAAtzgW.jpg)

Have you ever wanted to get word token distributions, but had to throw away OOV items because simply counting frequencies can’t handle them? This paper is for you!

Just in time for the party, here is a summary of our #ACL2021NLP findings on Modeling the Unigram Distribution :)
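To make the OOV problem concrete, here is a small sketch of plain frequency counting on a made-up corpus. It only illustrates the limitation the tweet describes and is not the model proposed in the paper:

```python
from collections import Counter

def mle_unigram(tokens):
    """Maximum-likelihood unigram distribution from raw counts."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

# Toy training corpus (an assumption for illustration).
train = "the cat sat on the mat".split()
probs = mle_unigram(train)

print(probs["the"])           # seen twice out of six tokens
print(probs.get("dog", 0.0))  # unseen word type receives no probability mass
```

Because every unseen word type ends up with zero probability, a pure count-based estimate cannot score out-of-vocabulary items at all.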

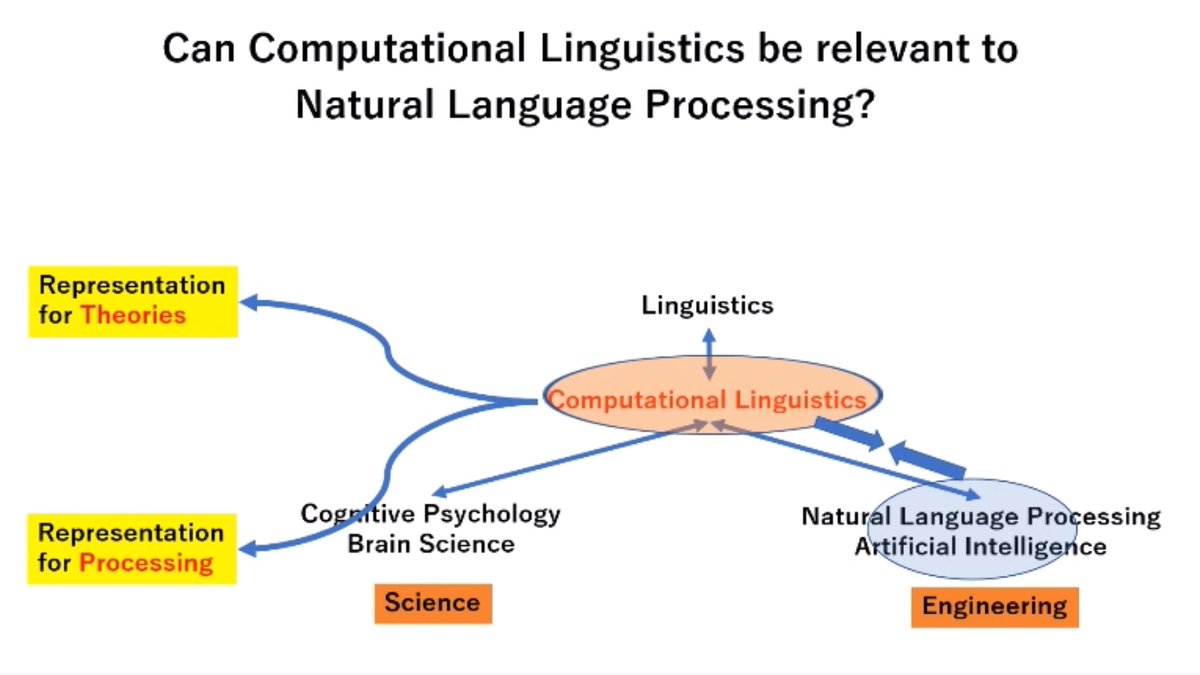

We often ask: what is the difference between Computational Linguistics and Natural Language Processing?

A wonderful perspective from Prof. Junichi Tsujii, #ACL2021NLP Lifetime Achievement Award winner, with the message:

It is time for us to reconnect NLP and CL!

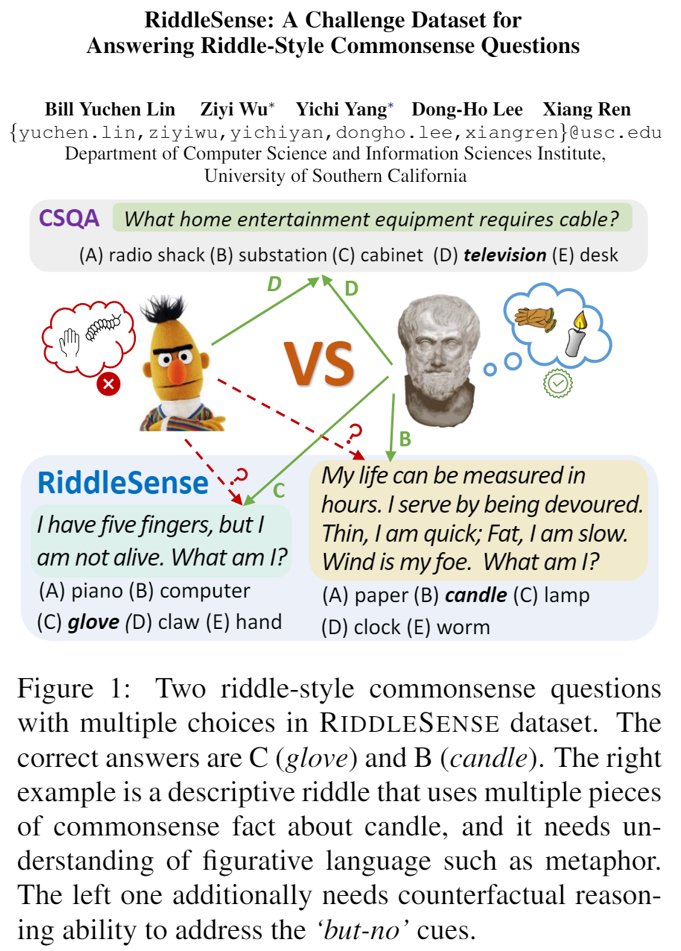

Wanna have some fun with your NLU models? Try out RiddleSense, our new QA dataset in #ACL2021NLP. It consists of multiple-choice questions featuring both linguistic creativity and common-sense knowledge.

Project website: inklab.usc.edu/RiddleSense/ Paper: arxiv.org/abs/2101.00376 [1/5]

![Bill Yuchen Lin 🤖 (@billyuchenlin) on Twitter, 2021-07-06](https://pbs.twimg.com/media/E5odW6_VgAQmnzR.jpg)

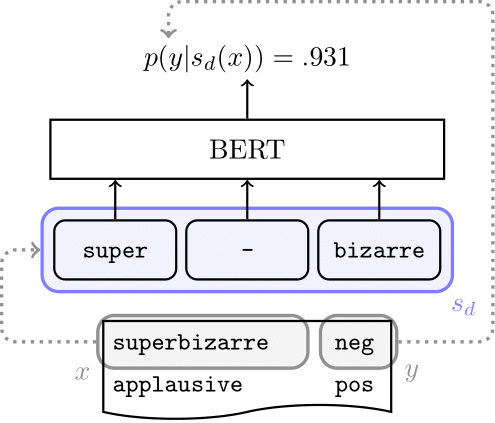

BERT's tokenizations are sometimes morphologically superb-iza-rre, but does that impact BERT's semantic representations of complex words? Our upcoming #ACL2021NLP paper (w/ Janet Pierrehumbert & Hinrich Schütze) takes a look at this question. arxiv.org/pdf/2101.00403… /1

Thank you everyone for attending my talk on 'Including Signed Languages' at the #ACL2021NLP Best Paper Session! I am so grateful for all the enthusiasm 😊

We will upload ASL interpretations soon! In the meantime, here's my pre-recording with EN subtitles: youtu.be/AYEIcOsUyWs

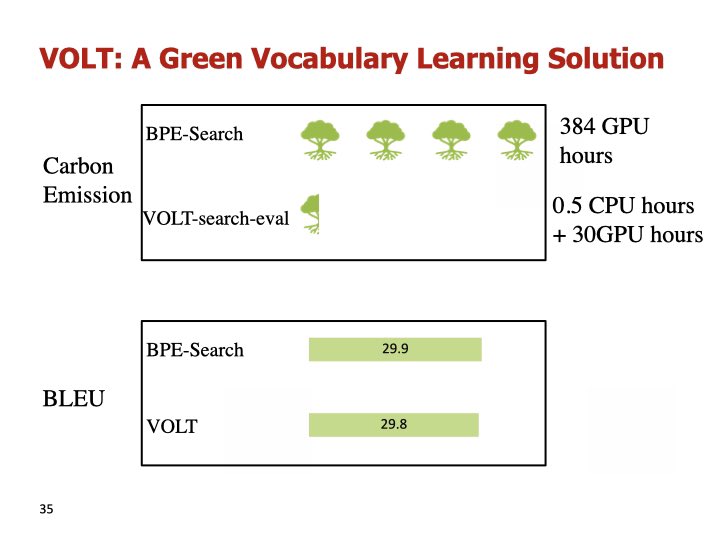

Honored and flattered to receive the best paper award of #ACL2021NLP. Thanks to my wonderful co-authors Jingjing, Hao, Chun and Zaixiang, to the extraordinary MLNLC team at ByteDance AI Lab, and to Zhenyuan, Weiying, Hang, and the ACL committee for their support!

to Olympic sprinter #SuBingtian

Is there a connection between Shapley Values and attention-based explanations in NLP?

Yes! Our #ACL2021NLP paper proves that **attention flows** can be Shapley Value explanations, but regular attention and leave-one-out cannot.

arxiv.org/abs/2105.14652

w/ Dan Jurafsky (Stanford NLP Group)
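For readers new to Shapley Values, a minimal sketch of the exact computation on a three-token toy example. The value function `toy_value` is invented for illustration and has nothing to do with attention flows or the paper's proof; it only shows how each token's average marginal contribution is obtained:

```python
from itertools import permutations

def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orders) for p, t in totals.items()}

def toy_value(coalition):
    """Hypothetical model score for a subset of input tokens."""
    score = 0.0
    if "not" in coalition:
        score += 1.0
    if "good" in coalition:
        score += 2.0
    if "not" in coalition and "good" in coalition:
        score -= 4.0  # interaction: negation flips the sentiment
    return score

tokens = ["not", "good", "movie"]
phi = shapley_values(tokens, toy_value)
print(phi)
```

The attributions sum to the score of the full input minus the score of the empty input (the efficiency axiom), which is one of the properties an explanation must satisfy to count as a Shapley Value explanation.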

Introducing our #acl2021nlp work on evaluating and improving multi-lingual language models (ML-LMs) for commonsense reasoning (CSR). We present resources on probing & benchmarking, and a method for improving ML-LMs. [1/6]

Paper, code, data, etc.: inklab.usc.edu/XCSR/

![Bill Yuchen Lin 🤖 (@billyuchenlin) on Twitter, 2021-06-22](https://pbs.twimg.com/media/E4gQYZ7UUAMg6Kp.jpg)

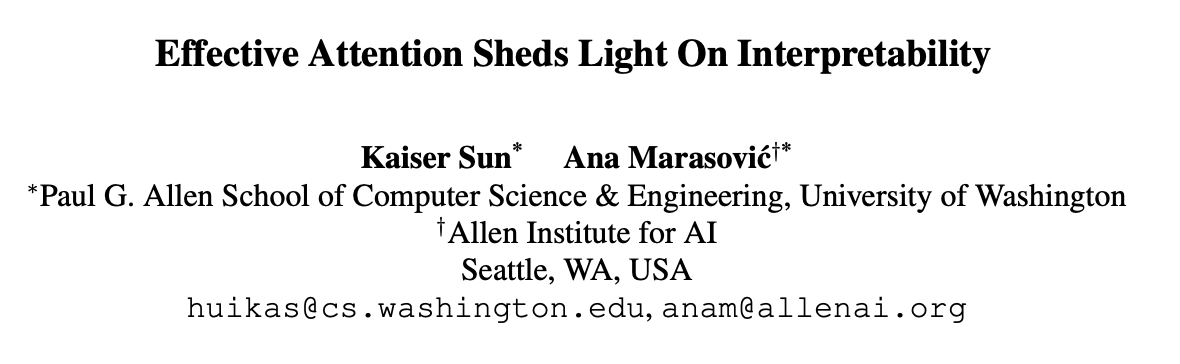

Our paper “Effective Attention Sheds Light On Interpretability” (w/ Ana Marasović) was accepted into Findings of ACL2021 #ACL2021NLP #NLProc

Pre-print available at: arxiv.org/abs/2105.08855

Thread⬇️

Our #ACL2021NLP paper 'Lower Perplexity is Not Always Human-Like' is now at arxiv.org/abs/2106.01229

The perplexity of language models is getting lower. Does this mean that they better simulate human incremental language processing? The answer is in our title.

(Tohoku NLP Group (migrated to @tohoku_nlp))

Word meaning varies across linguistic and extralinguistic contexts, but so far there has been no attempt in #NLProc to model both types of variation jointly. Our #ACL2021NLP paper fills this gap by introducing dynamic contextualized word embeddings. arxiv.org/pdf/2010.12684… [1/4]

![Valentin Hofmann (@vjhofmann) on Twitter, 2021-06-30](https://pbs.twimg.com/media/E5IU__sXMAYwMIV.png)