📢📢 Looking forward to presenting our #CVPR2022 paper Panoptic Neural Fields at poster stand 218a this morning!

🌐: abhijitkundu.info/projects/pnf/

📜: arxiv.org/abs/2205.04334

🎥: youtu.be/3aXHxuQ-xBM

With Kyle, Xiaoqi, Alireza Fathi, Caroline, Leonidas Guibas, Andrea Tagliasacchi 🇨🇦🏔️, Frank Dellaert, and Tom.

(1/7) Our #CVPR2022 paper “How Much More Data Do I Need? Estimating Requirements For Downstream Tasks” explores data collection practices!

w/ James Lucas, David Acuna, Daiqing Li, Jonah Philion, Jose M. Alvarez, Zhiding Yu, Sanja Fidler, Marc T. Law

Webpage: nv-tlabs.github.io/estimatingrequ…

Excited to present our work on head avatars at #CVPR2022. Stop by our poster 258 on Friday morning, 10:00 - 12:30, and let's have a chat!

Malte Prinzler, Titus Leistner, Carsten Rother, Matthias Niessner, Justus Thies

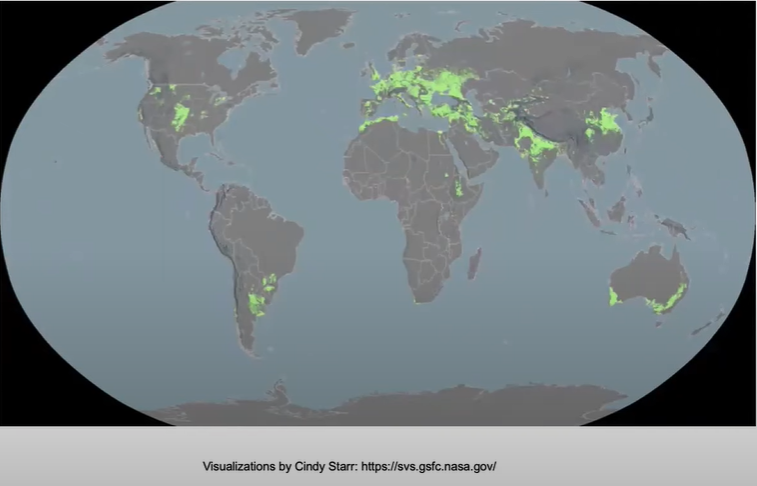

Interesting recent information about where wheat (ስንዴ) is grown around the globe during winter. Source: NASA Harvest (at CVPR2022). #Ethiopia is clearly visible on the map.

Mentioning some people here 😁: Solomon Kassa, Solomon Assefa, Conflict Zone, Abiy Ahmed Ali 🇪🇹

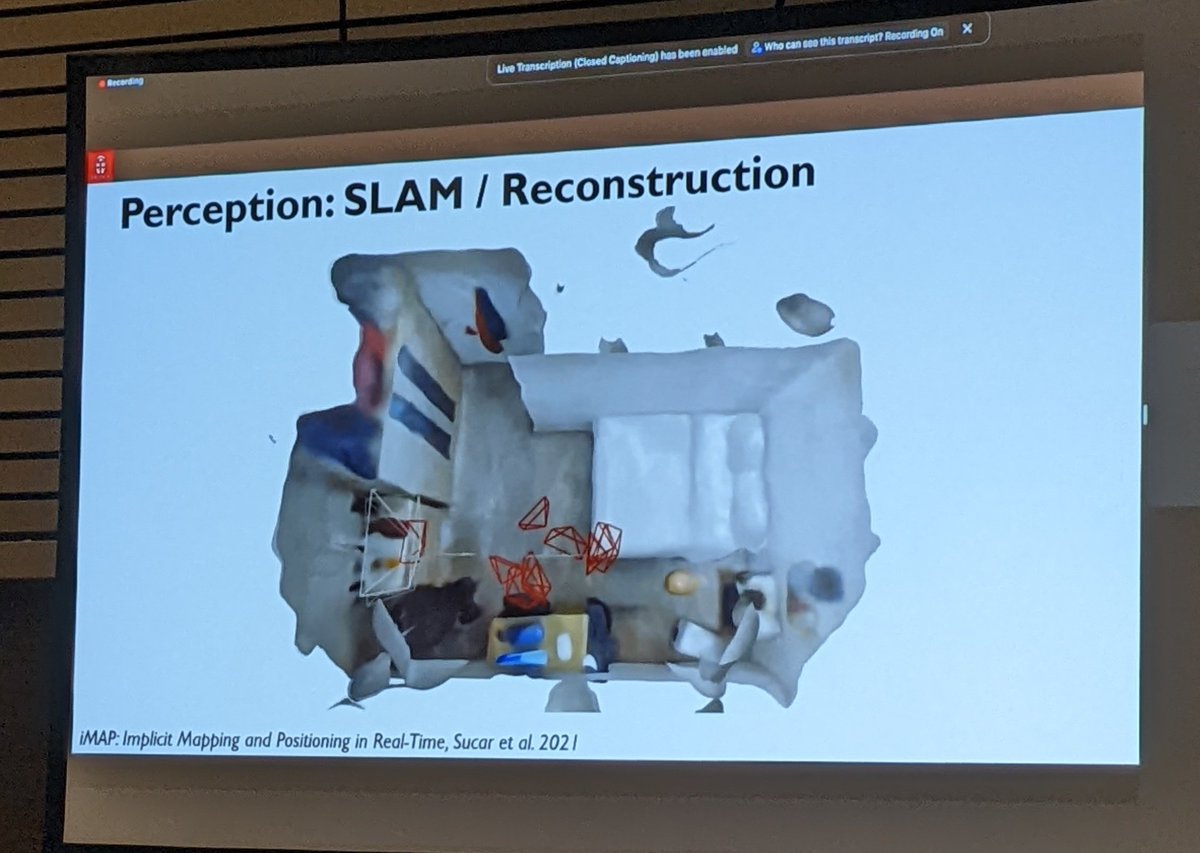

Glad to see Edgar Sucar and Andrew Davison's 'Implicit Mapping and Positioning in Real-time' highlighted in the Neural Fields in Robotics tutorial at #cvpr2022.

Take a look at the project here: edgarsucar.github.io/iMAP/