Tim G. J. Rudner

@timrudner

Faculty Fellow at NYU. Trustworthy (safe x transparent x fair) ML.

I'm on the faculty job market.

Prev: PhD Student & Rhodes Scholar at the University of Oxford.

ID:4338555809

https://timrudner.com

01-12-2015 09:55:56

2.1K Tweets

1.8K Followers

695 Following

CDS’ Tim G. J. Rudner & Julia Stoyanovich (@stoyanoj) join the national effort to set standards for AI safety with the U.S. AI Safety Institute Consortium.

“The stakes are high. And it’s important to get this right,” said Rudner.

Learn more: nyudatascience.medium.com/cds-tim-g-j-ru…

Seems like everyone wants to know more about how large language models work, but existing explainers often:

- Are too shallow or too technical

- Focus on how LLMs predict the next word, which is only part of the story

So we wrote 3 explainers of our own! 🧵

cset.georgetown.edu/article/the-su…

Making a good benchmark may seem easy (just collect a dataset), but it requires getting multiple high-level design choices right.

Thomas Woodside and I wrote a post on how to design good ML benchmarks:

safe.ai/blog/devising-…

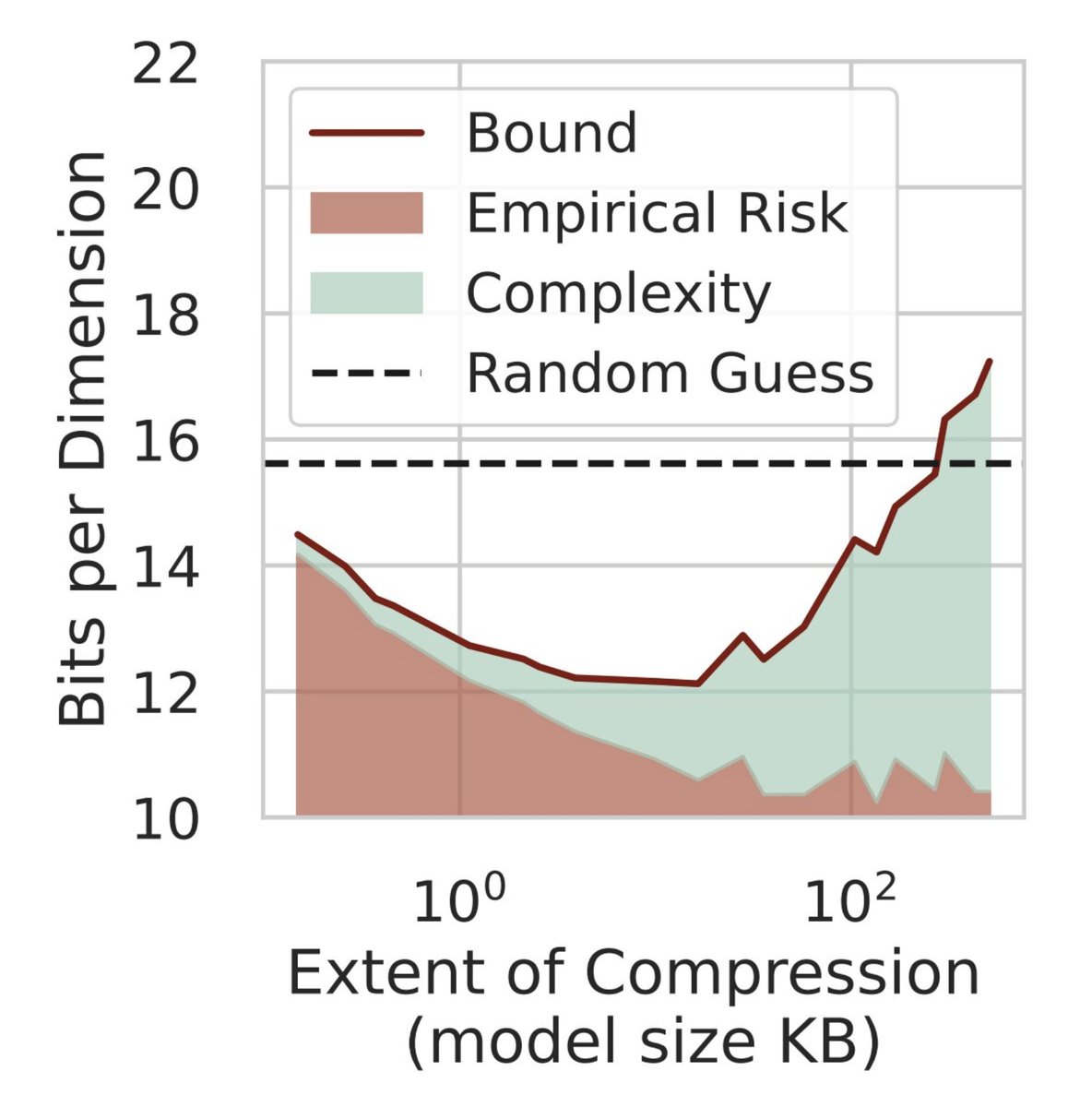

In this work, we construct the first non-vacuous generalization bounds for LLMs, helping to explain why these models generalize.

w/ Sanae Lotfi, Yilun Kuang, Tim G. J. Rudner, Micah Goldblum, Andrew Gordon Wilson

arxiv.org/abs/2312.17173

A 🧵 on how we make these bounds

1/9

📢 We obtained the **first non-vacuous generalization bounds** for pre-trained LLMs! 🚀🤖

Check out our paper (📄: arxiv.org/abs/2312.17173) and Micah Goldblum's excellent thread 🧵below:

📢 We obtained the **first non-vacuous generalization bounds** for pre-trained large language models! 🚀🤖

Check out our paper (arxiv.org/abs/2312.17173) and Sanae Lotfi's excellent thread 🧵 below:

Please apply if you are a UK undergraduate from under-represented backgrounds interested in exploring what a career in AI research is like. Deadline Feb 17!

Google DeepMind has kindly supported the UNIQ+ AI internship projects at the University of Oxford. Thank you!

Excited to share that my book on technological revolutions and power transitions will be published by Princeton University Press @princetonupress.bsky.s in August.

Available to preorder ($30 paperback):

- amazon.com/Technology-Ris…

- bookshop.org/p/books/techno…

![Cong Lu (@cong_ml) on Twitter photo 2024-02-23 17:04:51 🚨 Model-based methods for offline RL aren’t working for the reasons you think! 🚨 In our new work, led by @anyaasims, we uncover a hidden “edge-of-reach” pathology which we show is the actual reason why offline MBRL methods work or fail! Let's dive in! 🧵 [1/N]](https://pbs.twimg.com/media/GHCX5YtasAEB9kI.jpg)

![Ahmad Beirami (@abeirami) on Twitter photo 2024-02-05 01:00:27 [#eacl2024 paper] TL;DR We introduce gradient-based red teaming (GBRT), an effective method for triggering language models to produce unsafe responses, even when the LM is finetuned to be safe through alignment.](https://pbs.twimg.com/media/GFiG9NgWAAAXZPS.jpg)