Xi Ye

@xiye_nlp

I study NLP. CS PhD student @UTAustin. Incoming postdoc fellow @PrincetonPLI. Incoming assistant professor @UAlberta (Summer 2025).

ID:1242135548040548352

https://www.cs.utexas.edu/~xiye/ 23-03-2020 17:06:14

127 Tweets

1.5K Followers

305 Following

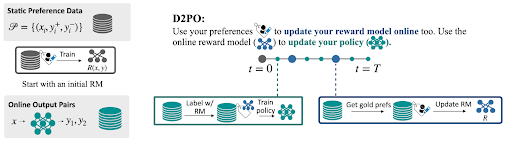

Labeling preferences online for LLM alignment improves DPO compared with using static prefs. We show that online prefs can also train a reward model, which then labels *even more* preferences to train the LLM.

D2PO: discriminator-guided DPO

Work w/ Nathan Lambert, Scott Niekum, Tanya Goyal, Greg Durrett
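A minimal sketch of the two ingredients the post mentions, under stated assumptions: the standard DPO loss on one preference pair, plus a step where a reward model (the "discriminator") labels extra response pairs for further DPO training. The reward model is a hypothetical plain function `reward_model(prompt, response) -> float`, and `silver_label` and `toy_rm` are illustrative names; this is not the paper's implementation.

```python
import math
from typing import Callable, List, Tuple

def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair, given summed sequence log-probs
    under the policy and under the frozen reference model."""
    margin = beta * ((logp_chosen - ref_logp_chosen)
                     - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(margin)) == softplus(-margin), computed without overflow
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical interface: (prompt, response) -> scalar score
RewardFn = Callable[[str, str], float]

def silver_label(pairs: List[Tuple[str, str, str]],
                 reward_model: RewardFn) -> List[Tuple[str, str, str]]:
    """Turn unlabeled (prompt, a, b) pairs into (prompt, chosen, rejected)
    triples by asking the reward model which response scores higher."""
    labeled = []
    for prompt, a, b in pairs:
        if reward_model(prompt, a) >= reward_model(prompt, b):
            labeled.append((prompt, a, b))
        else:
            labeled.append((prompt, b, a))
    return labeled

def toy_rm(prompt: str, response: str) -> float:
    """Toy stand-in reward model that simply prefers longer responses."""
    return float(len(response))

print(silver_label([("Q", "short", "a longer answer")], toy_rm))
# [('Q', 'a longer answer', 'short')]
print(round(dpo_loss(0.0, 0.0, 0.0, 0.0), 4))  # 0.6931, i.e. log 2 at zero margin
```

In an online loop of the kind the post describes, human (gold) preferences would train the reward model, and `silver_label` would then cheaply label many more policy samples for DPO.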

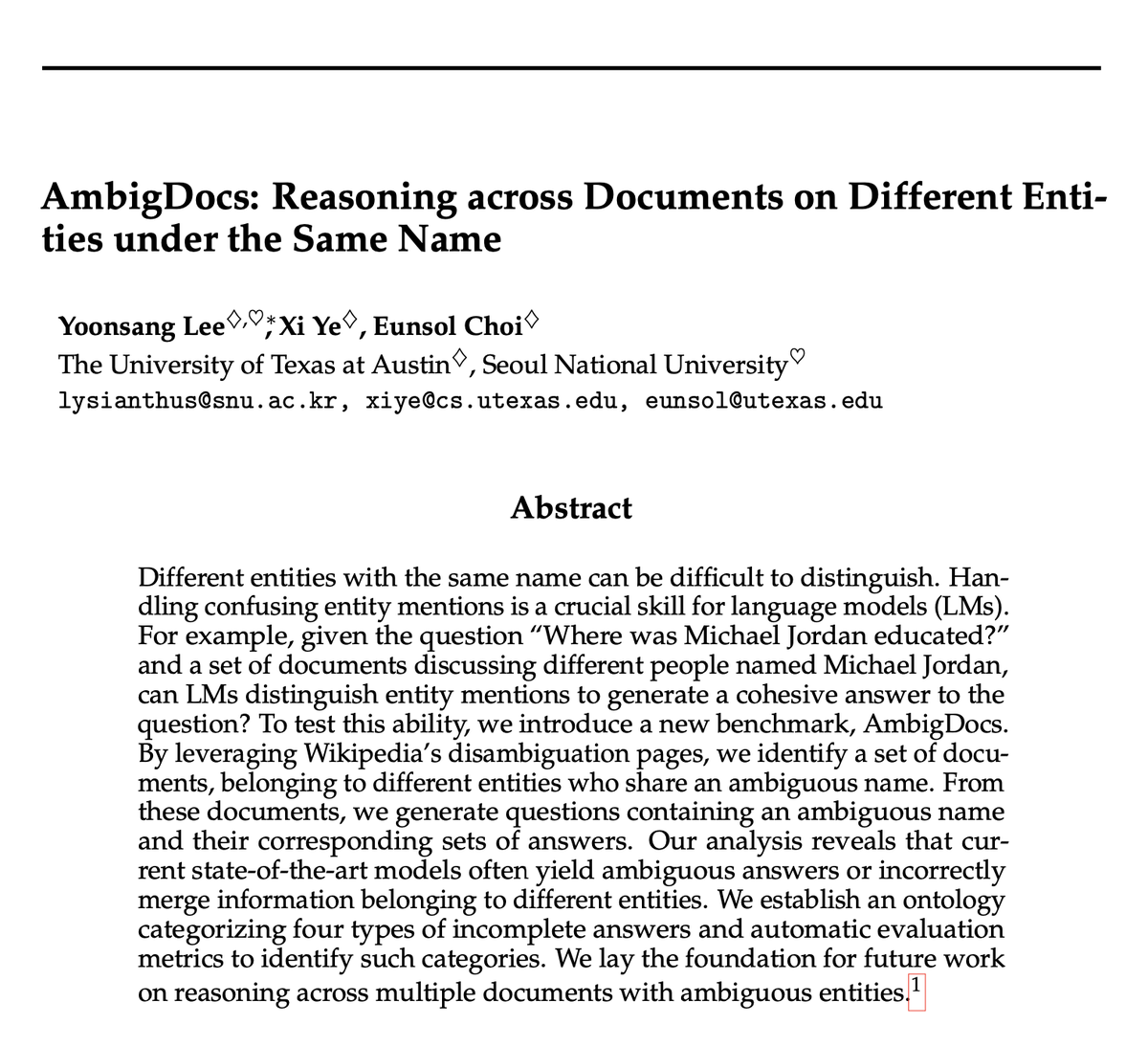

Can LMs correctly distinguish 🔎 confusing entity mentions across multiple documents?

We study how current LMs perform QA when given ambiguous questions and a document set 📚 that requires challenging entity disambiguation.

Work done at Computer Science at UT Austin ✨ w/ Xi Ye, Eunsol Choi

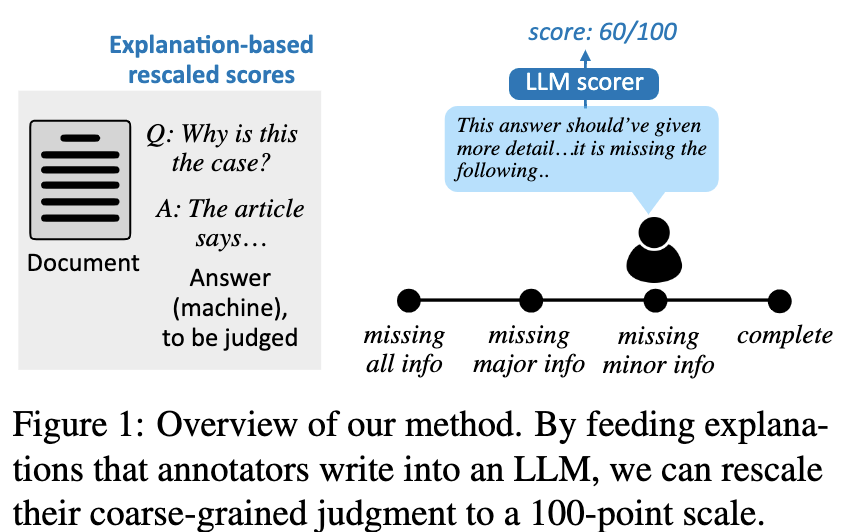

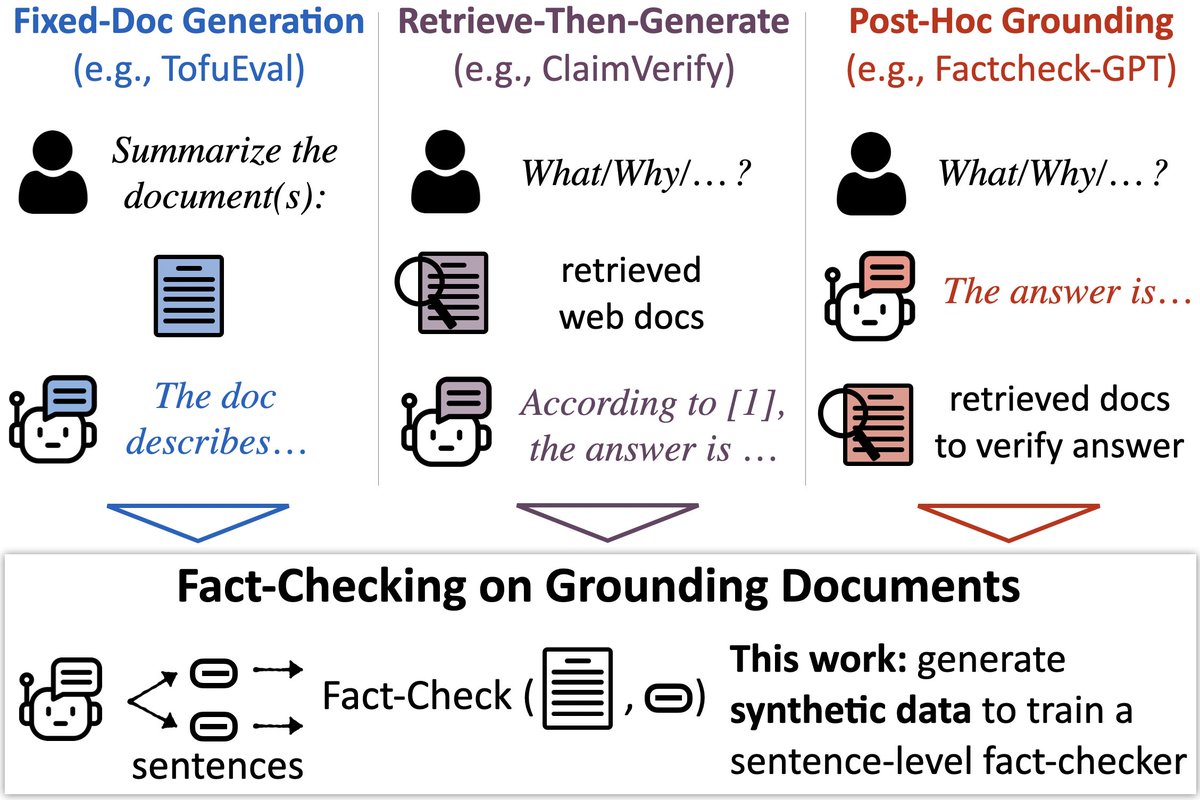

🔎📄 New model & benchmark to check LLM outputs against docs (e.g., fact-checking RAG)

🕵️ MiniCheck: a model w/ GPT-4-level accuracy at 400x lower cost

📚 LLM-AggreFact: collects 10 human-labeled datasets of errors in model outputs

arxiv.org/abs/2404.10774

w/ Philippe Laban, Greg Durrett 🧵

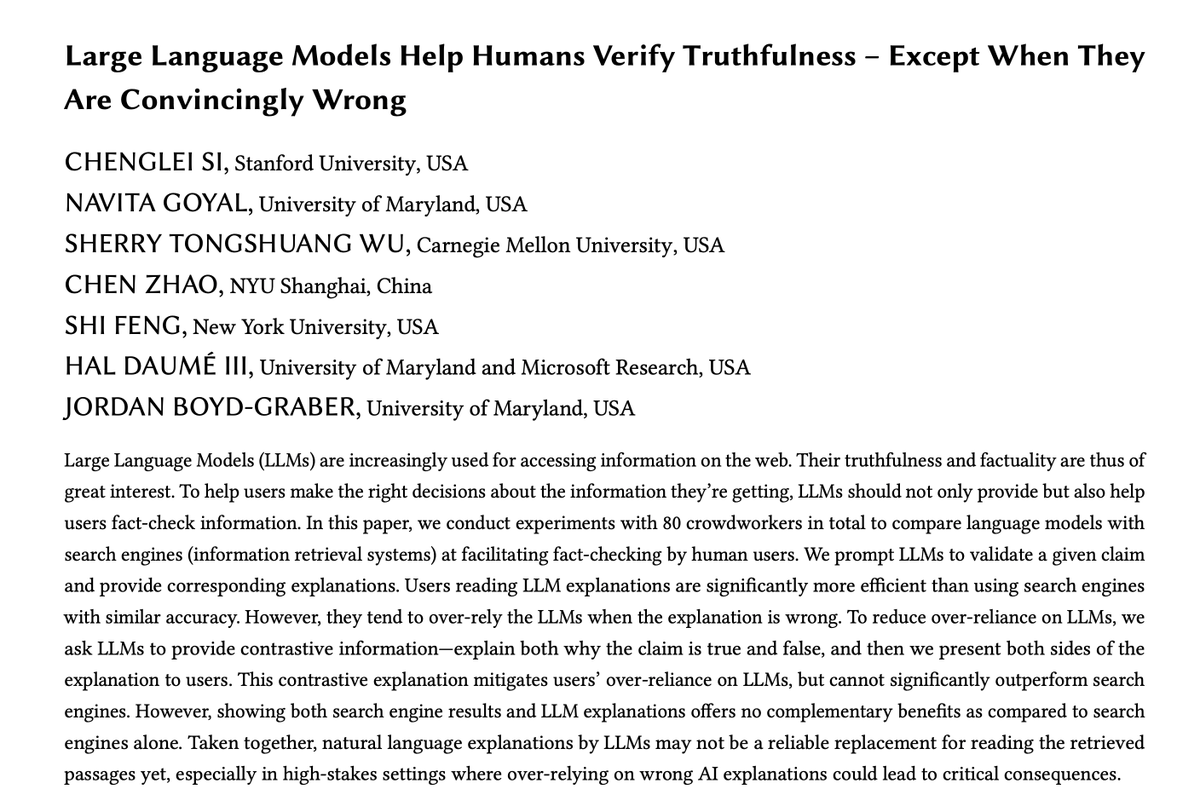

This is a cool method, but 'superhuman' is an overclaim based on the data shown. There are better datasets than FActScore for evaluating this:

ExpertQA arxiv.org/abs/2309.07852 by Chaitanya Malaviya et al.

Factcheck-GPT arxiv.org/abs/2311.09000 by Yuxia Wang et al. (+ same methodology) 🧵

Super excited to bring ChatGPT Murder Mysteries from our dataset MuSR to #ICLR2024 as a spotlight presentation!

A big shout-out goes to my coauthors, Xi Ye, Kaj Bostrom, Swarat Chaudhuri, and Greg Durrett.

See you all there 😀

Heading to #NeurIPS2023 ✈️

Happy to chat about LLM explanations, LLM reasoning, and more (as well as crawfish and oysters 🦪)

📣📣 I am also on the academic job market this year. Any chat is welcome!

I’ll present SatLM with Jocelyn (Qiaochu) Chen and Greg Durrett on Wednesday (Poster Session 4)

Still feel weird about the virtual board thing 😂

Here’s a trick that helped me get some interaction

#EMNLP2023

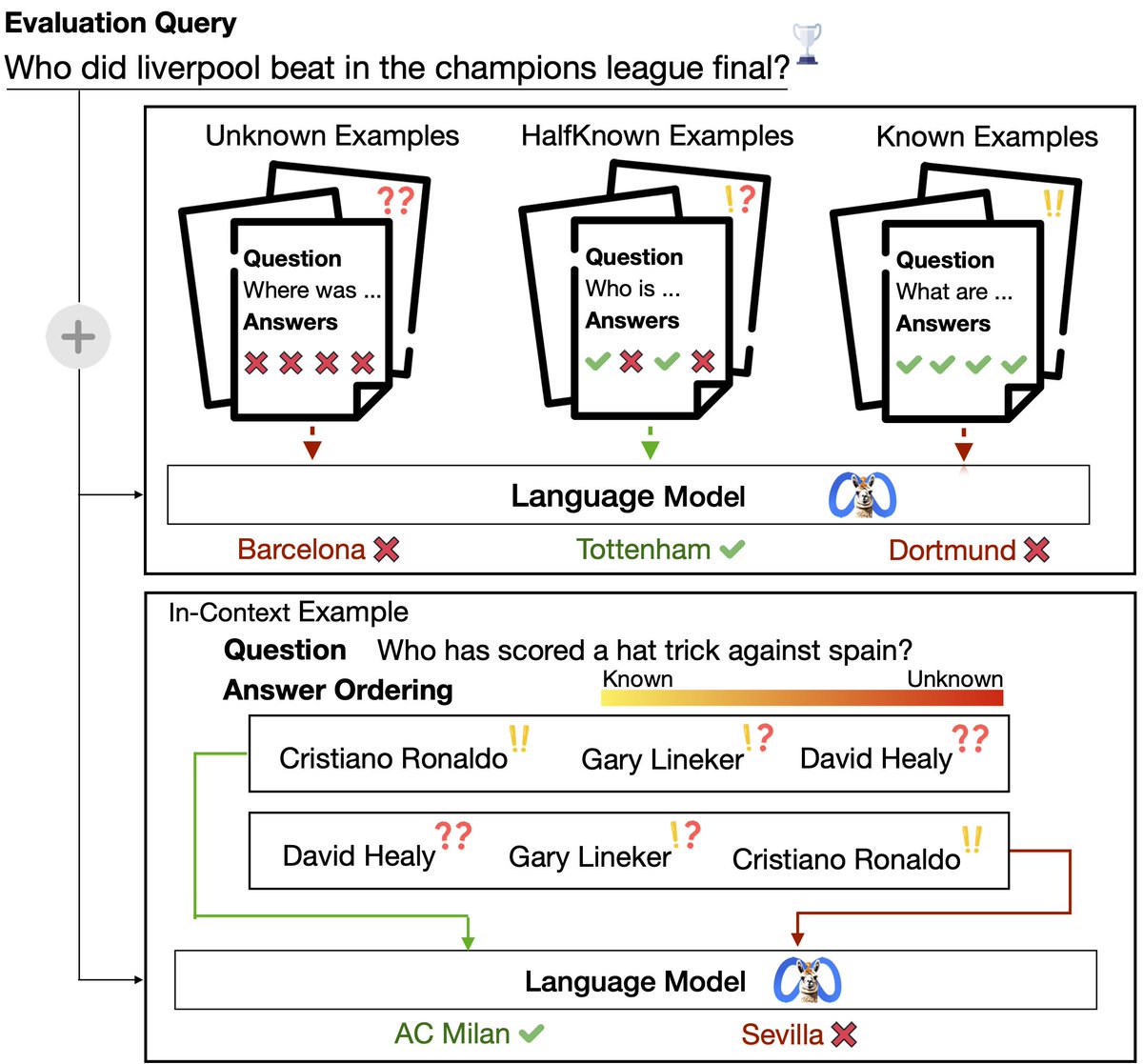

Known examples ❗️ or unknown examples ❓ for prompting an LM?

We propose best practices for crafting ✏️ in-context examples according to LMs' parametric knowledge 📚.

Work done at Computer Science at UT Austin ✨ w/ Pranav Atreya, Xi Ye, Eunsol Choi

lilys012.github.io/assets/pdf/cra…

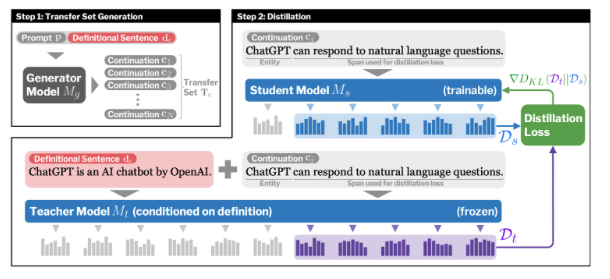

How do we teach LMs about new entities? Our #NeurIPS2023 paper proposes a distillation-based method to inject new entities via definitions. The LM can then make inferences that go beyond those definitions!

arxiv.org/abs/2306.09306

w/ Yasumasa Onoe, Michael Zhang, Greg Durrett, Eunsol Choi
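As a rough illustration of what "distillation-based" injection can mean, here is a sketch assuming a common setup: a teacher LM sees the entity's definition in its context, a student LM sees only the continuation text, and the student is trained to match the teacher's next-token distributions via KL divergence. The distributions are plain probability lists here rather than model outputs, and `kl_divergence` and `distillation_loss` are hypothetical helper names; the paper's exact objective may differ.

```python
import math
from typing import List, Sequence

def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) between two next-token distributions over the same vocab.
    Terms with p_i == 0 contribute nothing and are skipped."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(teacher_dists: List[Sequence[float]],
                      student_dists: List[Sequence[float]]) -> float:
    """Average per-position KL from the teacher (definition in context)
    to the student (no definition). Minimizing this pushes the student to
    behave as if it 'knew' the definition."""
    kls = [kl_divergence(p, q) for p, q in zip(teacher_dists, student_dists)]
    return sum(kls) / len(kls)

# Toy example over a 2-token vocabulary at a single position.
print(distillation_loss([[0.5, 0.5]], [[0.5, 0.5]]))  # 0.0: student already matches
print(distillation_loss([[0.9, 0.1]], [[0.5, 0.5]]) > 0)  # True: mismatch is penalized
```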

Check out Zayne Sprague's super interesting work, MuSR.

We use GPT-4 to create murder mysteries🕵️ (and more) to test LLMs' reasoning abilities. We all had a lot of fun solving the mysteries during the project LOL.

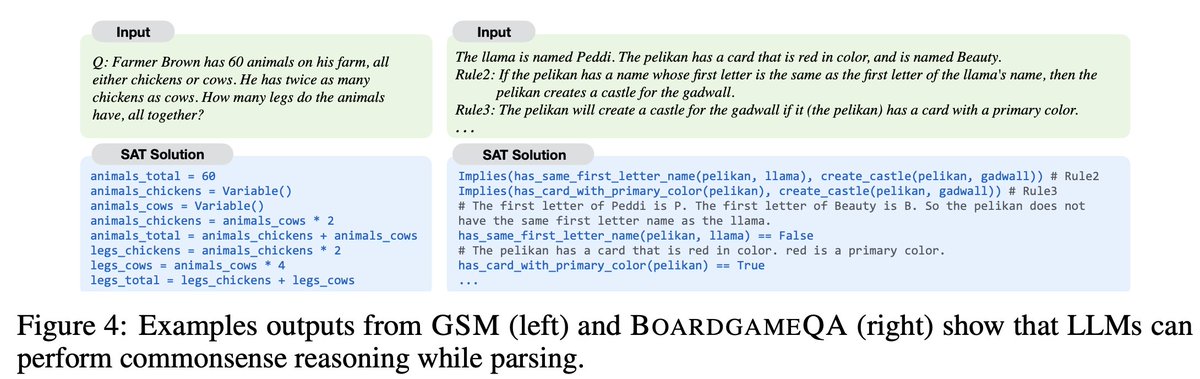

SatLM is now accepted at #NeurIPS2023

One strength 💪 of this framework is that it handles many tasks with the same solver (see previous 🧵). We added new results on the recently released BoardgameQA. SatLM also achieves SOTA 💡 on this dataset, which requires lots of commonsense knowledge.
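To make the "same solver, many tasks" point concrete, here is a toy sketch, not SatLM's code: each task is written as declarative constraints (hand-written here, standing in for what an LM would generate), and one generic solver answers both. A brute-force search over small finite domains stands in for a real SAT/SMT solver; `solve` and the example puzzles are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence

Assignment = Dict[str, int]
Constraint = Callable[[Assignment], bool]

def solve(variables: Sequence[str],
          domains: Dict[str, Sequence[int]],
          constraints: List[Constraint]) -> List[Assignment]:
    """Brute-force stand-in for a solver: enumerate every assignment over
    small finite domains and keep those satisfying all constraints."""
    solutions = []
    for values in product(*(domains[v] for v in variables)):
        assignment = dict(zip(variables, values))
        if all(check(assignment) for check in constraints):
            solutions.append(assignment)
    return solutions

# Task 1: a seating puzzle expressed as constraints.
people = ["alice", "bob", "carol"]
seating = solve(
    people,
    {p: [1, 2, 3] for p in people},
    [
        lambda a: len(set(a.values())) == 3,  # everyone sits in a different seat
        lambda a: a["alice"] != 1,
        lambda a: a["bob"] < a["carol"],
        lambda a: a["alice"] < a["carol"],
    ],
)
print(seating)  # [{'alice': 2, 'bob': 1, 'carol': 3}]

# Task 2: an arithmetic word problem, handled by the same solver.
nums = solve(
    ["x", "y"],
    {"x": range(11), "y": range(11)},
    [lambda a: a["x"] + a["y"] == 10, lambda a: a["x"] - a["y"] == 4],
)
print(nums)  # [{'x': 7, 'y': 3}]
```

The design point is that only the specification changes between tasks; the solving procedure stays fixed, so the LM never has to carry out the search itself.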