EdinburghNLP

@EdinburghNLP

The Natural Language Processing Group at the University of Edinburgh

ID: 862649978170245120

http://edinburghnlp.inf.ed.ac.uk/ · Joined 11-05-2017 12:45:56

988 Tweets

11.3K Followers

140 Following

Congrats to Aaditya Ura (looking for PhD), Pasquale Minervini (🚀 looking for postdocs!), Aryo Pradipta Gema, and andreas motzfeldt for this cool initiative!

Open Life Science AI

Leaderboard: huggingface.co/spaces/openlif…

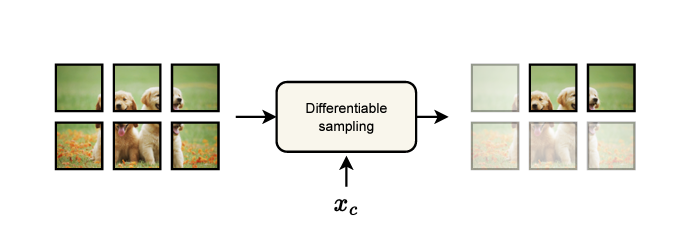

Emile van Krieken, Chendi Qian, Mathias Niepert, Christopher Morris, Zhe Zeng, kareem ahmed, Guy Van den Broeck, Daniel Daza, Thiviyan Thanapalasingam, Peter Bloem, Taraneh Younesian: I also got reports that Adaptive IMLE from Pasquale Minervini (🚀 looking for postdocs!), L. Franceschi, and Mathias Niepert works very well for some graph-related sampling tasks, but I haven't tried it out myself yet.

arxiv.org/abs/2209.04862

🚨New paper🚨

Excited to share that 'Select and Summarize: Scene Saliency for Movie Script Summarization' has been accepted at the #NAACL2024 Findings. 🎬

w/ Frank Keller

arxiv.org/abs/2404.03561…

If you are curious to learn more about Dynamic Memory Compression, I will give a preview during my keynote talk at the MOOMIN workshop at @eaclmeeting.

See you on Thursday, March 21st at 9:30 AM!

moomin-workshop.github.io/program

*Conditional computation in NNs: principles and research trends*

by Alessio Devoto, Valerio Marsocci, Jary Pomponi, and Pasquale Minervini (🚀 looking for postdocs!)

Our latest tutorial on increasing modularity in NNs with conditional computation, covering MoEs, token selection, & early exits.

arxiv.org/abs/2403.07965

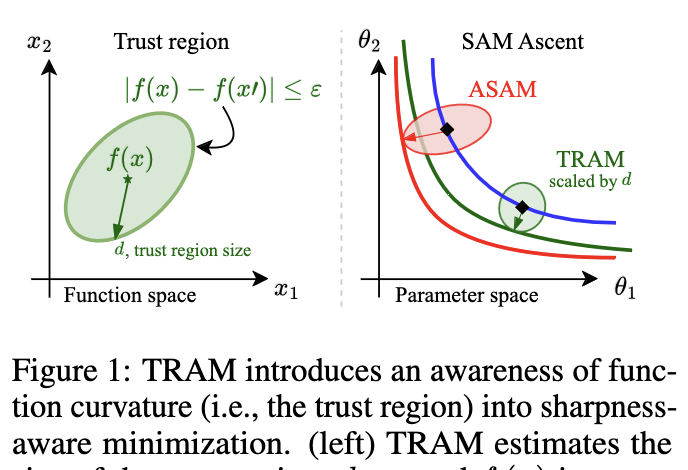

The camera-ready version of TRAM is now live! See you at #ICLR2024 in Vienna (as a spotlight poster).

Now featuring:

* Vision experiments (better ImageNet→CIFAR/Cars/Flowers transfer)

* More ablations (XL model, weird combos)

* Pictures (see below!)

w/ Naomi Saphra, Pradeep Dasigi, and Hao Peng

openreview.net/forum?id=kxebD…

![fly51fly (@fly51fly) on Twitter, photo 2024-04-05 22:15:32: [CL] Learning to Plan and Generate Text with Citations arxiv.org/abs/2404.03381 - Long-form generation in information-seeking scenarios has seen increasing demand for verifiable systems that generate responses with supporting evidence like citations. Most approaches rely on…](https://pbs.twimg.com/media/GKbx6S5bUAARXFU.jpg)