🚨 new paper 🚨

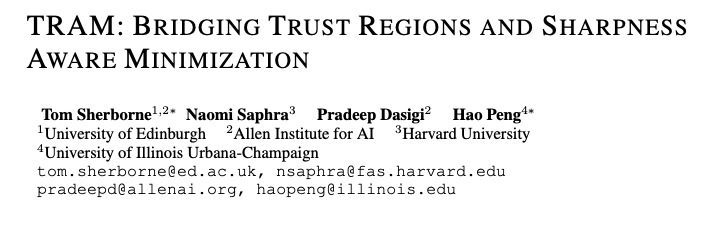

Can we train for flat minima with less catastrophic forgetting?

We propose Trust Region Aware Minimization (TRAM), which optimizes for smoothness in both parameter and representation space. TL;DR: representations matter as much as parameters!

arxiv.org/abs/2310.03646 w/ Naomi Saphra, Pradeep Dasigi, Hao Peng

The camera-ready (CR) version of TRAM is now live! See you at #ICLR2024 in Vienna (as a spotlight poster)

Now featuring:

* Vision exps (better ImageNet→CIFAR/Cars/Flowers transfer)

* +ablations (XL model, weird combos)

* Pictures (see below!)

w/ Naomi Saphra, Pradeep Dasigi, Hao Peng

openreview.net/forum?id=kxebD…

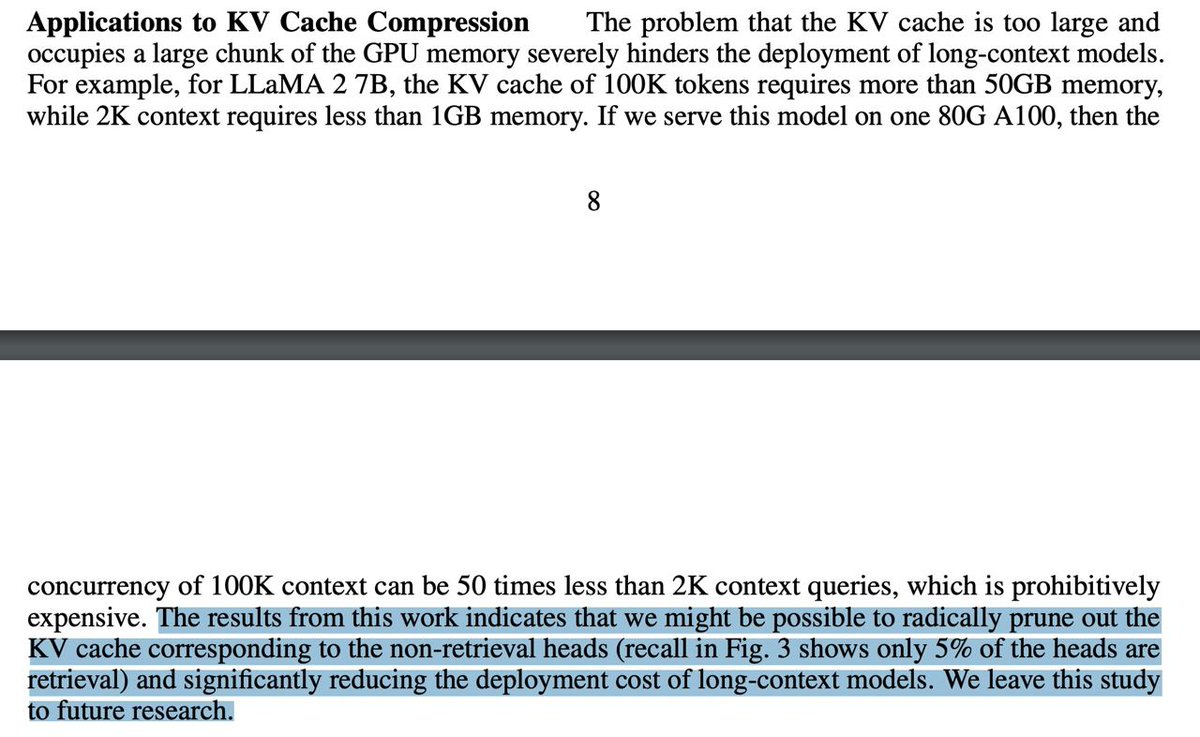

Yao Fu, Yizhong Wang, Guangxuan Xiao, Hao Peng: Really important one. I hope to see a framework that detects these heads and stores the KV cache for only 10-15% of them to support longer context (with sliding-window attention). There are likely other heads worth storing, but I doubt they make up more than 20% for most tasks.

TRAM has been accepted to ICLR 2024 (#ICLR2024) as a Spotlight! See you in Vienna 🇦🇹! Thanks to Naomi Saphra, Pradeep Dasigi, Hao Peng, the Allen Institute for AI, and AllenNLP!

Vision experiments, more discussion, and visuals are coming soon to the camera-ready!

I'll be at #ICLR2024 in Vienna next week, presenting TRAM as a Spotlight poster! Come find me in Halle B on Thu 9 May, 10:45 AM-12:45 PM CEST.

Let's talk about SAM, OOD generalisation, PhDing at EdinburghNLP, or working at Cohere.

w/ Naomi Saphra, Pradeep Dasigi, Hao Peng

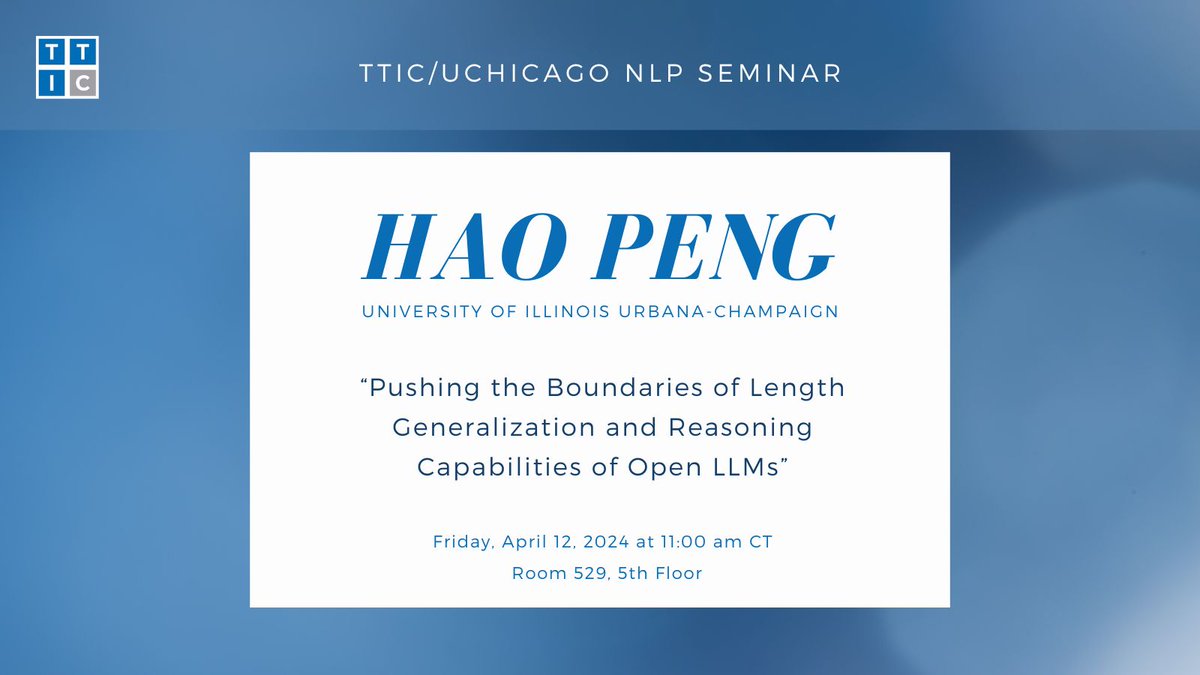

Friday, April 12, 2024 at 11:00 am CT: TTIC/UChicagoCS NLP Seminar presents Hao Peng (@haopeng_nlp) of Illinois Computer Science with a talk titled 'Pushing the Boundaries of Length Generalization and Reasoning Capabilities of Open LLMs.' Please join us in Room 529, 5th floor at TTIC.

Congrats on the great work! Ganqu Cui, hanbin, Ning Ding, Xingyao Wang, Hao Peng, Zhiyuan Liu, TsinghuaNLP, UIUC NLP, Lifan Yuan

🎙️ Speaker Announcement🎙️

We're pleased to announce the keynote speakers for the 17th Midwest Speech & Language Days Symposium (#MSLD2024), happening at the University of Michigan, April 15-16:

🌟 Eric Fosler-Lussier

🌟 Hao Peng

🌟 Betsy Sneller

🌟 Emma Strubell

Many thanks to my wonderful collaborators/advisor Xingyao Wang, @lifan__yuan, Yangyi Chen, and Hao Peng!

Check out our paper for more details: arxiv.org/abs/2311.09731

Kung-Hsiang Steeve Huang, Heng Ji, Hao Peng, Han Zhao, Shafiq Joty, UIUC NLP, Illinois Computer Science, The Grainger College of Engineering: Shout-out to Dr. Huang! What a journey, and good luck in this new chapter of your life!

Yao Fu, Yizhong Wang, Guangxuan Xiao, Hao Peng: If you freeze everything but the retrieval heads during continual pretraining, can you still get the same perfect retrieval accuracy as full-parameter training?