Neural Magic

@neuralmagic

Deploy the fastest ML on CPUs and GPUs using only software. GitHub: https://t.co/99a5S2627M #sparsity #opensource

ID:997536616481722369

https://neuralmagic.com 18-05-2018 17:57:17

721 Tweets

4.8K Followers

1.6K Following


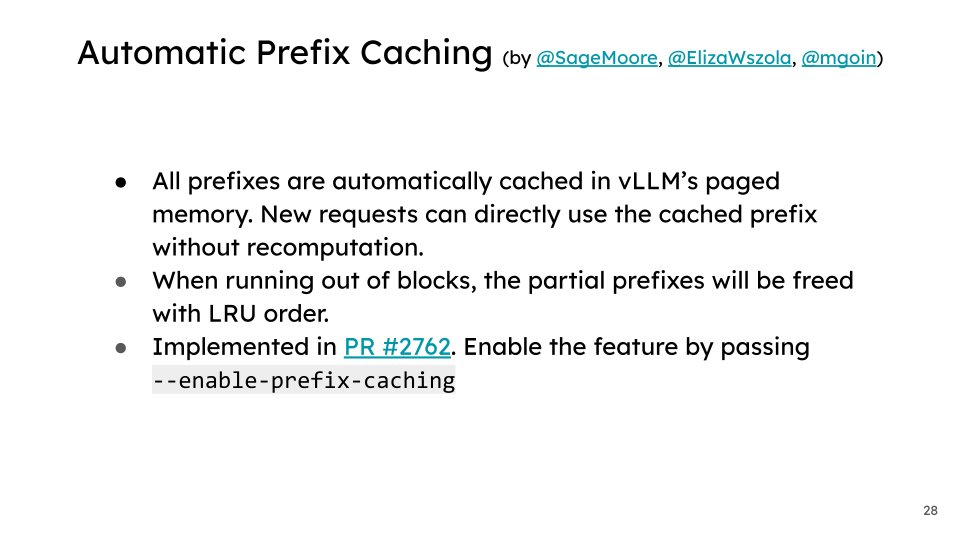

One major production feature you should try out is Automatic Prefix Caching (APC). Thanks to the contribution from Neural Magic, vLLM can _automatically_ cache long system prompts or common prefixes. This drastically saves on the compute needed for multi-turn convos as well.
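A minimal sketch of the idea behind prefix caching, for readers who want to see why a shared system prompt saves compute. This is a toy model, not vLLM's implementation: the class `ToyPrefixCache`, the `BLOCK_SIZE` value, and the string placeholder standing in for cached attention state are all hypothetical names chosen for illustration.

```python
import hashlib
from typing import Dict, List, Tuple

BLOCK_SIZE = 4  # tokens per cache block (illustrative value, an assumption)


class ToyPrefixCache:
    """Toy prefix cache: each full block of tokens is keyed by a hash that
    also covers everything before it, so a hit means the whole prefix matches."""

    def __init__(self) -> None:
        self.blocks: Dict[str, str] = {}

    @staticmethod
    def _block_hash(parent: str, tokens: Tuple[int, ...]) -> str:
        payload = parent + ":" + ",".join(map(str, tokens))
        return hashlib.sha256(payload.encode()).hexdigest()

    def process(self, tokens: List[int]) -> Tuple[int, int]:
        """Return (tokens_reused, tokens_computed) for one request."""
        parent = ""
        reused = 0
        matching = True
        full = len(tokens) - len(tokens) % BLOCK_SIZE  # only full blocks are cached
        for start in range(0, full, BLOCK_SIZE):
            block = tuple(tokens[start:start + BLOCK_SIZE])
            key = self._block_hash(parent, block)
            if matching and key in self.blocks:
                reused += BLOCK_SIZE  # cached state can be reused as-is
            else:
                matching = False
                self.blocks[key] = "kv-placeholder"  # stand-in for real cached state
            parent = key
        return reused, len(tokens) - reused


cache = ToyPrefixCache()
system_prompt = list(range(8))  # pretend these 8 token ids are a long system prompt
first = cache.process(system_prompt + [100, 101, 102])
second = cache.process(system_prompt + [200, 201, 202, 203, 204])
print(first, second)
```

The second request reuses the two blocks that cover the shared system prompt and only computes its own new tokens. In vLLM itself the feature is switched on through an engine option (`enable_prefix_caching` at the time of writing); check the vLLM documentation for the current name and defaults.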

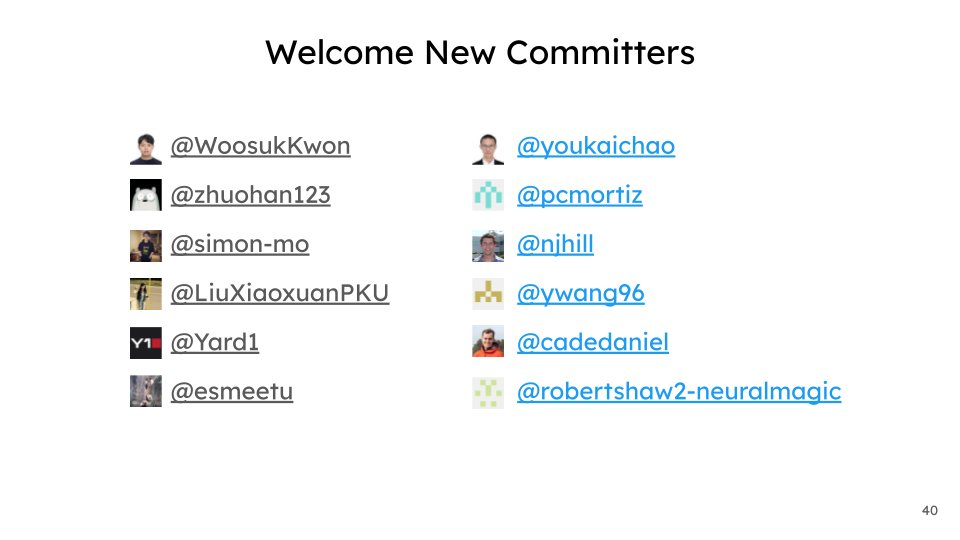

We are doubling our committer base for vLLM to ensure it is best-in-class and a truly community effort. This is just a start. Let's welcome Kaichao You, Philipp Moritz, Nick Hill, Roger Wang, Cade Daniel 🇺🇸, Robert Shaw as committers and thank you for your great work! 👏

Generative AI is a treasure trove of opportunity. Has @NeuralMagic struck gold?

Dive into a Q&A with CEO Brian Stevens in TechBullion covering 👉

1️⃣ Neural Magic's technology story

2️⃣ Future generative AI capabilities

3️⃣ New strategic partnerships

twitter.com/TechBullion/st…

Had a great AI Day with Cerebras! Our CTOs, Mark Kurtz and Sean Lie, with their teams, released expertly optimized Llama 2 7B models that have been sparsified for better inference performance and a smaller memory footprint: huggingface.co/collections/ne…

🙏 James Wang (in NYC) and Julie Choi for including us!

Democratize AI Using Optimized CPUs As The Onramp To Generative AI: Interview with Neural Magic CEO Brian Stevens. techbullion.com/democratize-ai… #AI #GenerativeAI #software (via TechBullion)

Doing things smarter with math, algorithms, and software... That's what Akamai Technologies is all about. Akamai and Neural Magic team up to accelerate AI workloads on edge CPU servers siliconangle.com/2024/03/12/aka… via SiliconANGLE

Happy to release AQLM, a new SOTA method for low-bitwidth LLM quantization, targeted to the “extreme” 2-3bit / parameter range.

With: @black_samorez @efrantar and collaborators

Arxiv: arxiv.org/abs/2401.06118

Compression & Inference Code: github.com/Vahe1994/AQLM

Snapshots:

[1/n]

![Dan Alistarh (@DAlistarh) on Twitter, photo 2024-02-07 15:23:57](https://pbs.twimg.com/media/GFviBrEWwAAQ0aS.jpg)
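To put the 2-3 bit range in perspective, here is a back-of-the-envelope sketch of weight storage at different bit widths. It is plain arithmetic, not AQLM code: the helper `weight_size_gb` is a hypothetical name, the 7-billion parameter count is an illustrative assumption, and the estimate ignores codebooks, scales, activations, and the KV cache.

```python
def weight_size_gb(num_params: int, bits_per_param: float) -> float:
    """Weights-only storage in GB (1 GB = 1e9 bytes); ignores all overheads."""
    return num_params * bits_per_param / 8 / 1e9


params = 7_000_000_000  # assumed model size, for illustration only
for bits in (16, 3, 2):
    print(f"{bits:>2} bits/param: {weight_size_gb(params, bits):.3f} GB")
```

Under these assumptions, going from 16 bits to 2 bits per parameter shrinks the weights by a factor of 8; real compressed checkpoints will be somewhat larger because of the overheads left out here.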

We've been taking popular fine-tuned LLMs from Hugging Face and applying #SparseGPT to compress them 50% with sparsity and quantization to save on memory and compute during inference. Here are two examples:

Llama 2 7b chat: huggingface.co/neuralmagic/Ll…

Hermes 2 - Solar 10.7B:…