Sanmi Koyejo

@sanmikoyejo

I lead @stai_research at Stanford.

ID:2790739088

04-09-2014 22:44:16

180 Tweets

1.5K Followers

85 Following

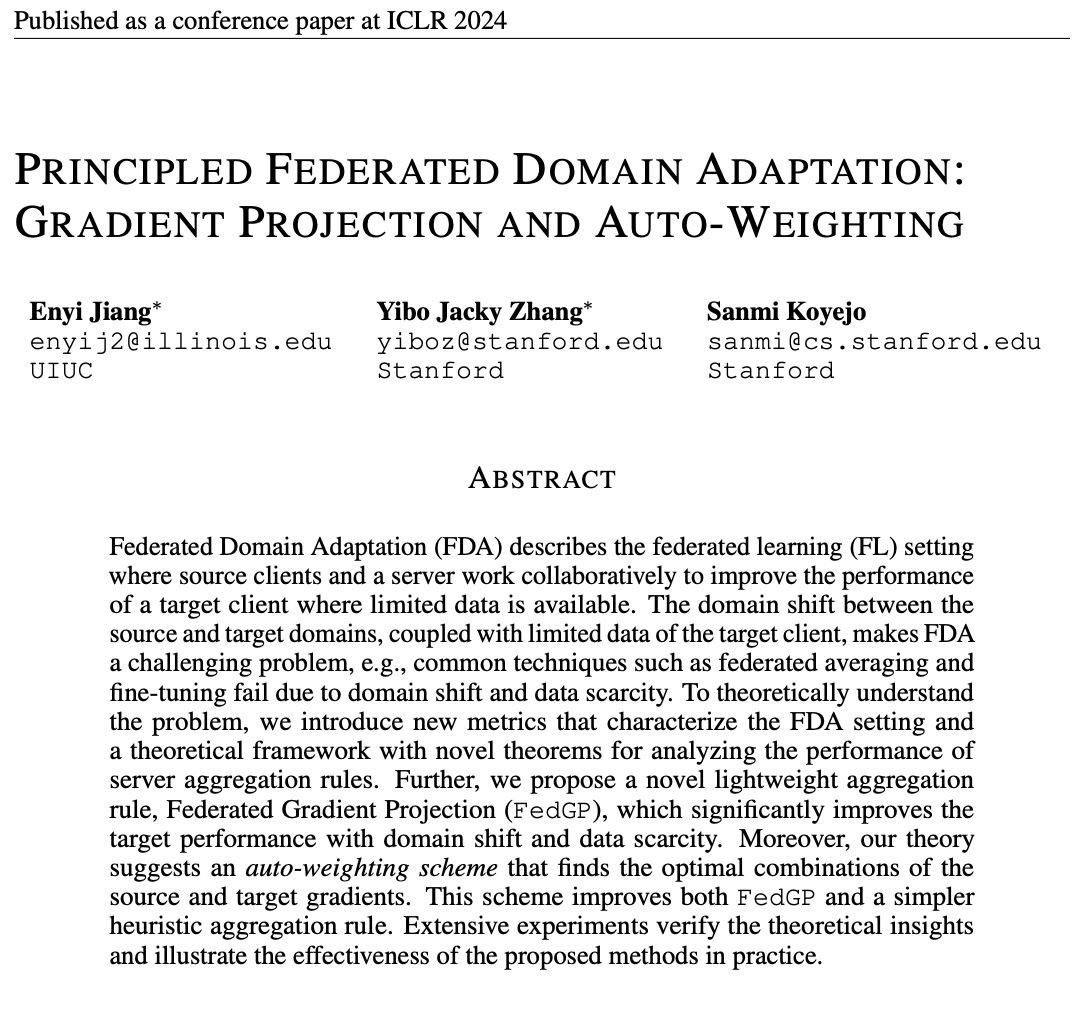

⏰ 26 days until ICLR 2024. 1️⃣ of the many FL 👩‍🔬 papers there is: 'Principled Federated Domain Adaptation: Gradient Projection➕Auto-weighting' -- from Enyi Jiang, Yibo Jacky Zhang, Sanmi Koyejo (University of Illinois, Stanford University). Built w/ 🌼 Flower! See you in Austria 🚀

📝 Paper: buff.ly/3vXZVpv

Proxy methods: not just for causal effect estimation! #aistats24

Adapt to domain shifts in an unobserved latent, with either

- concept variables

- multiple training domains

arxiv.org/abs/2403.07442

Tsai, Stephen Pfohl, Olawale Salaudeen, Nicole Chiou (she/her), Kusner, Alexander D'Amour, Sanmi Koyejo

A new report from Sanmi Koyejo & @stanfordHAI: The effect of AI on Black Americans, including health: 'AI in medical imaging & diagnostics excels at reducing unnecessary deaths, but employing diverse training datasets will be key to ensure equal performance across racial groups.'

New white paper: Stanford HAI and Black in AI join forces to present considerations for The Black Caucus’s policy initiatives by highlighting where AI can exacerbate racial inequalities and where it can benefit Black communities. Read here: hai.stanford.edu/white-paper-ex…

HAI faculty affiliate Sanmi Koyejo presented the white paper to Rep. Steven Horsford, Yvette D. Clarke, Rep. Barbara Lee, and Rep. Emanuel Cleaver at the inaugural meeting of The Black Caucus’s AI Policy Series last week & met with Senator @corybooker in Washington along w/ Black in AI CEO Gelyn Watkins.

Are you hiring top AI talent?

Here is a list of Ph.D. students affiliated with Stanford AI Lab who are on the industry and academic job markets this year! This list showcases diverse research areas and 41% of these graduates are URMs!

Check it out: ai.stanford.edu/blog/sail-grad…

Today we’re joined by Sanmi Koyejo, assistant professor at Stanford University, to discuss his award-winning papers: ‘Are Emergent Abilities of Large Language Models a Mirage?’ & 'Comprehensive Assessment of Trustworthiness in GPT Models'.

🎧/📷: twimlai.com/go/671

🔑 takeaways (1/5)

From Africa to the 'World Cup of AI' = NeurIPS! decisiveagents.com/capetocarthage

Thanks for a beautiful journey Rihab Gorsane, Omayma Mahjoub, Ruan de Kock, Siddarth Singh, Zohra Slim and Karim Beguir. ❤️ Thanks Sara Hooker, Sanmi Koyejo, Shakir Mohamed for your voice 🙏 I hope it inspires many! 🌍

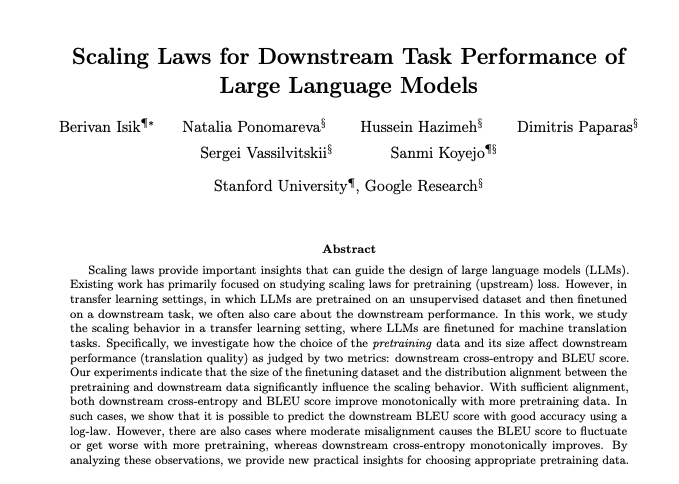

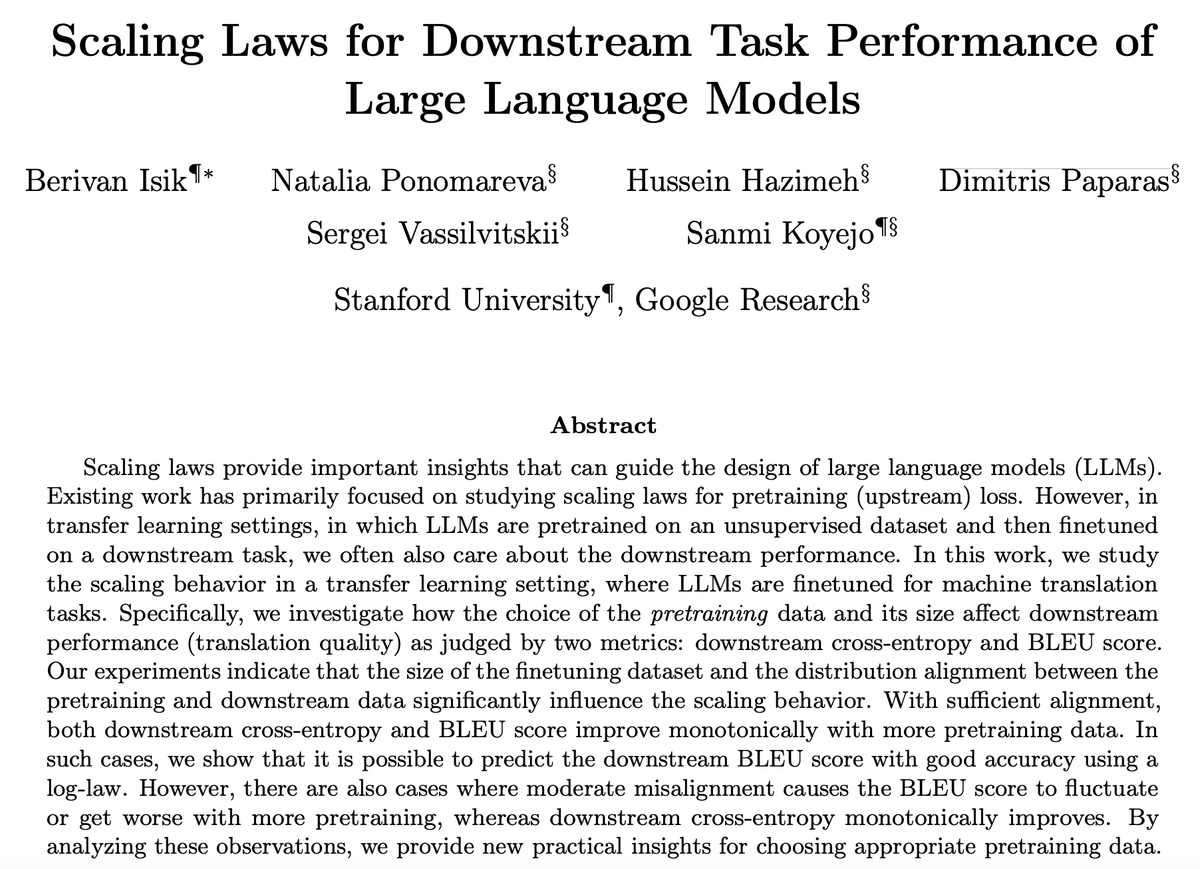

Very excited to share the paper from my last Google AI internship: Scaling Laws for Downstream Task Performance of LLMs.

arxiv.org/pdf/2402.04177…

w/ Natalia Ponomareva, Hussein Hazimeh, Dimitris Paparas, Sergei Vassilvitskii, and Sanmi Koyejo

1/6

Transforming the reward used in RLHF gives big wins in LLM alignment and makes it easy to combine multiple reward functions! arxiv.org/pdf/2402.00742… Chirag Nagpal, Jonathan Berant, Jacob Eisenstein, Alexander D'Amour, Sanmi Koyejo, Victor Veitch, Google DeepMind

Exciting News! CHIL 2024 features a stellar lineup of speakers and panelists. Get ready to dive into the latest innovations in computing, healthcare, and AI with Samantha Kleinberg, Sanmi Koyejo, Deb Raji, Leo Anthony Celi, David Meltzer, Kyra Gan, and Girish Nadkarni! 🌟

✨ Excited to share our work at ACM CCS 2024 on unraveling the connections between Differential Privacy and Certified Robustness in Federated Learning against poisoning attacks! 🛡️🤖

🗓️ Join our talk this afternoon. Happy to discuss if you are around!

Paper: arxiv.org/abs/2209.04030

How many adversarial users (instances) can a user-level (instance-level) DPFL mechanism tolerate with certified robustness? 🧐

Check out our paper! arxiv.org/abs/2209.04030

👥 Collaboration with Yunhui Long, Pin-Yu Chen, Qinbin Li, Sanmi Koyejo, Secure Learning Lab (SLL) 🥳

Excited to announce a new preprint led by Minhao Jiang, Ken Liu (Illinois Computer Science, Stanford AI Lab)!!

Q: What happens when language models are pretrained on data contaminated w/ benchmarks, ratcheting up amount of contamination?

A: LMs do better, but curves can be U-shaped!

1/2

We trained some GPT-2 models *from scratch* where evaluation data are deliberately added to/removed from pre-training to study the effects of data contamination!

Three takeaways below 🧵:

Paper: arxiv.org/abs/2401.06059

Led by Minhao Jiang, with Rylan Schaeffer & Sanmi Koyejo

![sijia.liu (@sijialiu17) on Twitter, photo 2024-02-15 02:09:51](https://pbs.twimg.com/media/GGV94YkaUAAtAF4.jpg)

[1/3] 🌟 Excited to share our latest work 'Rethinking Machine Unlearning for LLMs' on arXiv! 🚀 Check it out: arxiv.org/abs/2402.08787 📢 Our mission? To cleanse LLMs of harmful, sensitive, or illegal data influences and refine their capabilities.