#ACL2023NLP tutorial links:

T1: Goal Awareness for Conversational AI: Proactivity, Non-collaborativity, and Beyond dengyang17.github.io/files/ACL2023-…

T2: Complex Reasoning in Natural Language wenting-zhao.github.io/complex-reason…

We introduce the first multimodal instruction tuning dataset: 🌟MultiInstruct🌟 in our 🚀 #ACL2023NLP 🚀 paper. MultiInstruct consists of 62 diverse multimodal tasks, each equipped with 5 expert-written instructions.

🚩arxiv.org/abs/2212.10773🧵[1/3]

![zhiyang xu (@zhiyangx11) on Twitter photo 2023-06-11 20:06:19](https://pbs.twimg.com/media/FyXZnKoaEAAmew1.jpg)

New #ACL2023NLP paper!

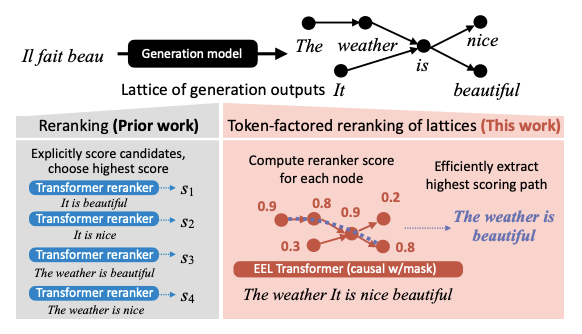

Reranking generation sets with transformer-based metrics can be slow. What if we could rerank everything at once? We propose EEL: Efficient Encoding of Lattices for fast reranking!

Paper: arxiv.org/abs/2306.00947 w/ Jiacheng Xu, Xi Ye, Greg Durrett

📢 New paper to appear at #acl2023nlp: arxiv.org/abs/2212.10534

Human-quality counterfactual data with no humans! We introduce DISCO, our novel distillation framework that uses LLMs to automatically generate high-quality, diverse, and useful counterfactual data at scale.

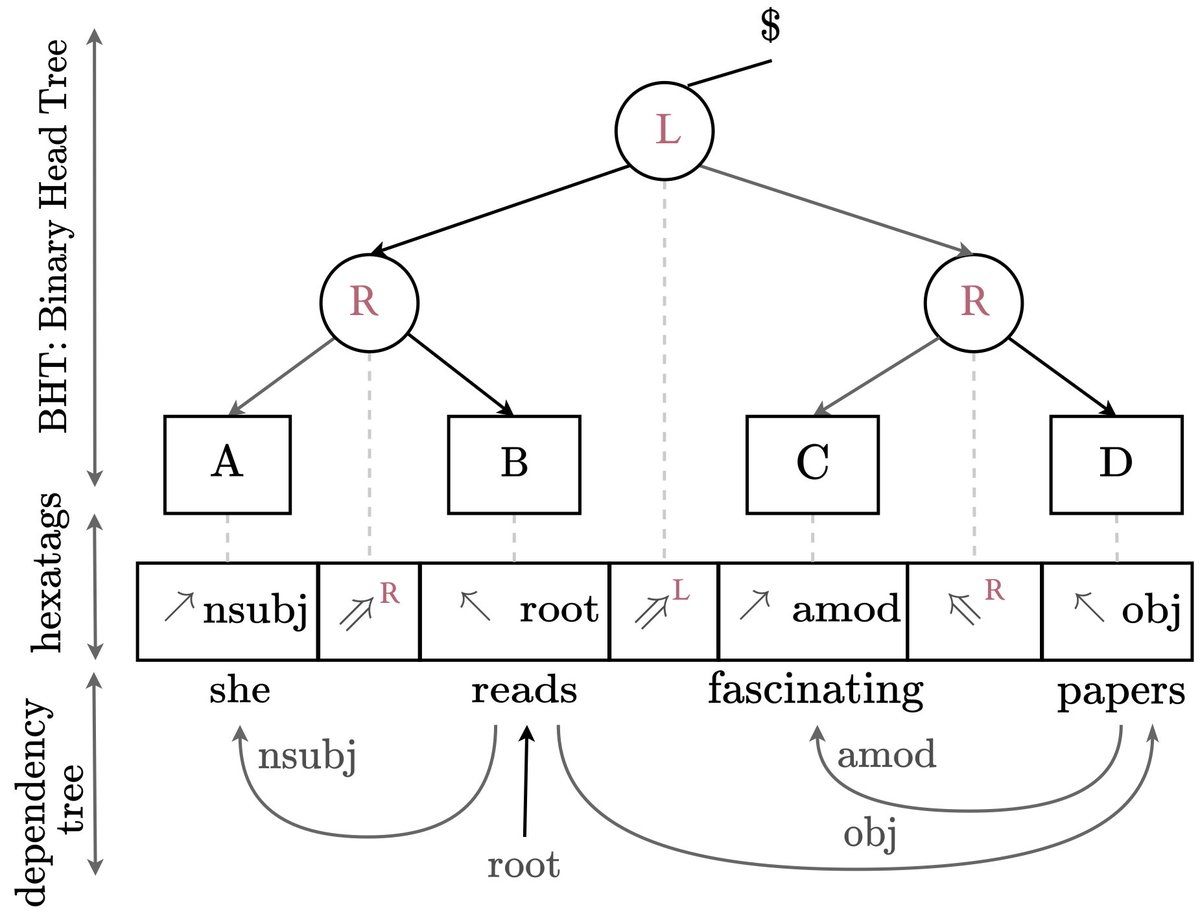

Are you a big fan of structure?

Have you ever wanted to apply the latest and greatest large language model out-of-the-box to parsing?

Are you a secret connoisseur of linear-time dynamic programs?

If you answered yes, our outstanding #ACL2023NLP paper may be just right for you!

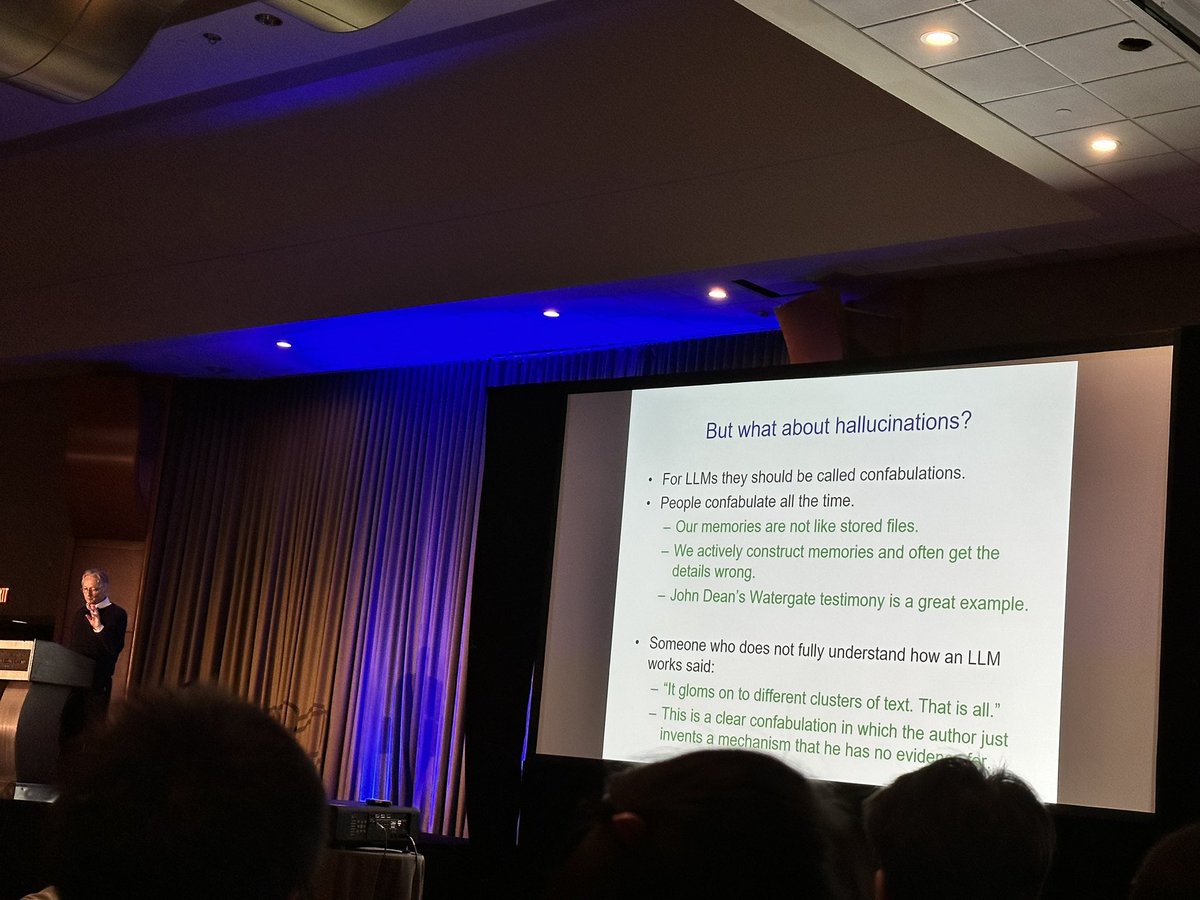

Geoffrey Hinton (#ACL2023NLP keynote address): LLMs’ hallucinations should be called confabulations

Grateful and proud to learn I was an outstanding reviewer at #ACL2023NLP !

1% of reviewers are recognized for their effort in giving thoughtful and detailed peer reviews of submissions

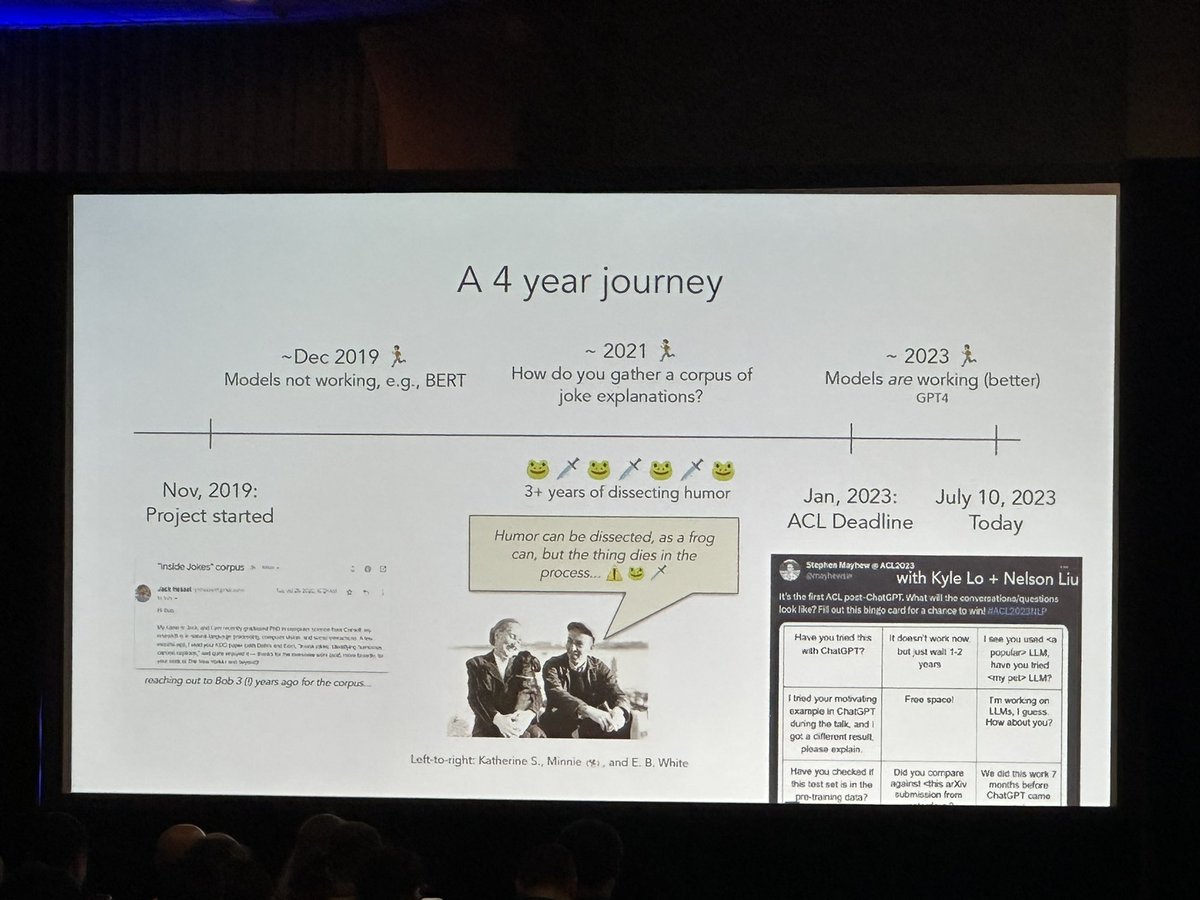

Inspirational words from Jack Hessel in his #ACL2023NLP best paper talk:

'If you're a PhD student working on a long-term project that you're excited about but still isn't coming together, keep it up...I want to read your paper!'

The slide shows the '4-year journey' from idea to award

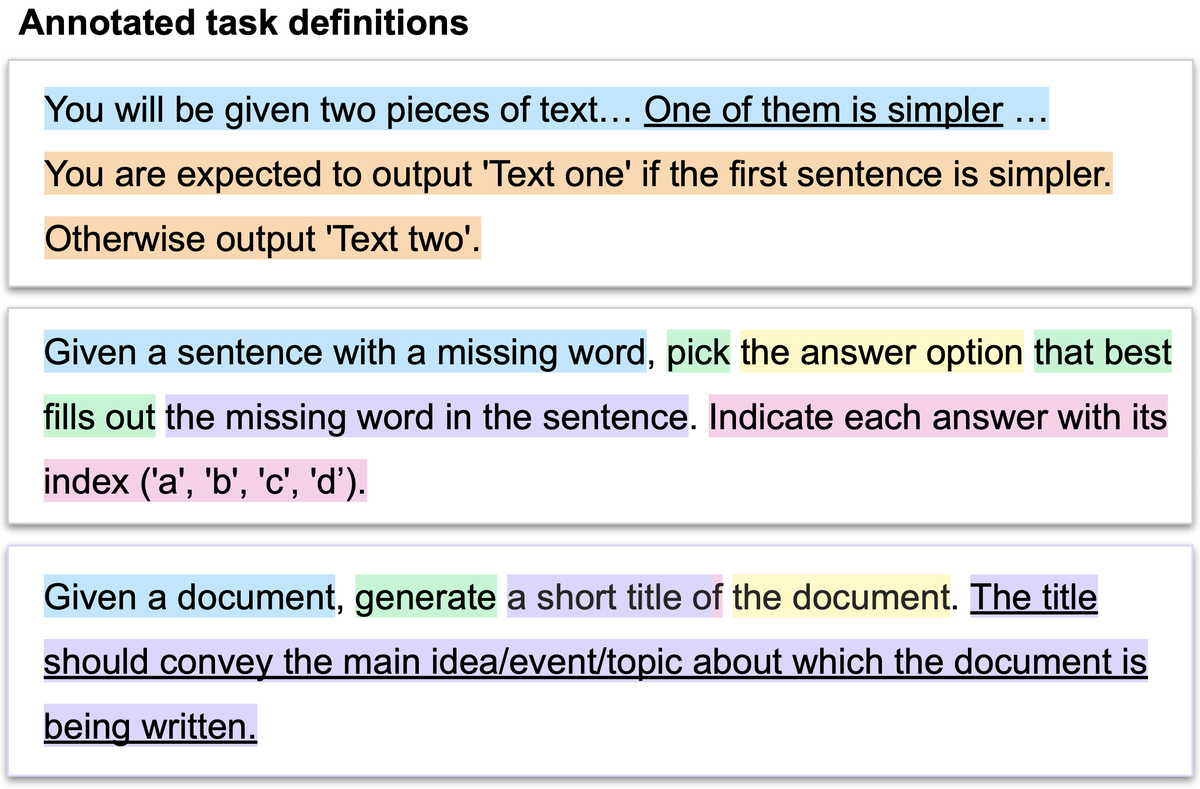

🤔Which words in your prompt are most helpful to language models? In our #ACL2023NLP paper, we explore which parts of task instructions are most important for model performance.

🔗 arxiv.org/abs/2306.01150

Code: github.com/fanyin3639/Ret…

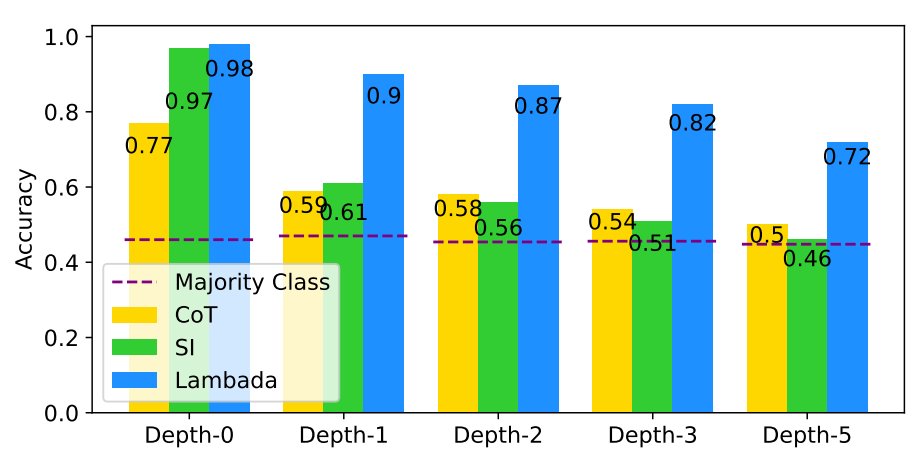

Chart captioning is hard, both for humans & AI.

Today, we’re introducing VisText: a benchmark dataset of 12k+ visually diverse charts w/ rich captions for automatic captioning (w/ Angie Boggust and Arvind Satyanarayan)

📄: vis.csail.mit.edu/pubs/vistext.p…

💻: github.com/mitvis/vistext

#ACL2023NLP

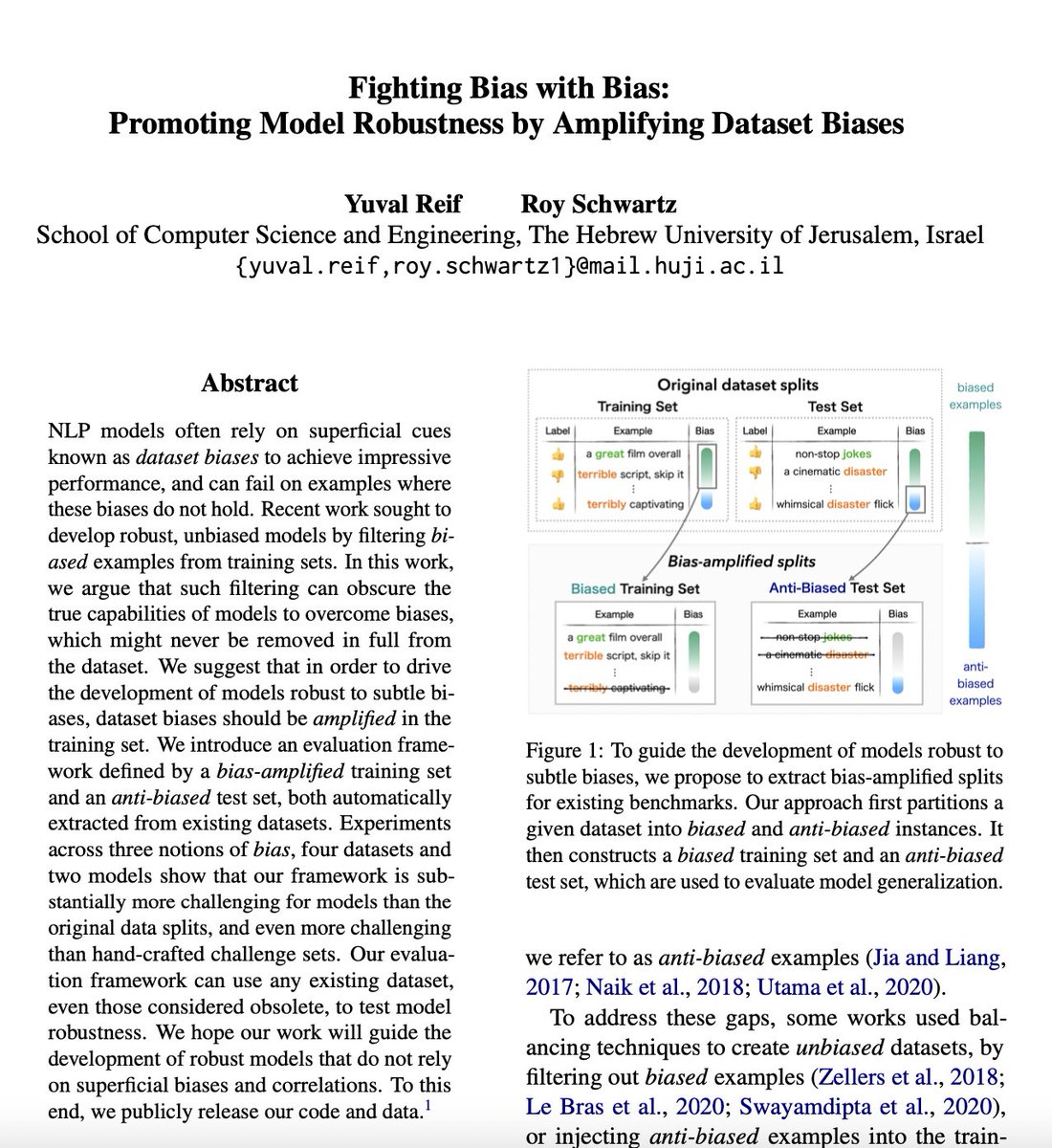

Is dataset debiasing the right path to robust models?

In our work, “Fighting Bias with Bias”, we argue that in order to promote model robustness, we should in fact amplify biases in training sets.

w/ Roy Schwartz

In #ACL2023NLP Findings

Paper: arxiv.org/abs/2305.18917

🧵👇

LLMs are used for reasoning tasks in natural language but lack explicit planning abilities. In arxiv.org/abs/2307.02472, we test whether vector spaces can enable planning by choosing which statements to combine to reach a conclusion. Joint work w/ Kaj Bostrom, Swarat Chaudhuri & Greg Durrett, at the NLRSE workshop at #ACL2023NLP

Does an LLM forget when it learns a new language?

We systematically study catastrophic forgetting in a massively multilingual continual learning framework in 51 languages.

Preprint: arxiv.org/abs/2305.16252

⬇️🧵

The paper was accepted at #acl2023nlp findings #NLProc [1/4]

![Genta Winata (@gentaiscool) on Twitter photo 2023-05-26 00:39:04](https://pbs.twimg.com/media/FxA6SQeWwAAZ_R7.jpg)

Super excited to share our #ACL2023NLP paper! 🙌🏽

📢 Are Machine Rationales (Not) Useful to Humans? Measuring and Improving Human Utility of Free-Text Rationales

📑: arxiv.org/abs/2305.07095

🧵👇 [1/n]

#NLProc #XAI

![Brihi Joshi (@BrihiJ) on Twitter photo 2023-05-15 07:17:39](https://pbs.twimg.com/media/FwJr719XsAUMkC2.jpg)

🎉Thrilled to share that our paper 'World-to-Words: Grounded Open Vocabulary Acquisition through Fast Mapping in Vision-Language Models' was selected for the outstanding paper award at #ACL2023NLP! Thanks @aclmeeting :-)

Let's take grounding seriously in VLMs because...

🧵[1/n]

![Martin Ziqiao Ma (@ziqiao_ma) on Twitter photo 2023-07-10 16:19:50](https://pbs.twimg.com/media/F0r5qAlXgAMOgr-.jpg)