tsvetshop

@tsvetshop

Group account for Prof. Yulia Tsvetkov's lab at @uwnlp. We work on low-resource, multilingual, social-oriented NLP. Details on our website: http://tsvetshop.github.io

ID: 1376970544390750214

30-03-2021 18:54:04

88 Tweets

798 Followers

131 Following

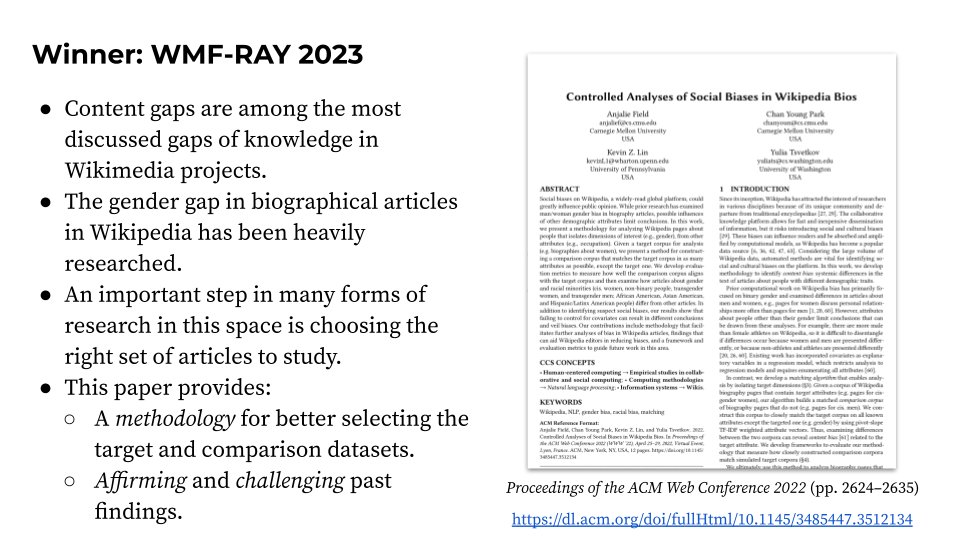

There are many dimensions to bias. University of Washington & Language Technologies Institute | @CarnegieMellon researchers led by #UWAllen professor Yulia Tsvetkov earned the Wikimedia Foundation 2023 Research Award of the Year for advancing a novel methodology for analyzing them in Wikipedia biographies: news.cs.washington.edu/2023/08/21/wik… #NLProc #CSforGood

Here's the pre-print for our FAccT'23 paper 'Examining risks of racial biases in NLP tools for child protective services', with Amanda Coston, Nupoor Gandhi, Alexandra Chouldechova, Emily Putnam-Hornstein, David Steier, and Yulia Tsvetkov (@tsvetshop)

arxiv.org/abs/2305.19409

The first Wikimedia Foundation Research Award of the Year 2023 goes to Anjalie Field, Chan Young Park, Kevin Lin, and @tsvetshop for their work 'Controlled Analyses of Social Biases in Wikipedia Bios'

⭐️ arxiv.org/abs/2101.00078

Congratulations to Anjalie Field, Chan Young Park, and Kevin Lin on receiving the “Research Award of the Year” from WikiResearch 🎉🎉🎉

A special bonus: Jimmy Wales (@jimmy_wales), the founder of Wikipedia, joined the ceremony and presented the award!!!